quic: add quic

Working on adding QUIC back. This is a bit of a rewrite of the original and is not yet complete (thus the draft status).

There are several important bits here:

-

The internal classes are

Endpoint,EndpointWrap,Session, andStream(originally these were namedQuicSocket,QuicSession, andQuicStream. -

EndpointandEndpointWrapare a bit special...

-

Endpointwraps auv_udp_tbut does not wrapUDPWrap. The reason is to give us finer control over how the UDP port is used and configured, and how data flows. -

EndpointWrapis the JS Wrapper object aroundEndpoint. It is designed to be cloneable viaMessagePort. What that means is that anEndpoint(and it's associateduv_udp_tcan essentially be shared across multiple worker threads while still being owned by a single event loop). It makes the implementation a bit more complicated but opens up the ability for processing QUIC sessions across multiple worker threads.

-

Streamis no longer aStreamBase. In fact, while the QUIC implementation will be capable of sending from aReadablestream, and will support being read as aReadable, the QUIC JavaScriptStreamobject will not be a Node.js stream object. This is a major API change from the original implementation. More details on this later, but the usage pattern will be closer to what we see in browser environments (e.g.stream.blob(),stream.readable(),stream.text(), etc). -

There's ~~no~~ only partial JS API exposed here ~~yet~~. That's coming as I work through the rewrite.

This doesn't really seem future-proof in a world in which we will eventually be able to transfer handles between threads.

Will we? It's been discussed but I hadn't seen any activity to that end so I didn't really want to bank on that arriving at any time in the near future.

If/when that time does come, it can be accommodated under this model by modifying EndpointWrap to allow for transfers as well as clones. A transfered EndpointWrap would transfer ownership of the underlying inner Endpoint and it's corresponding uv_udt_t handle to the other event loop without breaking the assumptions here.

Using another thread's I/O facilities kind of breaks the mental model that people have of Workers and renders them fairly pointless in general.

Why? Can you explain? It's not far off from the model we use in Piscina, for instance, where we keep the i/o on the main thread and dispatch the compute to workers.

Gonna be honest, this feels like something that's going to come back to bite us, both from an API perspective (special-casing QUIC vs making the API as close as possible to the TCP one is going to make everything harder for users, not easiers) and from an internals perspective (having some consistent stream API on the C++ side does make sense!).

Having been through all this code many times, I disagree entirely. And I don't plan on special casing the QUIC code -- I plan on revisiting both the HTTP/2 and HTTP/1 APIs to align them closer with this model. In other words, I'm intentionally moving away from the existing streams API (in both internal and external APIs).

This doesn't really seem future-proof in a world in which we will eventually be able to transfer handles between threads.

Will we? It's been discussed but I hadn't seen any activity to that end so I didn't really want to bank on that arriving at any time in the near future.

I mean, "nobody's working on it so it's not going to happen" is ... Yes, it's unlikely that we're going to get a nice API for this on the libuv side anytime soon, something that I had been waiting/hoping for, but we can always choose to do the same things we do for child processes. That's not trivial either, but it's still way less complex than adding special casing for each class that wraps a libuv handle.

Using another thread's I/O facilities kind of breaks the mental model that people have of Workers and renders them fairly pointless in general.

Why? Can you explain? It's not far off from the model we use in Piscina, for instance, where we keep the i/o on the main thread and dispatch the compute to workers.

Because in that case the thread whose event loop owns the handle also performs any active I/O calls -- it's the exact opposite of what piscina does.

As an extreme example, when the handle-owning becomes blocked, other threads won't be able to actually make use of the handle.

This is a blocker, imo.

Gonna be honest, this feels like something that's going to come back to bite us, both from an API perspective (special-casing QUIC vs making the API as close as possible to the TCP one is going to make everything harder for users, not easiers) and from an internals perspective (having some consistent stream API on the C++ side does make sense!).

Having been through all this code many times, I disagree entirely. And I don't plan on special casing the QUIC code -- I plan on revisiting both the HTTP/2 and HTTP/1 APIs to align them closer with this model. In other words, I'm intentionally moving away from the existing streams API (in both internal and external APIs).

You can feel free to disagree, but please don't add APIs without having a plan for how to integrate them with the existing streams API that has consensus among collaborators beforehand.

... that has consensus among collaborators beforehand.

That's why this is all being done in the open with visibility through a draft PR.

... that has consensus among collaborators beforehand.

That's why this is all being done in the open with visibility through a draft PR.

Well... in that case, what's the state that you eventually want to reach in terms of internal streams API?

I mostly really want to avoid a scenario in which one chunk of the code base uses one streams model and another uses another streams model. It's bad enough that we have streams like zlib or fs streams that don't align with other code, but let's not make it worse.

Well... in that case, what's the state that you eventually want to reach in terms of internal streams API?

Let's start with a look at the external API then work our way in.

Let's look at server-side once a session has been created and we've received a stream from the peer...

stream.respondWith({ body: fs.promises.open('file') }); // Promise<FileHandle>

stream.respondWith({ body: await fs.promises.open('file') }); // FileHandle

stream.respondWith({ body: fs.openReadStream('...') }); // any stream.Readable

stream.respondWith({ body: blob }); // buffer.Blob

stream.respondWith({ body: buffer }); // Buffer/TypedArray/ArrayBuffer

stream.respondWith({ body: 'hello world' }); // string

stream.blob() // get the stream contents as a Blob

stream.readable() // get the stream contents as a stream.Readable

stream.text() // get the stream contents as a string

// ...

On the open stream side:

session.openStream({ unidirectional: true, body: fs.promises.open('file') }); // Promise<FileHandle>

session.openStream({ unidirectional: true, body: fs.openReadStream('file') }); // stream.Readable

session.openStream({ unidirectional: true, body: 'hello world' }); // string

// ...

This is largely mockup currently because I'm still working through the details and ergonomics but it gives a basic idea. Rather than the QUIC Stream being a stream itself, the API will accept any Node.js Readable as a source and will be capable of being read as a Readable for folks who want to stick with that API.

From here, on the internals, the implementation will be capable of consuming from any Readable and from any StreamBase. It will just also be capable of consuming from TypedArray, Blob, strings, etc.

As you are already aware, internally, the QUIC implementation already has to be different because of the way ngtcp2 works. Outbound data has to be queued internally with potentially multiple passes at attempting to read. Whenever a QUIC packet is serialized, we pass ngtcp2 a pointer to the available data and it decides how much it wishes to consume, we are then required to retain the sent data in memory in case ngtcp2 determines that there has been packet loss. Any single chunk of outbound data could be passed multiple times into ngtcp2, and any packet serialization could be smaller or larger than any single uv_buf_t that is passed through via a StreamBase instance. We can only free the retained data once successful receipt has been acknowledged. This requires functionality that does not exist in the current StreamBase. Inbound data is easier to handle, and when usercode chooses to consume that as a stream.Readable, it will essentially just pass through a StreamBase instance and out via stream.Readable, but there will be other options (such as "consuming" the data as a Blob, in which case the data may never actually pass up into the JS realm at all).

Further, there is a bottleneck currently built into the JS to StreamBase design in that it will queue outbound writes at the JS side until the previous write callback is invoked. However, once invoked the Buffer reference is released, allowing it to be garbage collected. That means we cannot invoke the callback until after the data is acknowledged, which bottlenecks our outbound data flow. StreamBase currently does not give us access to the Buffer to force it to be retained -- it only gives us a set of uv_buf_t's. This means that, when using Stream, the outbound data ends up needlessly being buffered twice and causes our quic stream to be starved of data until all outstanding acks come in. So while the api will support consuming the existing JS to StreamBase model, it won't be limited by it. When reading from a native StreamBase instance, such as a FileHandle, we thankfully won't have that limitation. Reading from those will be straightforward, pretty simple, and very performant -- tho it still needs a bridge into the pull model required by ngtcp2.

@nodejs/collaborators ... While this still definitely has a ways to go before it's ready to land (needs lots of tests and likely has quite a few bugs remaining)... I'm going to go ahead and mark it ready to review. It's going to take a while, and a significant effort, to review this properly. I'm happy to jump on a zoom with reviewers to walk them through things to help get them oriented and ask questions.

/cc @mcollina

@jasnell correct me if I'm wrong but is it expected that someone implementing a server would have to implement the clienthello promise in order to implement certificate rotation (i.e on expiration)?

That's the current thinking yes, but I plan to revisit that. It's not that different than what we have to do now except it's promise based instead of event based. However, I plan to make that part easier once other parts are finished

@jasnell It would be my hope too that it would be made easier. Thanks for confirming the current state though. I can manage that.

I'll convert my testing app from the prevous quic API when I have a chance and see what breaks.

We keep coming back to obj.readableNodeStream() etc... do we want to standardize this interface in some way that could provide best performance regardless which way it's consumed? I tried something along that way: https://github.com/nodejs/undici/pull/895/files#diff-d79c8d03275bb04e4028109c813b934fcf5ae90c4dfc07a0f2336c5790b61233R39-116

So there are 2 parts of this, i.e. we usually have two glue steps every time we consume data, e.g.

undici native -> node streams (1) -> web streams (2) -> consumer

- Choosing a "native" API when implementing a data source. This is the primary problem with e.g.

quic,undicietc. - Transforming into the API expected by the consumer. This is the primary problem with

compose.

1, Is about implementing some kind of body mixin where the different ways of consuming can be implemented without glue. I've made an example of this here.

2, Is about implementing some kind of "lazy" intermediate stream representation.

So basically what we want is:

undici native -> lazy -> web streams which collapses to undici native -> webstreams -> consumer.

Here, I can bypass that question entirely by making it possible to provide your own low level consumer as opposed to getting either a node stream or web stream. The low level consumer plugs directly into the native layer and just receives blocks of data via callback as they are received without any backpressure. As soon as it's attached, any buffered data is pushed immediately and synchronously, and while it is attached all chunks are pushed immediately and synchronously. We can allow frameworks like undici, fastify, etc to decide from there what else to do.

I'm sorry. I posted that comment in the wrong place. It was meant for https://github.com/nodejs/node/pull/39483. Though I guess it is slightly relevant here as well.

Feature detection isnt working with quictls/openssl 3.0.0beta2+quic the QUIC flag is in quic.h not crypto.h

Flag is the same so all I did was swap crypto.h for quic.h in getsharedopensslhasquic.py

@jasnell No luck building on the latest new-quic branch

/build/node/out/Release/.deps//build/node/out/Release/obj.target/libnode/gen/src/node/inspector/protocol/NodeRuntime.o.d.raw -c

../src/quic/buffer.cc: In constructor 'node::quic::JSQuicBufferConsumer::JSQuicBufferConsumer(node::Environment*, v8::Local<v8::Object>)':

../src/quic/buffer.cc:333:39: error: 'PROVIDER_JSQUICBUFFERCONSUMER' is not a member of 'node::AsyncWrap'

333 | : AsyncWrap(env, wrap, AsyncWrap::PROVIDER_JSQUICBUFFERCONSUMER) {}

| ^~~~~~~~~~~~~~~~~~~~~~~~~~~~~

../src/quic/buffer.cc: In constructor 'node::quic::StreamSource::StreamSource(node::Environment*, v8::Local<v8::Object>)':

../src/quic/buffer.cc:585:39: error: 'PROVIDER_STREAMSOURCE' is not a member of 'node::AsyncWrap'

585 | : AsyncWrap(env, wrap, AsyncWrap::PROVIDER_STREAMSOURCE),

| ^~~~~~~~~~~~~~~~~~~~~

../src/quic/buffer.cc: In constructor 'node::quic::StreamBaseSource::StreamBaseSource(node::Environment*, v8::Local<v8::Object>, node::StreamBase*, node::BaseObjectPtr<node::AsyncWrap>)':

../src/quic/buffer.cc:680:38: error: 'PROVIDER_STREAMBASESOURCE' is not a member of 'node::AsyncWrap'

680 | : AsyncWrap(env, obj, AsyncWrap::PROVIDER_STREAMBASESOURCE),

| ^~~~~~~~~~~~~~~~~~~~~~~~~

../src/quic/buffer.cc: In constructor 'node::quic::BlobSource::BlobSource(node::Environment*, v8::Local<v8::Object>, node::BaseObjectPtr<node::Blob>)':

../src/quic/buffer.cc:803:39: error: 'PROVIDER_BLOBSOURCE' is not a member of 'node::AsyncWrap'

803 | : AsyncWrap(env, wrap, AsyncWrap::PROVIDER_BLOBSOURCE),

| ^~~~~~~~~~~~~~~~~~~

In file included from ../src/quic/endpoint.cc:5:

../src/quic/qlog.h: In constructor 'node::quic::LogStream::LogStream(node::Environment*, v8::Local<v8::Object>)':

../src/quic/qlog.h:53:40: error: 'PROVIDER_LOGSTREAM' is not a member of 'node::AsyncWrap'

53 | : AsyncWrap(env, obj, AsyncWrap::PROVIDER_LOGSTREAM),

| ^~~~~~~~~~~~~~~~~~

../src/quic/endpoint.cc: In constructor 'node::quic::Endpoint::SendWrap::SendWrap(node::Environment*, v8::Local<v8::Object>, const std::shared_ptr<node::SocketAddress>&, std::unique_ptr<node::quic::Packet>, node::BaseObjectPtr<node::quic::EndpointWrap>)':

../src/quic/endpoint.cc:490:43: error: 'PROVIDER_QUICSENDWRAP' is not a member of 'node::AsyncWrap'

490 | : UdpSendWrap(env, object, AsyncWrap::PROVIDER_QUICSENDWRAP),

| ^~~~~~~~~~~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'bool node::quic::Endpoint::AcceptInitialPacket(node::quic::quic_version, const node::quic::CID&, const node::quic::CID&, std::shared_ptr<v8::BackingStore>, size_t, const std::shared_ptr<node::SocketAddress>&, const std::shared_ptr<node::SocketAddress>&)':

../src/quic/endpoint.cc:599:34: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

599 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc:623:32: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

623 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc:676:44: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

676 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::ImmediateConnectionClose(node::quic::quic_version, const node::quic::CID&, const node::quic::CID&, const std::shared_ptr<node::SocketAddress>&, const std::shared_ptr<node::SocketAddress>&, node::quic::error_code)':

../src/quic/endpoint.cc:780:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

780 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::OnReceive(size_t, const uv_buf_t&, const std::shared_ptr<node::SocketAddress>&)':

../src/quic/endpoint.cc:879:32: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

879 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc:936:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

936 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc:954:32: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

954 | Debug(env_, DebugCategory::QUICENDPOINT, "Endpoint: No existing session\n");

| ^~~~~~~~~~~~

../src/quic/endpoint.cc:969:34: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

969 | Debug(env_, DebugCategory::QUICENDPOINT, "Endpoint: Stateless reset\n");

| ^~~~~~~~~~~~

../src/quic/endpoint.cc:981:34: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

981 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc:988:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

988 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::ProcessOutbound()':

../src/quic/endpoint.cc:1003:24: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1003 | DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::ProcessSendFailure(int)':

../src/quic/endpoint.cc:1036:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1036 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::SendPacket(node::BaseObjectPtr<node::quic::Endpoint::SendWrap>)':

../src/quic/endpoint.cc:1064:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1064 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'bool node::quic::Endpoint::SendRetry(node::quic::quic_version, const node::quic::CID&, const node::quic::CID&, const std::shared_ptr<node::SocketAddress>&, const std::shared_ptr<node::SocketAddress>&)':

../src/quic/endpoint.cc:1081:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1081 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc:1094:32: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1094 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'bool node::quic::Endpoint::SendStatelessReset(const node::quic::CID&, const std::shared_ptr<node::SocketAddress>&, const std::shared_ptr<node::SocketAddress>&, size_t)':

../src/quic/endpoint.cc:1121:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1121 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'v8::Maybe<long unsigned int> node::quic::Endpoint::GenerateNewToken(uint8_t*, const std::shared_ptr<node::SocketAddress>&)':

../src/quic/endpoint.cc:1177:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1177 | Debug(env_, DebugCategory::QUICENDPOINT, "Endpoint: Generating new token\n");

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::SendVersionNegotiation(node::quic::quic_version, const node::quic::CID&, const node::quic::CID&, const std::shared_ptr<node::SocketAddress>&, const std::shared_ptr<node::SocketAddress>&)':

../src/quic/endpoint.cc:1208:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1208 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'int node::quic::Endpoint::StartReceiving()':

../src/quic/endpoint.cc:1250:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1250 | Debug(env_, DebugCategory::QUICENDPOINT, "Endpoint: Start receiving\n");

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::MaybeStopReceiving()':

../src/quic/endpoint.cc:1258:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1258 | Debug(env_, DebugCategory::QUICENDPOINT, "Endpoint: Stop receiving\n");

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::set_validated_address(const std::shared_ptr<node::SocketAddress>&)':

../src/quic/endpoint.cc:1280:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1280 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::IncrementStatelessResetCounter(const std::shared_ptr<node::SocketAddress>&)':

../src/quic/endpoint.cc:1295:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1295 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::IncrementSocketAddressCounter(const std::shared_ptr<node::SocketAddress>&)':

../src/quic/endpoint.cc:1304:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1304 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::DecrementSocketAddressCounter(const std::shared_ptr<node::SocketAddress>&)':

../src/quic/endpoint.cc:1313:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1313 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In constructor 'node::quic::Endpoint::UDP::UDP(node::Environment*, v8::Local<v8::Object>, node::quic::Endpoint*)':

../src/quic/endpoint.cc:1376:22: error: 'PROVIDER_QUICENDPOINTUDP' is not a member of 'node::AsyncWrap'

1376 | AsyncWrap::PROVIDER_QUICENDPOINTUDP),

| ^~~~~~~~~~~~~~~~~~~~~~~~

../src/quic/stream.cc: In constructor 'node::quic::Stream::Stream(node::quic::Session*, v8::Local<v8::Object>, node::quic::stream_id, node::quic::Buffer::Source*)':

../src/quic/stream.cc:154:52: error: 'PROVIDER_QUICSTREAM' is not a member of 'node::AsyncWrap'

154 | : AsyncWrap(session->env(), object, AsyncWrap::PROVIDER_QUICSTREAM),

| ^~~~~~~~~~~~~~~~~~~

../src/quic/endpoint.cc: In constructor 'node::quic::EndpointWrap::EndpointWrap(node::Environment*, v8::Local<v8::Object>, std::shared_ptr<node::quic::Endpoint>)':

../src/quic/endpoint.cc:1767:41: error: 'PROVIDER_QUICENDPOINT' is not a member of 'node::AsyncWrap'

1767 | : AsyncWrap(env, object, AsyncWrap::PROVIDER_QUICENDPOINT),

| ^~~~~~~~~~~~~~~~~~~~~

In file included from ../src/quic/session.cc:5:

../src/quic/qlog.h: In constructor 'node::quic::LogStream::LogStream(node::Environment*, v8::Local<v8::Object>)':

../src/quic/qlog.h:53:40: error: 'PROVIDER_LOGSTREAM' is not a member of 'node::AsyncWrap'

53 | : AsyncWrap(env, obj, AsyncWrap::PROVIDER_LOGSTREAM),

| ^~~~~~~~~~~~~~~~~~

make[1]: *** [libnode.target.mk:393: /build/node/out/Release/obj.target/libnode/src/quic/buffer.o] Error 1

make[1]: *** Waiting for unfinished jobs....

../src/quic/session.cc: In constructor 'node::quic::Session::Session(node::quic::EndpointWrap*, v8::Local<v8::Object>, const std::shared_ptr<node::SocketAddress>&, const std::shared_ptr<node::SocketAddress>&, const std::shared_ptr<node::quic::Session::Options>&, node::BaseObjectPtr<node::crypto::SecureContext>&, const node::quic::CID&, ngtcp2_crypto_side)':

../src/quic/session.cc:1103:53: error: 'PROVIDER_QUICSESSION' is not a member of 'node::AsyncWrap'

1103 | : AsyncWrap(endpoint->env(), object, AsyncWrap::PROVIDER_QUICSESSION),

| ^~~~~~~~~~~~~~~~~~~~

make[1]: *** [libnode.target.mk:393: /build/node/out/Release/obj.target/libnode/src/quic/stream.o] Error 1

make[1]: *** [libnode.target.mk:393: /build/node/out/Release/obj.target/libnode/src/quic/endpoint.o] Error 1

make[1]: *** [libnode.target.mk:393: /build/node/out/Release/obj.target/libnode/src/quic/session.o] Error 1

Feature detection isnt working with quictls/openssl 3.0.0beta2+quic the QUIC flag is in quic.h not crypto.h

Flag is the same so all I did was swap

crypto.hforquic.hin getsharedopensslhasquic.py

FWIW https://github.com/nodejs/node/pull/38512/commits/788e9493e9b9b2f99b1a69fbbe9e7a83c02027c0 is the equivalent in the ongoing OpenSSL 3 PR (https://github.com/nodejs/node/pull/38512).

Quick update... I'll be working on this more next week to get unresolved comments resolved and get the CI passing so we can land. @mcollina @ronag and others... It would be great to start getting some more active review here. If it's helpful, I'm happy to set up some time in the calendar to walk through the implementation with an interactive code review session.

Resolved many of the outstanding comments and also added a detailed readme that should help with review.

@jasnell

A clean build still fails

../src/quic/buffer.cc:333:39: error: 'PROVIDER_JSQUICBUFFERCONSUMER' is not a member of 'node::AsyncWrap'

333 | : AsyncWrap(env, wrap, AsyncWrap::PROVIDER_JSQUICBUFFERCONSUMER) {}

| ^~~~~~~~~~~~~~~~~~~~~~~~~~~~~

../src/quic/buffer.cc: In constructor 'node::quic::StreamSource::StreamSource(node::Environment*, v8::Local<v8::Object>)':

../src/quic/buffer.cc:585:39: error: 'PROVIDER_STREAMSOURCE' is not a member of 'node::AsyncWrap'

585 | : AsyncWrap(env, wrap, AsyncWrap::PROVIDER_STREAMSOURCE),

| ^~~~~~~~~~~~~~~~~~~~~

../src/quic/buffer.cc: In constructor 'node::quic::StreamBaseSource::StreamBaseSource(node::Environment*, v8::Local<v8::Object>, node::StreamBase*, node::BaseObjectPtr<node::AsyncWrap>)':

../src/quic/buffer.cc:680:38: error: 'PROVIDER_STREAMBASESOURCE' is not a member of 'node::AsyncWrap'

680 | : AsyncWrap(env, obj, AsyncWrap::PROVIDER_STREAMBASESOURCE),

| ^~~~~~~~~~~~~~~~~~~~~~~~~

../src/quic/buffer.cc: In constructor 'node::quic::BlobSource::BlobSource(node::Environment*, v8::Local<v8::Object>, node::BaseObjectPtr<node::Blob>)':

../src/quic/buffer.cc:803:39: error: 'PROVIDER_BLOBSOURCE' is not a member of 'node::AsyncWrap'

803 | : AsyncWrap(env, wrap, AsyncWrap::PROVIDER_BLOBSOURCE),

| ^~~~~~~~~~~~~~~~~~~

In file included from ../src/quic/endpoint.cc:5:

../src/quic/qlog.h: In constructor 'node::quic::LogStream::LogStream(node::Environment*, v8::Local<v8::Object>)':

../src/quic/qlog.h:53:40: error: 'PROVIDER_LOGSTREAM' is not a member of 'node::AsyncWrap'

53 | : AsyncWrap(env, obj, AsyncWrap::PROVIDER_LOGSTREAM),

| ^~~~~~~~~~~~~~~~~~

../src/quic/endpoint.cc: In constructor 'node::quic::Endpoint::SendWrap::SendWrap(node::Environment*, v8::Local<v8::Object>, const std::shared_ptr<node::SocketAddress>&, std::unique_ptr<node::quic::Packet>, node::BaseObjectPtr<node::quic::EndpointWrap>)':

../src/quic/endpoint.cc:490:43: error: 'PROVIDER_QUICSENDWRAP' is not a member of 'node::AsyncWrap'

490 | : UdpSendWrap(env, object, AsyncWrap::PROVIDER_QUICSENDWRAP),

| ^~~~~~~~~~~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'bool node::quic::Endpoint::AcceptInitialPacket(node::quic::quic_version, const node::quic::CID&, const node::quic::CID&, std::shared_ptr<v8::BackingStore>, size_t, const std::shared_ptr<node::SocketAddress>&, const std::shared_ptr<node::SocketAddress>&)':

../src/quic/endpoint.cc:599:34: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

599 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc:623:32: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

623 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc:676:44: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

676 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::ImmediateConnectionClose(node::quic::quic_version, const node::quic::CID&, const node::quic::CID&, const std::shared_ptr<node::SocketAddress>&, const std::shared_ptr<node::SocketAddress>&, node::quic::error_code)':

../src/quic/endpoint.cc:780:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

780 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::OnReceive(size_t, const uv_buf_t&, const std::shared_ptr<node::SocketAddress>&)':

../src/quic/endpoint.cc:879:32: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

879 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc:936:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

936 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc:954:32: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

954 | Debug(env_, DebugCategory::QUICENDPOINT, "Endpoint: No existing session\n");

| ^~~~~~~~~~~~

../src/quic/endpoint.cc:969:34: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

969 | Debug(env_, DebugCategory::QUICENDPOINT, "Endpoint: Stateless reset\n");

| ^~~~~~~~~~~~

../src/quic/endpoint.cc:981:34: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

981 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc:988:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

988 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::ProcessOutbound()':

../src/quic/endpoint.cc:1003:24: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1003 | DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::ProcessSendFailure(int)':

../src/quic/endpoint.cc:1036:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1036 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::SendPacket(node::BaseObjectPtr<node::quic::Endpoint::SendWrap>)':

../src/quic/endpoint.cc:1064:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1064 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'bool node::quic::Endpoint::SendRetry(node::quic::quic_version, const node::quic::CID&, const node::quic::CID&, const std::shared_ptr<node::SocketAddress>&, const std::shared_ptr<node::SocketAddress>&)':

../src/quic/endpoint.cc:1081:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1081 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc:1094:32: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1094 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'bool node::quic::Endpoint::SendStatelessReset(const node::quic::CID&, const std::shared_ptr<node::SocketAddress>&, const std::shared_ptr<node::SocketAddress>&, size_t)':

../src/quic/endpoint.cc:1121:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1121 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'v8::Maybe<long unsigned int> node::quic::Endpoint::GenerateNewToken(uint8_t*, const std::shared_ptr<node::SocketAddress>&)':

../src/quic/endpoint.cc:1177:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1177 | Debug(env_, DebugCategory::QUICENDPOINT, "Endpoint: Generating new token\n");

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::SendVersionNegotiation(node::quic::quic_version, const node::quic::CID&, const node::quic::CID&, const std::shared_ptr<node::SocketAddress>&, const std::shared_ptr<node::SocketAddress>&)':

../src/quic/endpoint.cc:1208:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1208 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'int node::quic::Endpoint::StartReceiving()':

../src/quic/endpoint.cc:1250:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1250 | Debug(env_, DebugCategory::QUICENDPOINT, "Endpoint: Start receiving\n");

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::MaybeStopReceiving()':

../src/quic/endpoint.cc:1258:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1258 | Debug(env_, DebugCategory::QUICENDPOINT, "Endpoint: Stop receiving\n");

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::set_validated_address(const std::shared_ptr<node::SocketAddress>&)':

../src/quic/endpoint.cc:1280:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1280 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::IncrementStatelessResetCounter(const std::shared_ptr<node::SocketAddress>&)':

../src/quic/endpoint.cc:1295:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1295 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::IncrementSocketAddressCounter(const std::shared_ptr<node::SocketAddress>&)':

../src/quic/endpoint.cc:1304:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1304 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In member function 'void node::quic::Endpoint::DecrementSocketAddressCounter(const std::shared_ptr<node::SocketAddress>&)':

../src/quic/endpoint.cc:1313:30: error: 'QUICENDPOINT' is not a member of 'node::DebugCategory'

1313 | Debug(env_, DebugCategory::QUICENDPOINT,

| ^~~~~~~~~~~~

../src/quic/endpoint.cc: In constructor 'node::quic::Endpoint::UDP::UDP(node::Environment*, v8::Local<v8::Object>, node::quic::Endpoint*)':

../src/quic/endpoint.cc:1376:22: error: 'PROVIDER_QUICENDPOINTUDP' is not a member of 'node::AsyncWrap'

1376 | AsyncWrap::PROVIDER_QUICENDPOINTUDP),

| ^~~~~~~~~~~~~~~~~~~~~~~~

../src/quic/endpoint.cc: In constructor 'node::quic::EndpointWrap::EndpointWrap(node::Environment*, v8::Local<v8::Object>, std::shared_ptr<node::quic::Endpoint>)':

../src/quic/endpoint.cc:1767:41: error: 'PROVIDER_QUICENDPOINT' is not a member of 'node::AsyncWrap'

1767 | : AsyncWrap(env, object, AsyncWrap::PROVIDER_QUICENDPOINT),

| ^~~~~~~~~~~~~~~~~~~~~

../src/quic/stream.cc: In constructor 'node::quic::Stream::Stream(node::quic::Session*, v8::Local<v8::Object>, node::quic::stream_id, node::quic::Buffer::Source*)':

../src/quic/stream.cc:154:52: error: 'PROVIDER_QUICSTREAM' is not a member of 'node::AsyncWrap'

154 | : AsyncWrap(session->env(), object, AsyncWrap::PROVIDER_QUICSTREAM),

| ^~~~~~~~~~~~~~~~~~~

In file included from ../src/quic/session.cc:5:

../src/quic/qlog.h: In constructor 'node::quic::LogStream::LogStream(node::Environment*, v8::Local<v8::Object>)':

../src/quic/qlog.h:53:40: error: 'PROVIDER_LOGSTREAM' is not a member of 'node::AsyncWrap'

53 | : AsyncWrap(env, obj, AsyncWrap::PROVIDER_LOGSTREAM),

| ^~~~~~~~~~~~~~~~~~

../src/quic/session.cc: In constructor 'node::quic::Session::Session(node::quic::EndpointWrap*, v8::Local<v8::Object>, const std::shared_ptr<node::SocketAddress>&, const std::shared_ptr<node::SocketAddress>&, const std::shared_ptr<node::quic::Session::Options>&, node::BaseObjectPtr<node::crypto::SecureContext>&, const node::quic::CID&, ngtcp2_crypto_side)':

../src/quic/session.cc:1103:53: error: 'PROVIDER_QUICSESSION' is not a member of 'node::AsyncWrap'

1103 | : AsyncWrap(endpoint->env(), object, AsyncWrap::PROVIDER_QUICSESSION),

| ^~~~~~~~~~~~~~~~~~~~

Dockerfile for build:

FROM ubuntu:20.04

RUN apt update && \

apt install -y software-properties-common && \

add-apt-repository ppa:ubuntu-toolchain-r/test && \

apt update && \

apt install -y \

g++ \

python \

ccache \

build-essential \

git wget \

python3-distutils && \

apt-get clean && \

rm -rf /var/lib/apt/lists/*

ENV OPENSSL_VERSION="3.0.0-beta2"

RUN mkdir -p build

RUN set -x \

&& mkdir -p /tmp; cd /tmp \

&& git clone https://github.com/quictls/openssl.git -b openssl-3.0.0-beta2+quic \

&& cd openssl \

&& ./Configure linux-x86_64 shared \

&& make -j$(nproc) \

&& make test \

&& make install \

&& cd .. \

&& echo /usr/local/lib64 > /etc/ld.so.conf.d/local64.conf \

&& ldconfig \

&& cd /build \

&& git clone https://github.com/jasnell/node.git --depth 1 --branch new-quic \

&& cd node \

&& ./configure --shared-openssl --shared-openssl-libpath /usr/local/lib64 --shared-openssl-includes /tmp/openssl/include/ \

&& make -j$(nproc) \

&& make -j$(nproc) install PREFIX=/usr/local \

&& rm -rf /build /tmp/openssl

CMD [ "node" ]

@splitice ... I haven't yet started making things work with openssl 3, which (I think) moves the define guard. I haven't forgotten, I've just been focusing on other bits.

Given that this is such a large and complex PR... On Friday August 27th at 9am Pacific Time I will be hosting the first of a few zoom calls to start introducing and interactively walking through the QUIC implementation to help potential PR reviewers get oriented. If you'd like to be included on that zoom call, please private message me on twitter or send me an email to get included and let me know what email address I should use for the calendar invite. For this first session I will have a max of 10 people.

Very cool and exciting! I’m just wondering if support for multicasting is planned for the future and what it would look like?

I’m just thinking you could bind to a multicast address, and then create create quic sockets to all the listeners you want to add. The listeners try to listen to the address for a hand shake, but they respond through the original socket that told them to listen. If everything is ok, it starts broadcasting, but all the back pressure/checking for packet loss happens through that original quic socket stream you used to tell the listener to listen too.

Of course, you could also send datagrams over that multicast address too, and let the users check for pocket loss.

Is this something that could be implemented? Sorry if this isn’t the place for this, I’m not sure if this is the right place to ask this

No, multicast is not planned and not currently supported by the protocol. The TLS integration requires a handshake between the client and server. I guess someone could make it work by sharing the negotiated TLS session with multiple endpoints but the path validation functions would have to be disabled. Not impossible but also not a goal at all.

@jasnell what version of OpenSSL is this supposed to be built with?

@jasnell @danbev thanks for the update I can confirm that now that the instructions in my dockefile build this PR.

Havent done any testing yet.

@jasnell Fails to build on 32-bit.

"../src/quic/session.cc:374:6: error: no declaration matches ‘void node::quic::Session::CryptoContext::AcknowledgeCryptoData(ngtcp2_crypto_level, uint64_t)’", " void Session::CryptoContext::AcknowledgeCryptoData(", " ^~~~~~~", "In file included from ../src/quic/endpoint.h:9,", " from ../src/quic/session.cc:3:", "../src/quic/session.h:569:10: note: candidate is: ‘void node::quic::Session::CryptoContext::AcknowledgeCryptoData(ngtcp2_crypto_level, size_t)’", " void AcknowledgeCryptoData(ngtcp2_crypto_level level, size_t datalen);", " ^~~~~~~~~~~~~~~~~~~~~", "../src/quic/session.h:555:9: note: ‘class node::quic::Session::CryptoContext’ defined here", " class CryptoContext final : public MemoryRetainer {", " ^~~~~~~~~~~~~", "../src/quic/session.cc: In member function ‘bool node::quic::Session::SendDatagram(const std::shared_ptr<v8::BackingStore>&, size_t, size_t)’:", "../src/quic/session.cc:1558:46: error: no matching function for call to ‘min(const size_t&, uint64_t&)’", " transport_params_.max_datagram_frame_size);", " ^"

I reviewed. There still looks to be 32bit issues. Basically size_t is not always 64bit. There is implicit casting going on e.g in calls to AcknowledgeCryptoData (parameter datalen)

@jasnell any updates on this?

Really waiting on more reviews and for my time to free up a bit more

I can confirm that @jasnell your latest commits now build on x86, armv7 (32bit) and of course x86_64.

First bug found too.

When responding with responseWith from the server the client does not get a readable event before the final close (or at all) from the NodeJS style stream

Rough code to replicate / workaround (needs q/q-lite):

_streamReadUntil: async function(stream, fn = null, cancelFn = ()=>{}){

// read until end

const deferred = Q.defer()

let ret = Buffer.alloc(0), ended = false

if(!fn) {

stream.once('end', ()=>{

ended = true

deferred.resolve(ret)

})

fn = ()=>ended

}

let chunk

const doReadable = async () => {

console.log("doReadable")

try {

while (null !== (chunk = stream.read(1))) {

console.log({chunk})

ret = Buffer.concat([ret, chunk])

if(fn(chunk)) {

deferred.resolve(ret)

}

}

} catch(ex){

deferred.reject(ex)

}

}

stream.on('readable', doReadable)

if(stream.readableLength){

doReadable()

}

const doClose = async (start = 'Clos')=>{

doReadable() // the workaround

console.log("doClose")

setImmediate(()=>{

deferred.reject(new Error(`${start}ing due to insufficient data after receiving ${ret.length}`))

})

}

stream.once('close', doClose)

const doEnd = ()=>{

if(ended) return

Q.delay(MaxEndTimeToTransmit).then(()=>doClose('End'))

}

stream.once('end', doEnd)

deferred.promise.catch(()=>{}).then(()=>{

stream.removeListener('readable', doReadable)

stream.removeListener('close', doClose)

stream.removeListener('end', doEnd)

})

cancelFn(()=>deferred.reject('cancelled'))

return await deferred.promise

},

This code works with normal nodejs streams (TCP, original QUIC api, fs)

I'm working on getting a feature branch supporting this API for some internal software so I can see how it goes in the real world. The new API makes this a bit more challenging than all the other APIs we have experimented with - but I think it will be doable.

@jasnell What is the correct way of removing a node stream from the consumer? I'm seeing a memory leak currently with node readable stream usage.

I've tried.

- disposing the node stream

- cancelling the underlying quic stream

In both cases I'm seeing that Stream is retaining JS Quic buffer consumer and JS quic buffer consumer is retaining stream.

I did an inspect on the stream at the last point I have a reference to it and got:

Stream {

closed: Promise {

undefined,

[Symbol(async_id_symbol)]: 2881,

[Symbol(trigger_async_id_symbol)]: 103,

[Symbol(destroyed)]: [Object]

},

destroyed: true,

id: 136n,

headers: {

promise: [Promise],

resolve: [Function (anonymous)],

reject: [Function (anonymous)]

},

locked: true,

serverInitiated: false,

session: undefined,

stats: StreamStats {

createdAt: 163028677280813,

duration: 3006538832,

lastReceivedAt: 0,

lastAcknowledgeAt: 0,

closingAt: 0,

bytesReceived: 8,

bytesSent: 0,

maxOffset: 1048584,

maxOffsetAcknowledged: 0,

maxOffsetReceived: 0,

finalSize: 0

},

trailers: Promise {

<pending>,

[Symbol(async_id_symbol)]: 2883,

[Symbol(trigger_async_id_symbol)]: 103,

[Symbol(destroyed)]: [Object]

},

unidirectional: false

}

My initial analysis seems to suggest that it might be the result of inbound_consumer_strong_ptr_ never being released. I did note that handle Destroy() never clears it either.

Ok,

so improvements:

- the inbound consumer needs to be cleared in DoDestroy, this removes one thing keeping the quic buffer alive

// clear inbound consumer

inbound_consumer_ = nullptr;

inbound_consumer_strong_ptr_.reset();

- The QUIC buffer consumer is still live though, it's being kept alive by it's own GC root (I'm still trying to find this).

The QUIC buffer consumer is keeping the stream alive through the reference traken for it's emit (closure) implementation.

I think problem 2 is beyond my understanding of NodeJS @jasnell

Reported when running an automated test case involving connection interruption.

node[19785]: ../src/quic/stream.cc:583:void node::quic::Stream::Resume(): Assertion `session()' failed.

1: 0x55741f64f5a4 node::Abort() [node]

2: 0x55741f64f638 [node]

3: 0x55741f7f3044 node::quic::Stream::Resume() [node]

4: 0x55741f922108 v8::internal::FunctionCallbackArguments::Call(v8::internal::CallHandlerInfo) [node]

5: 0x55741f922a65 [node]

6: 0x55741f922faf v8::internal::Builtin_HandleApiCall(int, unsigned long*, v8::internal::Isolate*) [node]

7: 0x5574202a4f39 [node]

- Picked this up looping our application tests

node:internal/quic/binding:185

this[owner_symbol][kResetStream](error);

^

TypeError: this[owner_symbol][kResetStream] is not a function

at Session.onStreamReset (node:internal/quic/binding:185:35)

Thank you for the testing and reviewing @splitice ! Really appreciated! My plan on this PR is to return to it in mid to late december to give it some needed updates. I've been a bit too buried in Cloudflare day job stuff to be able to give it the necessary attention. I do plan on trying to split it up into a number of smaller PRs and will be refactoring some of the public facing APIs further.

@jasnell thats unfortunate timing for me given my availability is largely this week. I'll keep testing but I'm a bit limited currently with the crash frequency preventing the longer running tests from completing.

I'm testing a couple of crude patches for 1 & 3, a potential fix for 2 (building now, but unsure if I'm even on the right track, advanced native lifeycycle management in node isnt an area I'm super familiar with) and added some additional debug info for 4 (I'm guessing it's the session object for some reason, its logged so I'll know next time).

Yeah, I get it, and I'm sorry I can't dedicate time sooner. I'm in the middle of a complex refactoring and expanded implementation of the WHATWG streams API in Workers and it's pretty much sucked away all the spare time I have at the moment. I will be getting back to this just as soon as I'm able to and know that your testing is deeply appreciated. Everything you're doing is very helpful.

In regards to 4 - this[owner_symbol] is a session object that should be a stream. In retrospect it's right there in the stacktrace sigh.

node:internal/quic/binding:188

this[owner_symbol][kResetStream](error);

^

TypeError: this[owner_symbol][kResetStream] is not a function

at Session.onStreamReset (node:internal/quic/binding:188:35)

Now I just need to figure out why. I believe I've "fixed" 1, 2 & 3. Most importantly it now looks like Streams are getting freed.

Found matter 4.

My work is not PR ready (nor is it intended to be) but in case it helps: https://github.com/HalleyAssist/node/commit/6e53ec3083131e0ef1eff1cf9631c5aea74f5ac2

This squashed commit hasnt been tested yet (currently building for all platforms) but the previous pre-squash build has been tested.

NOTE: I also forward ported this to 7.1.0 as 7.0.0 was buggy on release and interfering with our test suite. No QUIC code base changes were needed for this.

If an error (triggering a 'error' event) occurs during Session.close() the promise (i.e await session.close()) never returns.

end()ing an outgoing stream source doesnt transmit an end of stream to the remote end. Outside of HTTP3 ShutdownStreamWrite doesnt appear to be used. Neither does stream.destroy()

ResetStream(kQuicNoError); in Stream::Destroy appears to do the job (lightly tested) on the destroy side. Putting a write closure in Stream::Resume appears to be a positive but unrelated to this issue.

- Streams leak unresolved promises (retained by rejection catching hooks installed by

setPromiseHandled).

Roughly the solution could be as simple as:

function destroyStream(stream, error) {

// [...]

const {

// [...]

trailers,

headers

} = stream[kState];

trailers.resolve(null)

headers.resolve(null)

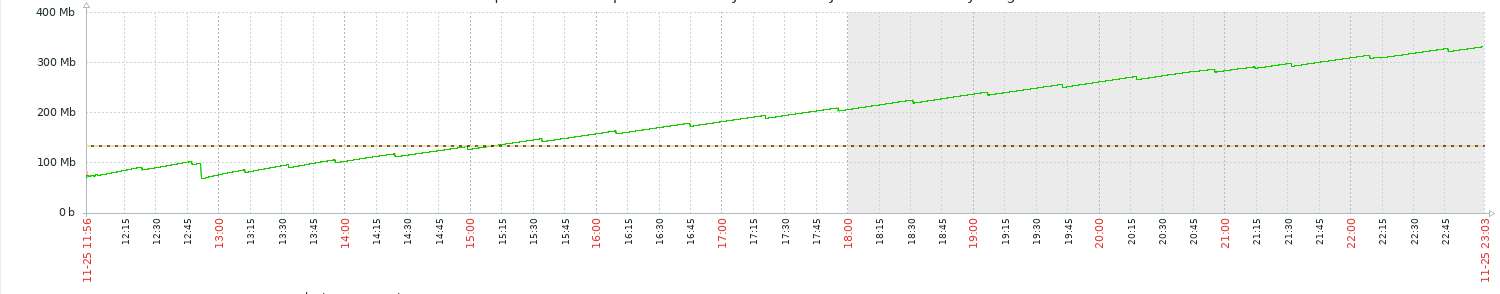

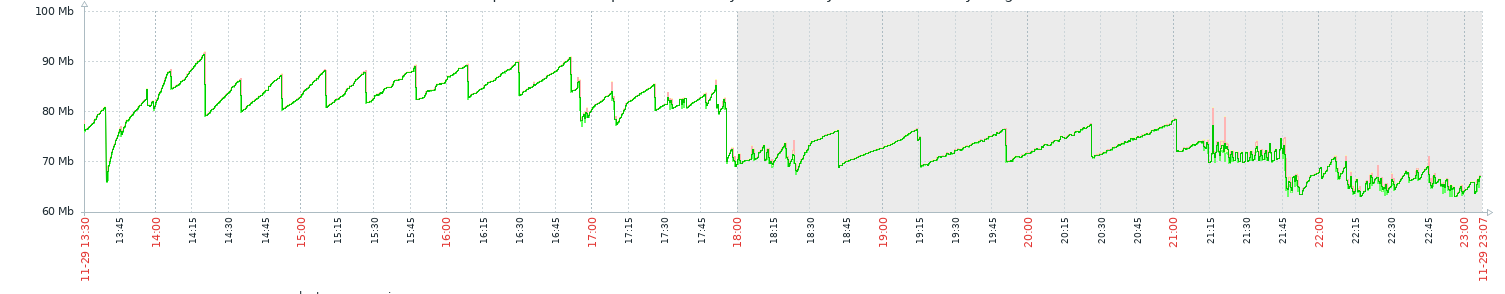

- There still appears to be a memory leak.

Its not v8 memory (stats as taken when process memory was 332mb):

{

total_heap_size: 20226048,

total_heap_size_executable: 2097152,

total_physical_size: 19728840,

total_available_size: 128976416,

used_heap_size: 18404664,

heap_size_limit: 146800640,

malloced_memory: 532552,

peak_malloced_memory: 2177120,

does_zap_garbage: 0,

number_of_native_contexts: 2,

number_of_detached_contexts: 0

}

It's not QUIC memory either aparently (the value of current_ngtcp2_memory_ is 87423). I've checked for thp (disabled) lowered malloc arenas & mmap threshold to test for fragmentation. I found no signs of serious fragmentation.

Valgrind found nothing, so it appears the memory still has live pointers to it.

Looking at all the allocations above 4KB I see the majority on a server doing only a stream open, read 4 bytes, write 4 bytes, stream close loop (i.e echo back what we read) are:

> /usr/lib/x86_64-linux-gnu/libc-2.31.so(mmap64+0x26) [0x11ba46]

> /usr/lib/x86_64-linux-gnu/libc-2.31.so(pthread_attr_setschedparam+0x2bc9) [0x9aaf9]

> /usr/lib/x86_64-linux-gnu/libc-2.31.so(pthread_attr_setschedparam+0x39e3) [0x9b913]

> /usr/lib/x86_64-linux-gnu/libc-2.31.so(__libc_malloc+0x1b9) [0x9d419]

> /usr/local/bin/node(node::NodeArrayBufferAllocator::Allocate(unsigned long)+0x68) [0x78c288]

> /usr/local/bin/node(v8::internal::Heap::AllocateExternalBackingStore(std::function<void* (unsigned long)> const&, unsigned long)+0x91) [0xcc91d1]

> /usr/local/bin/node(v8::internal::BackingStore::Allocate(v8::internal::Isolate*, unsigned long, v8::internal::SharedFlag, v8::internal::InitializedFlag)+0xb3) [0xdf7963]

> /usr/local/bin/node(v8::ArrayBuffer::NewBackingStore(v8::Isolate*, unsigned long)+0x73) [0xae55f3]

> /usr/local/bin/node(node::quic::Endpoint::OnAlloc(unsigned long)+0x59) [0x9d21e9]

> /usr/local/bin/node(node::quic::Endpoint::UDP::OnAlloc(uv_handle_s*, unsigned long, uv_buf_t*)+0x1c) [0x9d238c]

> /usr/local/bin/node(uv__udp_io+0xd6) [0x14384f6]

[pid 12] <... brk resumed>) = 0x55780a6ea000

> /usr/lib/x86_64-linux-gnu/libc-2.31.so(brk+0xb) [0x1173eb]

> /usr/lib/x86_64-linux-gnu/libc-2.31.so(__sbrk+0x91) [0x1174d1]

> /usr/lib/x86_64-linux-gnu/libc-2.31.so(__default_morecore+0xd) [0x9fd0d]

> /usr/lib/x86_64-linux-gnu/libc-2.31.so(pthread_attr_setschedparam+0x27c5) [0x9a6f5]

> /usr/lib/x86_64-linux-gnu/libc-2.31.so(pthread_attr_setschedparam+0x39e3) [0x9b913]

> /usr/lib/x86_64-linux-gnu/libc-2.31.so(__libc_malloc+0x1b9) [0x9d419]

> /usr/local/bin/node(node::NodeArrayBufferAllocator::Allocate(unsigned long)+0x68) [0x78c288]

> /usr/local/bin/node(v8::internal::Heap::AllocateExternalBackingStore(std::function<void* (unsigned long)> const&, unsigned long)+0x91) [0xcc91d1]

> /usr/local/bin/node(v8::internal::BackingStore::Allocate(v8::internal::Isolate*, unsigned long, v8::internal::SharedFlag, v8::internal::InitializedFlag)+0xb3) [0xdf7963]

> /usr/local/bin/node(v8::ArrayBuffer::NewBackingStore(v8::Isolate*, unsigned long)+0x73) [0xae55f3]

> /usr/local/bin/node(node::quic::Endpoint::OnAlloc(unsigned long)+0x59) [0x9d21e9]

> /usr/local/bin/node(node::quic::Endpoint::UDP::OnAlloc(uv_handle_s*, unsigned long, uv_buf_t*)+0x1c) [0x9d238c]

I couldnt find many matching mmunmap's indicating a possible build up. To prove Array Buffers are the cause of the leak we can use the API process.memoryUsage() and what do you know:

{

rss: 67227648,

heapTotal: 14458880,

heapUsed: 13409768,

external: 1278913,

arrayBuffers: 3815167

}

[...]

{

rss: 71024640,

heapTotal: 16031744,

heapUsed: 15261248,

external: 1369363,

arrayBuffers: 23107665

}

So what do you know... It is an Array buffer leak. API provides a value higher than actual in all likelihood due to virtual mem allocated not being written and therefore never actually allocated as RSS (4 byte read/writes).

@jasnell any quick thoughts here as to where this memory should be freed and why it would not be?

P.S Also found this while looking - totally unrelated and not triaged. I tried to get data with ./configure --debug (perhaps there would have been more information) but node ran too slow to successfully get to this point (quic timeout) when under valgrind.

==13833== Conditional jump or move depends on uninitialised value(s)

==13833== at 0x18DA72F: conn_recv_pkt (in /usr/local/bin/node)

==13833== by 0x18E6E64: ngtcp2_conn_read_pkt (in /usr/local/bin/node)

==13833== by 0xB06F3B: node::quic::Session::ReceivePacket(ngtcp2_path*, unsigned char const*, long) (in /usr/local/bin/node)

==13833== by 0xB084BD: node::quic::Session::Receive(unsigned long, std::shared_ptr<v8::BackingStore>, std::shared_ptr<node::SocketAddress> const&, std::shared_ptr<node::SocketAddress> const&) (in /usr/local/bin/node)

==13833== by 0xADBB9E: node::quic::EndpointWrap::ProcessInbound() (in /usr/local/bin/node)

==13833== by 0x152F087: uv__async_io.part.0 (async.c:163)

==13833== by 0x1542D83: uv__io_poll (epoll.c:374)

==13833== by 0x152FA83: uv_run (core.c:389)

==13833== by 0x892245: node::SpinEventLoop(node::Environment*) (in /usr/local/bin/node)

==13833== by 0x9BA2B4: node::NodeMainInstance::Run(int*, node::Environment*) (in /usr/local/bin/node)

==13833== by 0x9BA77B: node::NodeMainInstance::Run(node::EnvSerializeInfo const*) (in /usr/local/bin/node)

==13833== by 0x92BACE: node::Start(int, char**) (in /usr/local/bin/node)

==13833== Uninitialised value was created by a stack allocation

==13833== at 0xB06EB0: node::quic::Session::ReceivePacket(ngtcp2_path*, unsigned char const*, long) (in /usr/local/bin/node)

Not sure which member is being referred to but it would appear to be a bug.

Streams closed before the source can be attached triggers unhandled rejection.

setInterval(async ()=>{

const stream = session.open({ body: 'Hello World' });

console.log(stream)

stream.cancel()

}, 200)

stream.cancel destroys the stream before setStreamSource resulting in:

Error [ERR_INVALID_STATE]: Invalid state: Stream is already destroyed

at new NodeError (node:internal/errors:371:5)

at setStreamSource (node:internal/quic/stream:460:11)

at node:internal/quic/session:661:9

at processTicksAndRejections (node:internal/process/task_queues:96:5) {

code: 'ERR_INVALID_STATE'

}

Easy fix is to put if(stream.destroyed) return before setStreamSource in Session::open which seems sensible enough

No replication instructions yet but I managed to get an infinite loop on the client end when transmitting 10MB chunks at 50MB/s over a NodeStream from the client to a server at 127.0.0.1.

GDB suggested regular calling of ProcessInbound. No node event loops were executed. Mainly a note for me to investigate in the future and I'll extend with further information if I catch it in testing again.

The following script is able to replicate the issue 30%+ of the time (tested on a single core VPS).

const {getTLSKeySomehow, getTLSCertSomehow} = require('./common')

const Stream = require('stream');

const {

Endpoint,

} = require('net/quic');

const b = Buffer.alloc(1024*1024*10) // 10mb

async function main() {

let client = new Endpoint();

const session = client.connect({

address: '127.0.0.1',

port: 1234,

}, { alpn: 'xyz' });

await session.handshake;

const writeStream = new Stream.PassThrough();

let stream = session.open({ body: writeStream });

setTimeout(async ()=>{

writeStream.write(b)

console.log(require('process').memoryUsage())

}, 1000)

setTimeout(async ()=>{

writeStream.write(b)

console.log(require('process').memoryUsage())

}, 2000)

setTimeout(async ()=>{

writeStream.write(b)

console.log(require('process').memoryUsage())

}, 3000)

setTimeout(async ()=>{

console.log('closing')

await client.close()

client.destroy()

client = null

}, 4000)

setTimeout(async ()=>{

console.log(require('process').memoryUsage())

}, 5000)

//await client.close();

}

main()

It wasnt built for this issue (rather to see if the memory leak outlives the endpoint - and it does) however proved itself a demonstration of a client side infinite loop.

- Continued

Here are two javascript files which demonstrate the ArrayBuffer memory leak. I have not been able to isolate it as either receive or transmit side yet it would appear to be on both sides (I'm scratching my head on that one).

const {getTLSKeySomehow, getTLSCertSomehow} = require('./common')

const {

Endpoint,

} = require('net/quic');

setInterval(()=>{

console.log(require('process').memoryUsage())

}, 4000)

async function main() {

const client = new Endpoint();

const session = client.connect({

address: '127.0.0.1',

port: 1234,

}, { alpn: 'xyz' });

await session.handshake;

let stream

setInterval(async ()=>{

if(stream) stream.cancel()

stream = session.open({ body: 'Hello World' });

}, 200)

//await client.close();

}

main()

const {getTLSKeySomehow, getTLSCertSomehow} = require('./common')

const {SocketAddress} = require('net')

const {

Endpoint,

} = require('net/quic');

setInterval(()=>{

console.log(require('process').memoryUsage())

}, 4000)

const server = new Endpoint({address: new SocketAddress({address: '127.0.0.1', port: 1234})});

server.onsession = ({ session }) => {

session.onstream = ({ stream, respondWith }) => {

if(respondWith) respondWith({ body: 'Hello World' });

else{

stream.cancel()

}

};

};

server.listen({

alpn: 'xyz',

secure: {

key: getTLSKeySomehow(),

cert: getTLSCertSomehow(),

},

});

You can also see it without open/closing streams.

If you can spare the time @jasnell I'd really apreciate your insights in regards to direction.

- Continued

Confirmed that leaked packets are the BackingStore from Endpoint::UDP::OnReceive. I'm doing some refactoring of the API to (hopefully) resolve this. Leak size is 64k (the suggested size for udp reads). Actual RSS leak is usually one page per packet.

Nice. Ok, I've made note of that and it's a great find. I'll track that down when I dig back in about mid December.

- Confirmed appears fixed as part of patchset 3

They key fix is not disposing of uv_buf_t's without first converting to a BackingStore and freeing (via stl smart pointers).

Results:

If a client quic session is disconnected before the server sends the hello (e.g connect to something that doesnt have a quic server running on it):

../src/quic/session.cc:2569:node::quic::quic_version node::quic::Session::version() const: Assertion `!is_destroyed()' failed.

Backtrace:

#0 0xb6bf8746 in ?? () from /lib/arm-linux-gnueabihf/libc.so.6

#1 0xb6c060ae in raise () from /lib/arm-linux-gnueabihf/libc.so.6

#2 0xb6bf81f2 in abort () from /lib/arm-linux-gnueabihf/libc.so.6

#3 0x00bf5158 in node::Abort() ()

#4 0x00bf51a6 in node::Assert(node::AssertionInfo const&) ()

#5 0x00cebf84 in node::quic::Session::SendConnectionClose() ()

#6 0x00cef3e8 in node::quic::Session::ConnectionCloseScope::~ConnectionCloseScope() ()

#7 0x00cec056 in ?? ()

Fix: https://github.com/HalleyAssist/node/commit/f505f7a325746c58d551906ee7f6c19b93ad16b4

Cant supply a securecontext on client hello

Unhandled Rejection at: Promise {

<rejected> TypeError [ERR_INVALID_ARG_TYPE]: The "context" argument must be an crypto.SecureContext. Received an instance of SecureContext

at new NodeError (node:internal/errors:371:5)

at Object.callback (node:internal/quic/binding:74:13)

at node:internal/quic/session:199:38

at /usr/src/app/lib/QuicServer.js:47:21 {

code: 'ERR_INVALID_ARG_TYPE'

}

Corrected type validation is for SecureContext (https://github.com/HalleyAssist/node/commit/126da97de681e428b4f598b800b97df7f3039712)

I was unable to get SNI hostname to make it through to server side. hostname was undefined in all cases in clienthello

Will this QUIC implementation support datagram-style API as specified here: https://datatracker.ietf.org/doc/html/draft-ietf-quic-datagram?

I'm interesting seeing if QUIC can be used as an underlying P2P networking solution, and therefore may require UDP hole-punching. When sending hole-punching packets, these are usually just single datagrams as a stream connection hasn't been established yet. Seeing that QUIC is based on UDP, it seems that it should be possible to use QUIC in stream-style or datagram-style, but I'm not entirely familiar with the protocol.

As a side-note https://web.dev/webtransport/ is built on HTTP/3 and they indicate that it will support both datagram-style API and stream-style API.

==17== Invalid read of size 2

==17== at 0x4D4AB30: memmove (in /usr/lib/x86_64-linux-gnu/valgrind/vgpreload_memcheck-amd64-linux.so)

==17== by 0x18FD944: ngtcp2_cpymem (in /usr/local/bin/node)

==17== by 0x18F1A1D: ngtcp2_pkt_encode_stream_frame (in /usr/local/bin/node)

==17== by 0x18F3DB0: ngtcp2_ppe_encode_frame (in /usr/local/bin/node)

==17== by 0x18E268B: conn_write_pkt (in /usr/local/bin/node)

==17== by 0x18E4A64: ngtcp2_conn_write_vmsg (in /usr/local/bin/node)

==17== by 0x18E5098: ngtcp2_conn_writev_stream (in /usr/local/bin/node)

==17== by 0xB07681: node::quic::Session::Application::SendPendingData() (in /usr/local/bin/node)

==17== by 0xB07D74: node::quic::Session::SendPendingData() (in /usr/local/bin/node)

==17== by 0x152B6A0: uv__run_timers (timer.c:178)

==17== by 0x152F751: uv_run (core.c:380)

==17== by 0x892245: node::SpinEventLoop(node::Environment*) (in /usr/local/bin/node)

==17== Address 0x141834b8 is 6,248 bytes inside a block of size 8,192 free'd

==17== at 0x4D44A3F: free (in /usr/lib/x86_64-linux-gnu/valgrind/vgpreload_memcheck-amd64-linux.so)

==17== by 0xF00CBC: v8::internal::BackingStore::~BackingStore() (in /usr/local/bin/node)

==17== by 0xBD592E: std::_Sp_counted_deleter<v8::internal::BackingStore*, std::default_delete<v8::internal::BackingStore>, std::allocator<void>, (__gnu_cxx::_Lock_policy)2>::_M_dispose() (in /usr/local/bin/node)

==17== by 0xD698AD: v8::internal::ArrayBufferSweeper::SweepingJob::SweepListFull(v8::internal::ArrayBufferList*) (in /usr/local/bin/node)

==17== by 0xD69918: v8::internal::ArrayBufferSweeper::SweepingJob::SweepFull() (in /usr/local/bin/node)

==17== by 0xD69D6A: std::_Function_handler<void (), v8::internal::ArrayBufferSweeper::RequestSweep(v8::internal::ArrayBufferSweeper::SweepingScope)::{lambda()#1}>::_M_invoke(std::_Any_data const&) (in /usr/local/bin/node)

==17== by 0xCA9DAF: non-virtual thunk to v8::internal::CancelableTask::Run() (in /usr/local/bin/node)

==17== by 0x9EC048: node::(anonymous namespace)::PlatformWorkerThread(void*) (in /usr/local/bin/node)

==17== by 0x55E0608: start_thread (pthread_create.c:477)

==17== by 0x571C292: clone (clone.S:95)

==17== Block was alloc'd at

==17== at 0x4D437F3: malloc (in /usr/lib/x86_64-linux-gnu/valgrind/vgpreload_memcheck-amd64-linux.so)

==17== by 0x894287: node::NodeArrayBufferAllocator::Allocate(unsigned long) (in /usr/local/bin/node)

==17== by 0xDD10C0: v8::internal::Heap::AllocateExternalBackingStore(std::function<void* (unsigned long)> const&, unsigned long) (in /usr/local/bin/node)

==17== by 0xEFF852: v8::internal::BackingStore::Allocate(v8::internal::Isolate*, unsigned long, v8::internal::SharedFlag, v8::internal::InitializedFlag) (in /usr/local/bin/node)

==17== by 0xC4A364: v8::internal::(anonymous namespace)::ConstructBuffer(v8::internal::Isolate*, v8::internal::Handle<v8::internal::JSFunction>, v8::internal::Handle<v8::internal::JSReceiver>, v8::internal::Handle<v8::internal::Object>, v8::internal::Handle<v8::internal::Object>, v8::internal::InitializedFlag) (in /usr/local/bin/node)

==17== by 0xC4A64C: v8::internal::Builtin_ArrayBufferConstructor(int, unsigned long*, v8::internal::Isolate*) (in /usr/local/bin/node)

==17== by 0x15C2CF8: Builtins_CEntry_Return1_DontSaveFPRegs_ArgvOnStack_BuiltinExit (in /usr/local/bin/node)

==17== by 0x154F377: Builtins_JSBuiltinsConstructStub (in /usr/local/bin/node)

==17== by 0x1632A72: Builtins_CreateTypedArray (in /usr/local/bin/node)

==17== by 0x15B7180: Builtins_TypedArrayConstructor (in /usr/local/bin/node)

==17== by 0x154F377: Builtins_JSBuiltinsConstructStub (in /usr/local/bin/node)

==17== by 0x85E50EA: ???

- Patch for uninitialized read.

pimay not be used but does contain flags that need to be 0.

https://github.com/HalleyAssist/node/commit/ba98719f350831c73f3301ed62045b340d9ca6e2

Another trace for 15

==15== Invalid read of size 1

==15== at 0x4D4AB6E: memmove (in /usr/lib/x86_64-linux-gnu/valgrind/vgpreload_memcheck-amd64-linux.so)

==15== by 0x18FD944: ngtcp2_cpymem (in /usr/local/bin/node)

==15== by 0x18F1A1D: ngtcp2_pkt_encode_stream_frame (in /usr/local/bin/node)

==15== by 0x18F3DB0: ngtcp2_ppe_encode_frame (in /usr/local/bin/node)

==15== by 0x18E268B: conn_write_pkt (in /usr/local/bin/node)

==15== by 0x18E4A64: ngtcp2_conn_write_vmsg (in /usr/local/bin/node)

==15== by 0x18E5098: ngtcp2_conn_writev_stream (in /usr/local/bin/node)

==15== by 0xB07681: node::quic::Session::Application::SendPendingData() (in /usr/local/bin/node)

==15== by 0xB07D74: node::quic::Session::SendPendingData() (in /usr/local/bin/node)

==15== by 0x152B6A0: uv__run_timers (timer.c:178)

==15== by 0x152F751: uv_run (core.c:380)

==15== by 0x892245: node::SpinEventLoop(node::Environment*) (in /usr/local/bin/node)

==15== Address 0x594c022 is 2,242 bytes inside a block of size 8,192 free'd

==15== at 0x4D44A3F: free (in /usr/lib/x86_64-linux-gnu/valgrind/vgpreload_memcheck-amd64-linux.so)

==15== by 0xF00CBC: v8::internal::BackingStore::~BackingStore() (in /usr/local/bin/node)

==15== by 0xBD592E: std::_Sp_counted_deleter<v8::internal::BackingStore*, std::default_delete<v8::internal::BackingStore>, std::allocator<void>, (__gnu_cxx::_Lock_policy)2>::_M_dispose() (in /usr/local/bin/node)

==15== by 0xAD2F29: std::deque<std::unique_ptr<node::quic::Buffer::Chunk, std::default_delete<node::quic::Buffer::Chunk> >, std::allocator<std::unique_ptr<node::quic::Buffer::Chunk, std::default_delete<node::quic::Buffer::Chunk> > > >::~deque() (in /usr/local/bin/node)

==15== by 0xAD355A: non-virtual thunk to node::quic::ArrayBufferViewSource::~ArrayBufferViewSource() (in /usr/local/bin/node)

==15== by 0xD66BF2: v8::internal::GlobalHandles::InvokeFirstPassWeakCallbacks() (in /usr/local/bin/node)

==15== by 0xDCD650: v8::internal::Heap::PerformGarbageCollection(v8::internal::GarbageCollector, v8::GCCallbackFlags) (in /usr/local/bin/node)

==15== by 0xDCE0EF: v8::internal::Heap::CollectGarbage(v8::internal::AllocationSpace, v8::internal::GarbageCollectionReason, v8::GCCallbackFlags) (in /usr/local/bin/node)

==15== by 0xE3D5F3: v8::internal::ScavengeJob::Task::RunInternal() (in /usr/local/bin/node)

==15== by 0xCA9DAF: non-virtual thunk to v8::internal::CancelableTask::Run() (in /usr/local/bin/node)

==15== by 0x9ECE1C: node::PerIsolatePlatformData::RunForegroundTask(std::unique_ptr<v8::Task, std::default_delete<v8::Task> >) (in /usr/local/bin/node)

==15== by 0x9EECFD: node::PerIsolatePlatformData::FlushForegroundTasksInternal() (in /usr/local/bin/node)

==15== Block was alloc'd at

==15== at 0x4D437F3: malloc (in /usr/lib/x86_64-linux-gnu/valgrind/vgpreload_memcheck-amd64-linux.so)

==15== by 0x894287: node::NodeArrayBufferAllocator::Allocate(unsigned long) (in /usr/local/bin/node)

==15== by 0xDD10C0: v8::internal::Heap::AllocateExternalBackingStore(std::function<void* (unsigned long)> const&, unsigned long) (in /usr/local/bin/node)

==15== by 0xEFF852: v8::internal::BackingStore::Allocate(v8::internal::Isolate*, unsigned long, v8::internal::SharedFlag, v8::internal::InitializedFlag) (in /usr/local/bin/node)

==15== by 0xC4A364: v8::internal::(anonymous namespace)::ConstructBuffer(v8::internal::Isolate*, v8::internal::Handle<v8::internal::JSFunction>, v8::internal::Handle<v8::internal::JSReceiver>, v8::internal::Handle<v8::internal::Object>, v8::internal::Handle<v8::internal::Object>, v8::internal::InitializedFlag) (in /usr/local/bin/node)

==15== by 0xC4A64C: v8::internal::Builtin_ArrayBufferConstructor(int, unsigned long*, v8::internal::Isolate*) (in /usr/local/bin/node)

==15== by 0x15C2CF8: Builtins_CEntry_Return1_DontSaveFPRegs_ArgvOnStack_BuiltinExit (in /usr/local/bin/node)

==15== by 0x154F377: Builtins_JSBuiltinsConstructStub (in /usr/local/bin/node)

==15== by 0x1632A72: Builtins_CreateTypedArray (in /usr/local/bin/node)

==15== by 0x87E2CF7: ???

==15== by 0x154F1AD: Builtins_JSConstructStubGeneric (in /usr/local/bin/node)

==15== by 0x869FAEA: ???

==15==

Looking at the backtrace I'm hypothesizing that the ArrayBufferViewSource is being removed before the stream is Destroyed (or, more specifically it's list of outbound buffers) is cleared. Most likely this occurs if a stream is closed by the other end while the server is outputting data.

Update: I've come to suspect that perhaps it's the order of operations in Stream Destroy, Process Inbound could in theory re-schedule for outbound. If unschedule was later this wouldnt be an issue.

I've got a build in testing for this issue currently (and so far so good).

15 looks to be fixed by the removal of the goto in commit https://github.com/HalleyAssist/node/commit/6ef5d59108b49ac2e850127cfc2a1aacf444ad21

Looks like it was destructing too early. There was also a data corruption error in SendPendingData as well.

19 continued

https://github.com/HalleyAssist/node/commit/60d9bbcc7174ac2a3bc1ba8de442afe7aa938e7a

Prevents the Packet retained children from being freed by the GC during the time between SendPendingData and the UDP packet transmission

An update on this PR: I will definitely be returning back to this PR soon. As discussed on the recent http mini-summit call, however, I will be removing the JavaScript APIs from this PR and moving those into a separate PR. This changeset will be limited to just the internal/native code bits that are expose up via the internalBinding. The JavaScript APIs will be moved into a separate PR.

19 assertion fix

https://github.com/HalleyAssist/node/commit/4d25c39f041d8771ce8ad7a0c82ee23b20b76022

I suspected this was possible but only now triggered the assertion. Fixed.

@jasnell may I make a suggestion?

That before the code base becomes too different you take the time to review the fixes we implemented. I know theres a bit there, however they are required to get the previous code base to run reasonably well (we did both demo's with it and now have a prototype fleet running it in development).

Hey @splitice ! Yeah I actually have all of your fixes open in a set of tabs that I'm going to start going through later this week :-)

@jasnell then I appologise in advance for all my sins :)

Heh, don't apologize! Based on all the work you've done I owe you the beverage of your choice if we ever end up in the same location face to face.

@jasnell I havent merged your squashed build changes however 2 more for the list.

-

Session close() on the client side takes a long time to resolve, appears to be until the server starts it's own closure (due to timeout)

-

Closing the endpoint with an active session results in:

node: ../deps/uv/src/unix/udp.c:103: uv__udp_finish_close: Assertion `!uv__io_active(&handle->io_watcher, POLLIN | POLLOUT)' failed.

Aborted (core dumped)

Thread 1 "node" received signal SIGABRT, Aborted.

__GI_raise (sig=sig@entry=6) at ../sysdeps/unix/sysv/linux/raise.c:50

50 ../sysdeps/unix/sysv/linux/raise.c: No such file or directory.

(gdb) bt

#0 __GI_raise (sig=sig@entry=6) at ../sysdeps/unix/sysv/linux/raise.c:50

#1 0x00007ffff75d5535 in __GI_abort () at abort.c:79

#2 0x00007ffff75d540f in __assert_fail_base (fmt=0x7ffff7737ee0 "%s%s%s:%u: %s%sAssertion `%s' failed.\n%n",

assertion=0x55555760ed98 "!uv__io_active(&handle->io_watcher, POLLIN | POLLOUT)", file=0x55555760efd0 "../deps/uv/src/unix/udp.c", line=103, function=<optimized out>) at assert.c:92

#3 0x00007ffff75e3102 in __GI___assert_fail (assertion=assertion@entry=0x55555760ed98 "!uv__io_active(&handle->io_watcher, POLLIN | POLLOUT)",

file=file@entry=0x55555760efd0 "../deps/uv/src/unix/udp.c", line=line@entry=103, function=function@entry=0x55555760f240 <__PRETTY_FUNCTION__.9212> "uv__udp_finish_close")

at assert.c:101

#4 0x0000555556871071 in uv__udp_finish_close (handle=handle@entry=0x5555598bcf98) at ../deps/uv/src/unix/udp.c:103

#5 0x0000555556860f18 in uv__finish_close (handle=0x5555598bcf98) at ../deps/uv/src/unix/core.c:295

#6 uv__run_closing_handles (loop=0x5555597505c0 <default_loop_struct>) at ../deps/uv/src/unix/core.c:321

#7 uv_run (loop=0x5555597505c0 <default_loop_struct>, mode=UV_RUN_ONCE) at ../deps/uv/src/unix/core.c:399

#8 0x0000555555d13ae0 in node::Environment::CleanupHandles() () at ../deps/uv/src/unix/core.c:863

#9 0x0000555555d185ab in node::Environment::RunCleanup() () at ../deps/uv/src/unix/core.c:863

#10 0x0000555555ccd240 in node::FreeEnvironment(node::Environment*) () at ../deps/uv/src/unix/core.c:863

#11 0x0000555555dd44d3 in node::NodeMainInstance::Run(node::EnvSerializeInfo const*) () at ../deps/uv/src/unix/core.c:863

#12 0x0000555555d549ee in node::Start(int, char**) () at ../deps/uv/src/unix/core.c:863

#13 0x00007ffff75d709b in __libc_start_main (main=0x555555cc3b60 <main>, argc=2, argv=0x7fffffffbf38, init=<optimized out>, fini=<optimized out>, rtld_fini=<optimized out>,

stack_end=0x7fffffffbf28) at ../csu/libc-start.c:308

#14 0x0000555555cc612a in _start () at ../deps/uv/src/unix/core.c:863

The following test script is supplied:

const { Endpoint } = require('node:net/quic'),

crypto = require('crypto')

class DumpCertificate{

constructor(ip, port){

this._ip = ip

this._port = port

}

async retrieveCert() {

let peerCertificate = null

const client = new Endpoint()

try {

let session = await this._clientConnect(client)

peerCertificate = session.peerCertificate

// for 20 await session.close() here

} finally {

await this._closeClient(client)

}

return peerCertificate

}

async _clientConnect(client){

const session = client.connect({

address: this._ip,

port: this._port

}, { alpn: 'stuff', hostname: 'certificate' })

await session.handshake;

return session

}

async _closeClient(client){

try {

await client.close()

} catch (err) {

debug(`Failed to close connection to ${this._ip}: ${err}`)

}

}

}

async function main(){

const ip = process.argv[1]

if (!ip) {

console.log('No ip')

process.exit(1)

}

const port = parseInt(process.argv[2])

if (!port) {

console.log('No port')

process.exit(2)

}

const dc = new DumpCertificate(ip, port)

const start = Date.now()/1000

let peerCertificate

try {

peerCertificate = await dc.retrieveCert()

} catch(ex){

const elapsed = Date.now()/1000 - start

console.log(JSON.stringify({error: ex.message, elapsed}))

return

}

let result, elapsed = Date.now()/1000 - start

if(peerCertificate){

const validTo = new Date(peerCertificate.validTo).getTime()/1000

result = {

validTo,