Thomas Capelle

This is weird, because `ndarray` is correctly imported in `imports.py`.

I am pasting this here. I have been running a bunch of sweeps comparing this `timm` split vs the `default_split` method here: https://wandb.ai/capecape/fine_tune_timm/sweeps . ## (3 epoch finetune with 1/0 epoch...

Would you mind printing `learn.path` and `learn.model_dir`, please?

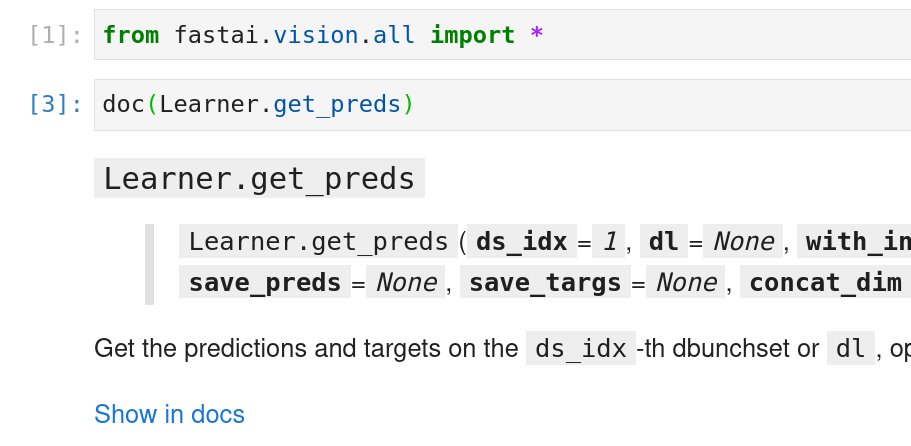

fastai's docstrings are minimal one-liners. The documentation lives on the website or in the notebooks where the function is defined. This is intended: https://docs.fast.ai/learner.html#Learner.validate

I agree that there is no easy way to go from the code to the docs. I would also like better docstrings, but I don't know how it could be done...

Got it, thanks! It is nice for those of us using Jupyter, but for the regular folk using VS Code it may be more complicated.

We could set a big integer for non-indexed datasets. `n` is mostly used to know where we are in the training loop, to do scheduling, etc.

If you pass `n` to the constructor, it works.
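To make the role of `n` concrete, here is a rough sketch in plain Python. It is not fastai's code: `SizedStream` and `pct_train` are hypothetical names made up for illustration. It only assumes that the loader derives its length from `n` and the batch size, and that a scheduler needs that length to know how far along training is.

```python
import math
from typing import Iterable, Iterator, Optional


class SizedStream:
    """Hypothetical loader: batches any iterable, with a length derived from `n`."""

    def __init__(self, items: Iterable, bs: int, n: Optional[int] = None):
        self.items, self.bs, self.n = items, bs, n

    def __len__(self) -> int:
        # Without `n` there is no way to know how many batches an epoch has.
        if self.n is None:
            raise TypeError("length unknown: pass `n` to the constructor")
        return math.ceil(self.n / self.bs)

    def __iter__(self) -> Iterator[list]:
        batch = []
        for item in self.items:
            batch.append(item)
            if len(batch) == self.bs:
                yield batch
                batch = []
        if batch:  # last, possibly smaller, batch
            yield batch


def pct_train(epoch: int, batch_idx: int, n_epoch: int, n_iter: int) -> float:
    """Fraction of training completed, the kind of value a scheduler consumes."""
    return (epoch * n_iter + batch_idx) / (n_epoch * n_iter)


stream = (i for i in range(10))        # a generator has no len()
dl = SizedStream(stream, bs=4, n=10)   # so `n` is supplied by hand
n_iter = len(dl)
```

If `n` is only a big placeholder integer, the length is still defined, but the progress fraction (and any schedule driven by it) will be off by however much `n` overshoots the real number of items.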

Sorry, I did not get that at first. I get it now: this extra argument is not present on the PyTorch `DataLoader` class.

I would think of it this way: `get_preds` validates on the `validation_dataloader` by running `one_epoch`, so it kind of makes sense to call `after_epoch`.