szopal

I get an SSL handshake error because SCDF does not send the client certificate to the ADFS server while trying to get an OAuth token.
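For context, on the JVM a client certificate is only presented during the TLS handshake (when the server asks for one) if the `SSLContext` used by the HTTP client has a `KeyManager` backed by a keystore holding the client key and certificate. The sketch below builds such a context with plain JSSE. The keystore path and password passed to `main` are hypothetical, and whether SCDF's OAuth token client actually uses a context configured this way (or the JVM-wide one configured through the standard `javax.net.ssl.keyStore` / `javax.net.ssl.keyStorePassword` system properties) is an assumption to check.

```java
import java.io.FileInputStream;
import java.io.InputStream;
import java.security.KeyStore;
import javax.net.ssl.KeyManagerFactory;
import javax.net.ssl.SSLContext;
import javax.net.ssl.TrustManagerFactory;

public class ClientCertSslContext {

    /**
     * Builds an SSLContext whose KeyManager can present the client key and certificate
     * stored in a PKCS12 keystore when the server requests one during the TLS handshake.
     * A null stream produces an empty keystore (useful only for testing).
     */
    public static SSLContext build(InputStream keyStoreStream, char[] password) throws Exception {
        KeyStore keyStore = KeyStore.getInstance("PKCS12");
        keyStore.load(keyStoreStream, password);

        KeyManagerFactory kmf = KeyManagerFactory.getInstance(KeyManagerFactory.getDefaultAlgorithm());
        kmf.init(keyStore, password);

        // Trust side: the JVM default trust store validates the ADFS server certificate.
        TrustManagerFactory tmf = TrustManagerFactory.getInstance(TrustManagerFactory.getDefaultAlgorithm());
        tmf.init((KeyStore) null);

        SSLContext context = SSLContext.getInstance("TLS");
        context.init(kmf.getKeyManagers(), tmf.getTrustManagers(), null);
        return context;
    }

    public static void main(String[] args) throws Exception {
        if (args.length < 2) {
            System.out.println("usage: ClientCertSslContext <client.p12> <password>");
            return;
        }
        // Hypothetical arguments: path to the client keystore and its password.
        try (InputStream in = new FileInputStream(args[0])) {
            SSLContext context = build(in, args[1].toCharArray());
            System.out.println("Built " + context.getProtocol() + " context with client key material");
        }
    }
}
```

The resulting context can be handed to whichever HTTP client performs the token request; building it explicitly makes it easy to confirm that the keystore really contains the client key before debugging the handshake itself.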

I run SCDF in Kubernetes. But I don't want OAuth between my credentials provider and SCDF; I would like to have a direct connection between SCDF and my credentials provider...

I have the same problem but with ADFS: after logging out of SCDF, it takes me to the Log In screen, but after clicking on it, it re-authenticates me without asking...

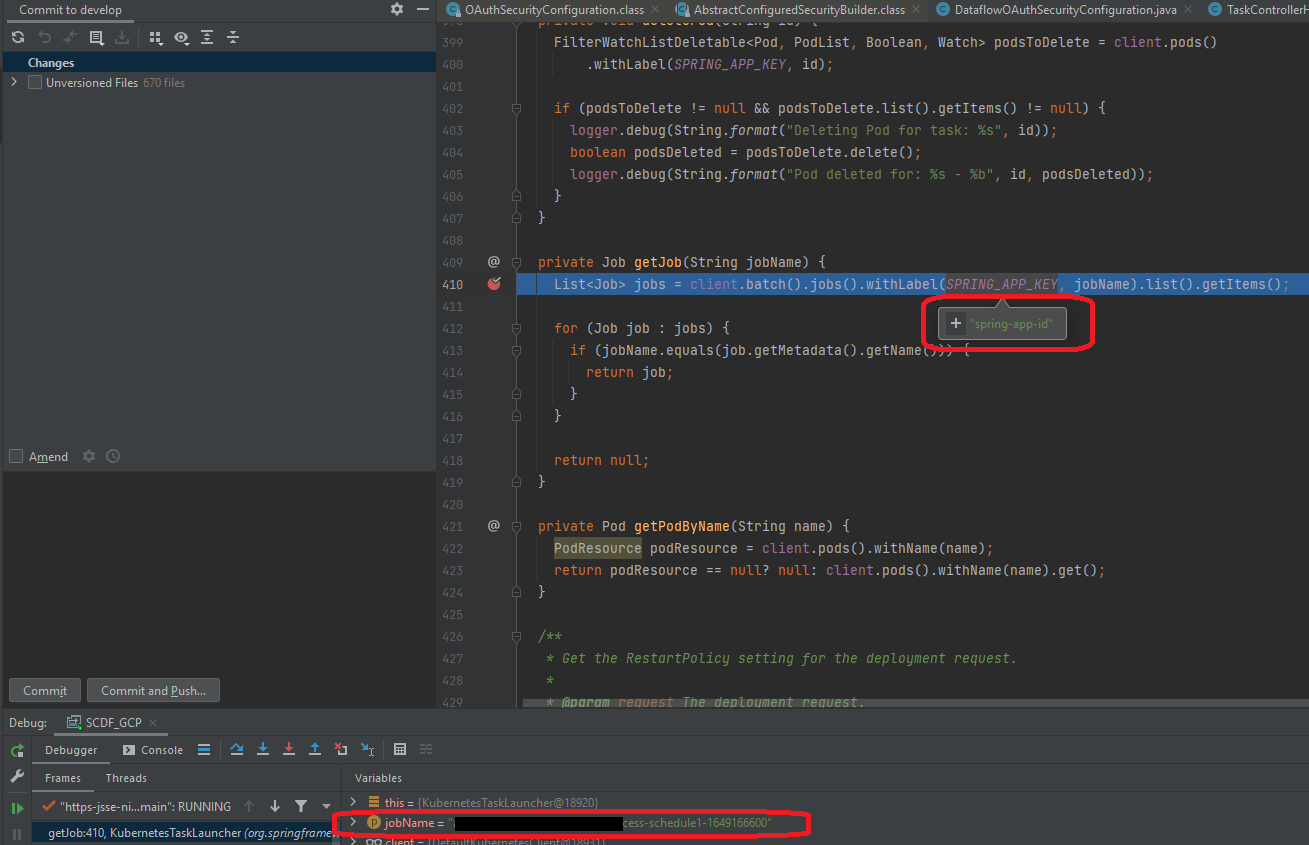

I can't agree, because a Job launched by a CronJob has the property `job-name=my-task-cronjobs-schedule1-1649166600`, and I think that SCDF could retrieve logs via this property instead of _spring-app-id_. What do you think? ...
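A minimal sketch of the proposed fallback, assuming SCDF knows either the `spring-app-id` value or the Kubernetes Job name for an execution. Kubernetes labels the pods of a Job with `job-name`, which is what the `kubectl --selector=job-name=...` command in the next comment relies on. `LogSelector` and `selectorFor` are hypothetical names for illustration, not SCDF API.

```java
import java.util.Optional;

public class LogSelector {

    /**
     * Hypothetical helper: builds a Kubernetes label selector for finding a task's pods.
     * Prefers the spring-app-id label and falls back to the job-name label that Kubernetes
     * puts on pods created by a Job (including Jobs started by a CronJob).
     */
    public static Optional<String> selectorFor(String springAppId, String jobName) {
        if (springAppId != null && !springAppId.isEmpty()) {
            return Optional.of("spring-app-id=" + springAppId);
        }
        if (jobName != null && !jobName.isEmpty()) {
            return Optional.of("job-name=" + jobName);
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        // A CronJob-launched execution: no spring-app-id known, only the Job name.
        System.out.println(selectorFor(null, "my-task-cronjobs-schedule1-1649166600").orElse("<none>"));
        // prints job-name=my-task-cronjobs-schedule1-1649166600
    }
}
```

Keeping `spring-app-id` as the first choice means executions launched directly by SCDF behave as before; only CronJob-launched ones fall through to `job-name`.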

Yes, of course:

```
szopal@aps000xxxx50-mb:/mnt/d$ kubectl logs $(kubectl get pods --selector=job-name=xxxxxxx-process-schedule2-manual-sd2qh --output=jsonpath='{.items[*].metadata.name}')
{"timestampSeconds":1649273223,"timestampNanos":246000000,"severity":"INFO","thread":"main","logger":"xx.xx.xxxx.batch.xx.XXXBootApplication","message":"Starting XXXBootApplication v1.8.7.228-SNAPSHOT using Java 11.0.11 on xxxxxxx-process-schedule2-manual-sd2qh-zp5ms with PID 39 (/xxxxxx/xxxxx-boot.jar started by root in /)","context":"default"}
{"timestampSeconds":1649273223,"timestampNanos":268000000,"severity":"DEBUG","thread":"main","logger":"xx.xxx.xxx.batch.xxx.XXXBootApplication","message":"Running...
```

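It seems it would be enough to just add this to the class `KubernetesDeployerProperty`:

```java
// Proposed new property: number of retries before the Kubernetes Job is marked as failed.
private int backoffLimit = 0;

public int getBackoffLimit() {
    return backoffLimit;
}

public void setBackoffLimit(int backoffLimit) {
    this.backoffLimit = backoffLimit;
}
```

...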
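Kubernetes itself uses a `backoffLimit` of 6 when the field is omitted from the Job spec, so a deployer default of 0 means a failed task pod is not retried at all. A minimal sketch of how a per-launch value could override that deployer-wide default; the property key `spring.cloud.deployer.kubernetes.backoffLimit` and the `BackoffLimitResolver` class are hypothetical, used only for illustration.

```java
import java.util.Map;

public class BackoffLimitResolver {

    /** Hypothetical deployment-property key, for illustration only. */
    static final String PROPERTY = "spring.cloud.deployer.kubernetes.backoffLimit";

    /**
     * Returns the backoffLimit to put on the Job spec for one task launch:
     * a per-launch property wins, otherwise the deployer-wide default is used.
     * Negative values are rejected because Kubernetes does not accept them.
     */
    static int resolve(Map<String, String> launchProperties, int deployerDefault) {
        String raw = launchProperties.get(PROPERTY);
        int value = (raw == null || raw.isBlank()) ? deployerDefault : Integer.parseInt(raw.trim());
        if (value < 0) {
            throw new IllegalArgumentException("backoffLimit must be >= 0, got " + value);
        }
        return value;
    }

    public static void main(String[] args) {
        int deployerDefault = 0; // the default proposed in the comment: no retries
        System.out.println(resolve(Map.of(), deployerDefault));             // 0
        System.out.println(resolve(Map.of(PROPERTY, "2"), deployerDefault)); // 2
    }
}
```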

Hi, I would like to add my problem to this issue: I also need lifecycle properties to connect to Cloud Storage on GCP via Cloud Fuse:

```
lifecycle:
  postStart:...
```

We have the same problem: we have a private cluster without internet access, and when I installed Grafana I got the same error.

The DSL is simple: `lab1: performance-diagnostic-process && lab2: performance-diagnostic-process`

Oh, sorry, I pasted a different DSL task, but it's the same flow: `diag1: performance-diagnostic-process && diag2: performance-diagnostic-process`
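Both DSLs describe a sequential flow: `&&` runs the second task only after the first one completes successfully, and because the same task definition `performance-diagnostic-process` appears twice, the labels (`diag1`, `diag2`) are what tell the two steps apart. The toy parser below is a hypothetical sketch, not SCDF's own DSL parser; it only illustrates that structure.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

public class SequentialDsl {

    /** One step of a sequential flow: its label and the task definition it launches. */
    static final class Step {
        final String label;
        final String task;

        Step(String label, String task) {
            this.label = label;
            this.task = task;
        }

        @Override
        public String toString() {
            return label + " -> " + task;
        }
    }

    /**
     * Toy parser for flows of the form "label: task && label: task".
     * Handles only '&&' and labels; it is not the Spring Cloud Data Flow DSL parser.
     */
    static List<Step> parse(String dsl) {
        List<Step> steps = new ArrayList<>();
        Set<String> seenLabels = new HashSet<>();
        for (String part : dsl.split("&&")) {
            String[] labelAndTask = part.split(":", 2);
            if (labelAndTask.length != 2) {
                throw new IllegalArgumentException("expected 'label: task' but got '" + part.trim() + "'");
            }
            String label = labelAndTask[0].trim();
            String task = labelAndTask[1].trim();
            // Labels must be unique so that two runs of the same task can be told apart.
            if (!seenLabels.add(label)) {
                throw new IllegalArgumentException("duplicate label '" + label + "'");
            }
            steps.add(new Step(label, task));
        }
        return steps;
    }

    public static void main(String[] args) {
        String dsl = "diag1: performance-diagnostic-process && diag2: performance-diagnostic-process";
        // In the DSL, '&&' means each step starts only after the previous one succeeds;
        // here the parsed steps are simply printed in order.
        for (Step step : parse(dsl)) {
            System.out.println(step);
        }
    }
}
```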