spring-cloud-dataflow


Can't retrieve logs from Tasks launched by CronJobs

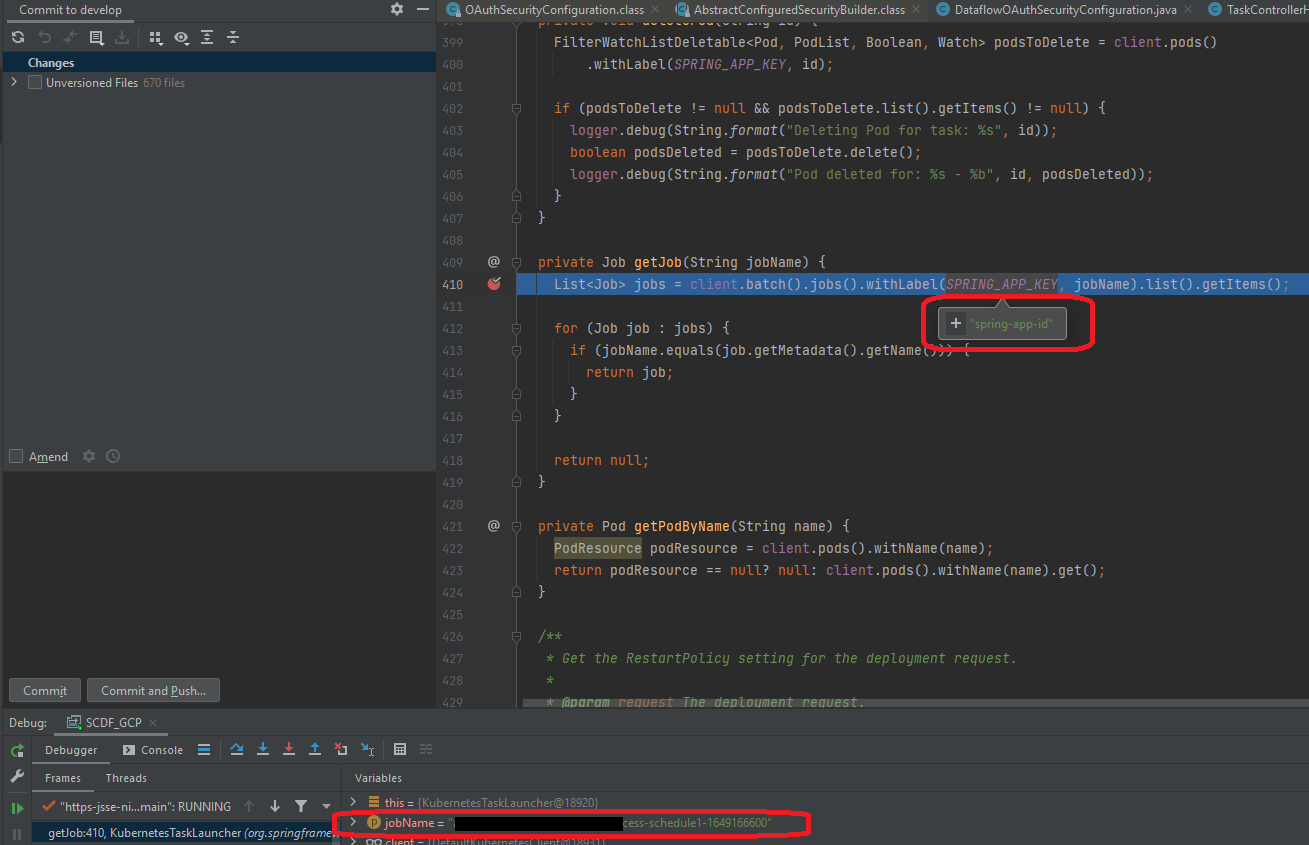

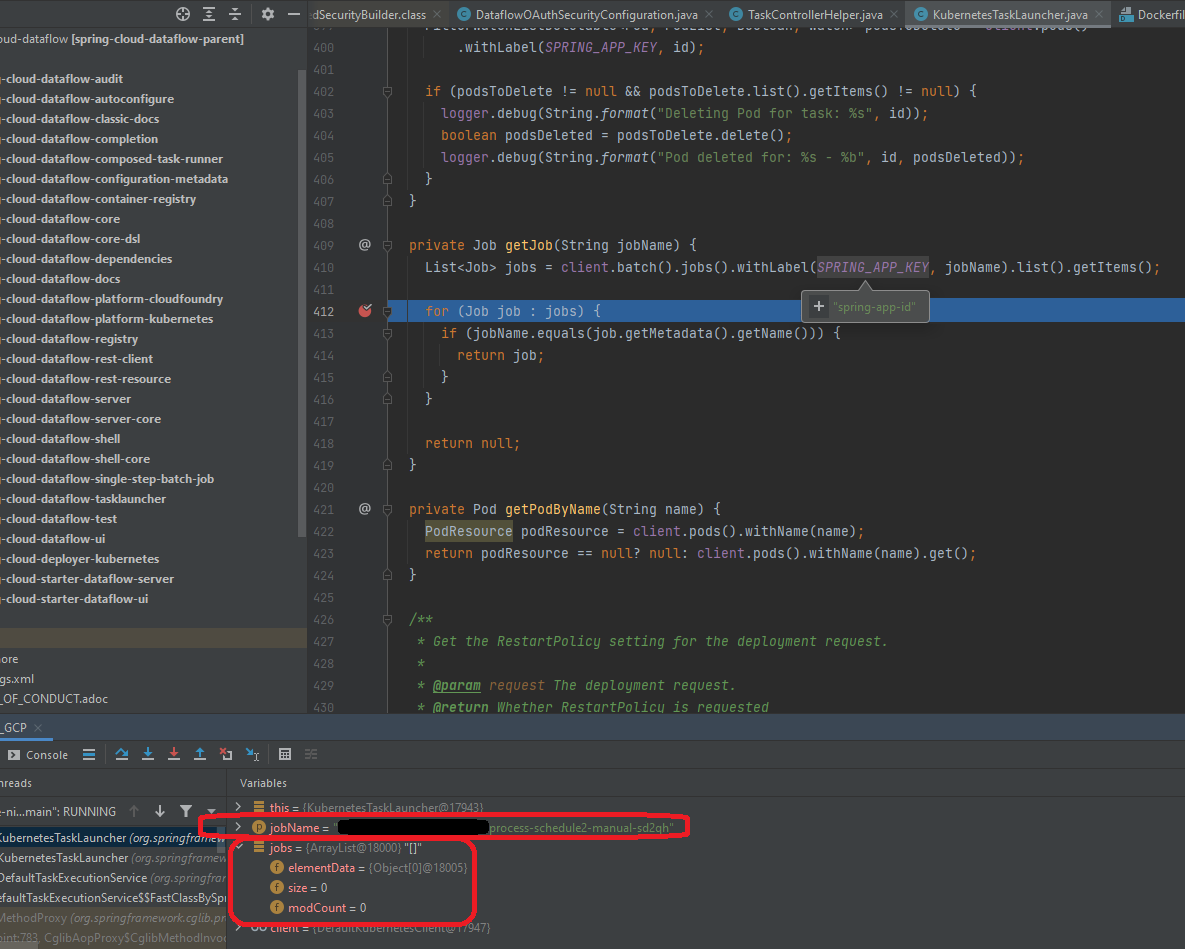

I noticed that when a schedule is defined in SCDF for a task (so SCDF creates a CronJob) and this CronJob launches Jobs, the logs can't be retrieved. I checked the code in the KubernetesTaskLauncher class and saw that in the method private Job getJob(String jobName) the Jobs are looked up by the label spring-app-id:

List<Job> jobs = client.batch().jobs().withLabel(SPRING_APP_KEY, jobName).list().getItems();

but when I describe a Job that was launched by the CronJob, there is no label with this key:

szopal@aps00088850-mb:/$ kubectl describe jobs my-task-cronjobs-schedule1-1649166600

Name: my-task-cronjobs-schedule1-1649166600

Namespace: ns

Selector: controller-uid=687f3b60-5f9e-4434-92a2-bc355adae237

Labels: controller-uid=687f3b60-5f9e-4434-92a2-bc355adae237

job-name=my-task-cronjobs-schedule1-1649166600

spring-cronjob-id=my-task-cronjobs

Annotations: <none>

Controlled By: CronJob/my-task-cronjobs-schedule1

Parallelism: 1

Completions: 1

Start Time: Tue, 05 Apr 2022 15:50:09 +0200

Completed At: Tue, 05 Apr 2022 15:51:36 +0200

Duration: 87s

Pods Statuses: 0 Active / 1 Succeeded / 0 Failed

Pod Template:

Labels: controller-uid=687f3b60-5f9e-4434-92a2-bc355adae237

job-name=my-task-cronjobs-schedule1-1649166600

spring-cronjob-id=my-task-cronjobs

Service Account: default

Containers:

my-task-cronjobs:

Image: eu.gcr.io/xxxxxxx/my-task-cronjobs:1.8.6.125-SNAPSHOT

Port: <none>

Host Port: <none>

When I describe a Job that was launched manually, not by a CronJob, the label spring-app-id is present:

szopal@aps00088850-mb:/$ kubectl describe jobs my-task-jobs-gg5pq89mo3

Name: my-task-jobs-gg5pq89mo3

Namespace: ns

Selector: controller-uid=ed0258d9-1c80-454d-b01a-0dc60affebb6

Labels: spring-app-id=my-task-jobs-gg5pq89mo3

spring-deployment-id=my-task-jobs-gg5pq89mo3

task-name=my-task-jobs

Annotations: <none>

Parallelism: 1

Completions: 1

Start Time: Tue, 05 Apr 2022 15:37:35 +0200

Completed At: Tue, 05 Apr 2022 15:38:56 +0200

Duration: 81s

Pods Statuses: 0 Active / 1 Succeeded / 0 Failed

Pod Template:

Labels: controller-uid=ed0258d9-1c80-454d-b01a-0dc60affebb6

job-name=my-task-jobs-gg5pq89mo3

role=spring-app

spring-app-id=my-task-jobs-gg5pq89mo3

spring-deployment-id=my-task-jobs-gg5pq89mo3

task-name=my-task-jobs

Containers:

my-task-jobs-xx23l513dl:

Image: eu.gcr.io/xxxxxxx/my-task-jobs:1.8.6.12-SNAPSHOT

Port: <none>

Host Port: <none>

Feels like this is related to #3978

I can't agree, because a Job launched by a CronJob has the label:

job-name=my-task-cronjobs-schedule1-1649166600

and I think SCDF could retrieve the logs via this label instead of spring-app-id. What do you think?
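To make the suggestion concrete, here is a minimal sketch of the fallback I have in mind. It is not SCDF code: the class JobLogSelector and the method selectorFor are hypothetical names, and it only works on a plain map of labels, so it does not call the Kubernetes client at all. It prefers spring-app-id and falls back to job-name, which is the label visible on the CronJob-launched Job above.

```java
import java.util.Map;
import java.util.Optional;

// Hypothetical helper, not part of SCDF: picks a label selector for a Job's pods.
final class JobLogSelector {
    static final String SPRING_APP_KEY = "spring-app-id"; // label used for manually launched tasks
    static final String JOB_NAME_KEY = "job-name";        // label seen on the CronJob-launched Job

    // Returns "key=value" for the first usable label, preferring spring-app-id.
    static Optional<String> selectorFor(Map<String, String> labels) {
        for (String key : new String[] {SPRING_APP_KEY, JOB_NAME_KEY}) {
            String value = labels.get(key);
            if (value != null && !value.isEmpty()) {
                return Optional.of(key + "=" + value);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        // Labels copied from the two kubectl describe outputs in this issue.
        Map<String, String> cronLaunched = Map.of(
                "controller-uid", "687f3b60-5f9e-4434-92a2-bc355adae237",
                "job-name", "my-task-cronjobs-schedule1-1649166600",
                "spring-cronjob-id", "my-task-cronjobs");
        Map<String, String> manuallyLaunched = Map.of(
                "spring-app-id", "my-task-jobs-gg5pq89mo3",
                "spring-deployment-id", "my-task-jobs-gg5pq89mo3",
                "task-name", "my-task-jobs");

        // prints job-name=my-task-cronjobs-schedule1-1649166600
        System.out.println(selectorFor(cronLaunched).orElse("<no selector>"));
        // prints spring-app-id=my-task-jobs-gg5pq89mo3
        System.out.println(selectorFor(manuallyLaunched).orElse("<no selector>"));
    }
}
```

The resulting selector is the same kind of value that is passed to kubectl get pods --selector=..., so the existing lookup for manually launched tasks would stay unchanged.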

We set the property in a shell script in the container:

POD_NAME=$(head -1 /etc/hostname)

JOB_ID=$(getK8sJobId $POD_NAME)

TASK_EXTERNAL_EXECUTION_ID_KEY="--spring.cloud.task.external-execution-id"

ARGS=$(add2Args $ARGS $TASK_EXTERNAL_EXECUTION_ID_KEY $JOB_ID)

So in the end, external-execution-id is present in SCDF.

So just to confirm: when you fail to get the logs via SCDF, you're still able to see them if you fetch them another way, e.g. via kubectl?

Yes, of course:

szopal@aps000xxxx50-mb:/mnt/d$ kubectl logs $(kubectl get pods --selector=job-name=xxxxxxx-process-schedule2-manual-sd2qh --output=jsonpath='{.items[*].metadata.name}')

{"timestampSeconds":1649273223,"timestampNanos":246000000,"severity":"INFO","thread":"main","logger":"xx.xx.xxxx.batch.xx.XXXBootApplication","message":"Starting XXXBootApplication v1.8.7.228-SNAPSHOT using Java 11.0.11 on xxxxxxx-process-schedule2-manual-sd2qh-zp5ms with PID 39 (/xxxxxx/xxxxx-boot.jar started by root in /)","context":"default"}

{"timestampSeconds":1649273223,"timestampNanos":268000000,"severity":"DEBUG","thread":"main","logger":"xx.xxx.xxx.batch.xxx.XXXBootApplication","message":"Running with Spring Boot v2.6.6, Spring v5.3.18","context":"default"}

{"timestampSeconds":1649273223,"timestampNanos":269000000,"severity":"INFO","thread":"main","logger":"xx.xxx.xxx.xxx.xxx.XXXXBootApplication","message":"The following 1 profile is active: \"xxx-xxx-xxx-xxx\"","context":"default"}

{"timestampSeconds":1649273226,"timestampNanos":612000000,"severity":"INFO","thread":"main","logger":"org.springframework.integration.config.DefaultConfiguringBeanFactoryPostProcessor","message":"No bean named \u0027errorChannel\u0027 has been explicitly defined. Therefore, a default PublishSubscribeChannel will be created.","context":"default"}

{"timestampSeconds":1649273226,"timestampNanos":652000000,"severity":"INFO","thread":"main","logger":"org.springframework.integration.config.DefaultConfiguringBeanFactoryPostProcessor","message":"No bean named \u0027integrationHeaderChannelRegistry\u0027 has been explicitly defined. Therefore, a default DefaultHeaderChannelRegistry will be created.","context":"default"}

{"timestampSeconds":1649273226,"timestampNanos":945000000,"severity":"INFO","thread":"main","logger":"org.springframework.cloud.context.scope.GenericScope","message":"BeanFactory id\u003defc7fee1-b7df-38d4-9fad-ae2f09bec4aa","context":"default"}

@cppwfs As you've been handling these cases in the past, would you know what happens here?

@jvalkeal we can discuss.

Since this task was launched by the CronJob, Data Flow does not have the external execution id. Without the external execution id, Data Flow cannot obtain the log. However, we can add a feature that would put a label on the task, allowing Data Flow to identify the pod and get the appropriate log.

Is there a plan to develop this feature? 🥲

Hello,

We encountered an error in version 2.11.2-SNAPSHOT:

o.fabric8.kubernetes.client.KubernetesClientException: Failure executing: GET at: https://X.X.X.X/api/v1/namespaces/dataflow/pods?labelSelector=spring-app-id%3D%7BtaskExternalExecutionId%7D. Message: unable to parse requirement: values[0][spring-app-id]: Invalid value: "{taskExternalExecutionId}": a valid label must be an empty string or consist of alphanumeric characters

We are attempting to retrieve logs for a cronjob. Could there be a configuration issue or an underlying problem? We encountered the same error in version 2.11.1.

It seems that the {taskExternalExecutionId} placeholder is not being replaced in the Kubernetes query.

Regards.
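For illustration, the rejection in the error above can be reproduced without a cluster. The sketch below is not SCDF or fabric8 code; LabelValueCheck and isValidLabelValue are hypothetical names. It assumes the usual Kubernetes rule for label values: at most 63 characters, either empty or starting and ending with an alphanumeric character, with dashes, underscores and dots allowed in between. An unresolved placeholder fails this check because of the braces.

```java
import java.util.regex.Pattern;

// Hypothetical pre-flight check, not part of SCDF: validates a label value
// before it is used in a label selector.
final class LabelValueCheck {
    // Empty, or alphanumeric at both ends with '-', '_', '.' allowed inside.
    private static final Pattern LABEL_VALUE =
            Pattern.compile("(([A-Za-z0-9][-A-Za-z0-9_.]*)?[A-Za-z0-9])?");
    private static final int MAX_LENGTH = 63;

    static boolean isValidLabelValue(String value) {
        return value != null
                && value.length() <= MAX_LENGTH
                && LABEL_VALUE.matcher(value).matches();
    }

    public static void main(String[] args) {
        // The literal value from the failing request in this thread.
        System.out.println(isValidLabelValue("{taskExternalExecutionId}")); // prints false
        // A real spring-app-id value from earlier in this issue.
        System.out.println(isValidLabelValue("my-task-jobs-gg5pq89mo3"));   // prints true
    }
}
```

A check like this would only surface the problem earlier with a clearer message; the placeholder would still need to be resolved to a real execution id before the query can succeed.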

We are looking to add this feature in SCDF 3.x.

@cppwfs What is the expected release date for 3.x?