LAVIS

LAVIS - A One-stop Library for Language-Vision Intelligence

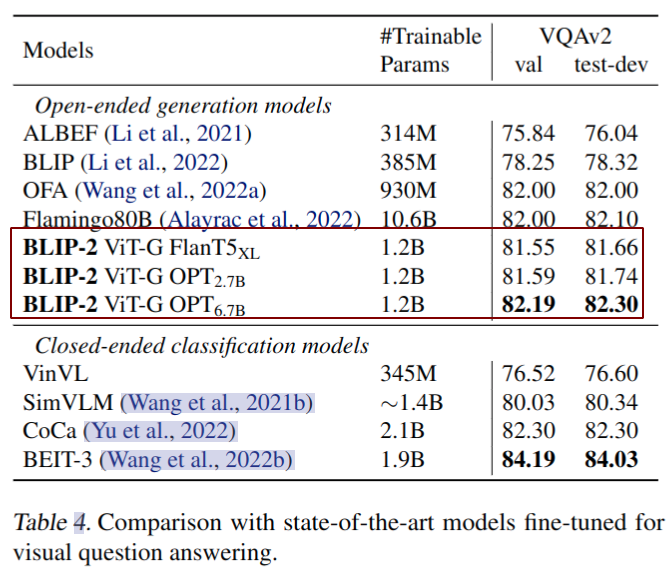

Thanks for the great work. Will the code related to the following table be open-sourced soon? And does the current code support OK-VQA fine-tuning? Thanks.

Add an image-text generation app for BLIP2 for better interaction.

Should be `torch.autocast()`: https://github.com/salesforce/LAVIS/blob/0e9a3ecfa5dc668761ce6c0e3cf827578e8593c4/lavis/models/blip2_models/blip2_t5.py#L218

Thanks for the great work! I was trying out the BLIP2 feature extractor, and it actually works. But when I load the model with `load_model_and_preprocess(name="blip2_feature_extractor", model_type="pretrain", is_eval=True, device=device)`, there are lots of missing keys...

ViT (if not finetuned) and LLM weights are not stored in the current BLIP2 checkpoints. The default `load_checkpoint()` in `BaseModel` raises a warning on missing keys, which causes confusion. Proposed solution...
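To illustrate why the warning is noisy, here is a minimal, library-independent sketch that separates missing keys that are expected to be absent from ones that may point to a real problem. It is not LAVIS's `load_checkpoint()` and not the solution proposed in the issue. The function `split_missing_keys` and the prefixes `visual_encoder.` and `llm.` are hypothetical names chosen for illustration; the real parameter names may differ.

```python
from typing import Iterable, List, Tuple

# Hypothetical prefixes for modules whose weights are assumed NOT to be
# stored in the checkpoint (frozen ViT and LLM). Real names may differ.
EXPECTED_ABSENT_PREFIXES: Tuple[str, ...] = ("visual_encoder.", "llm.")


def split_missing_keys(
    model_keys: Iterable[str],
    checkpoint_keys: Iterable[str],
    expected_absent_prefixes: Tuple[str, ...] = EXPECTED_ABSENT_PREFIXES,
) -> Tuple[List[str], List[str]]:
    """Return (expected_missing, unexpected_missing) parameter names."""
    stored = set(checkpoint_keys)
    # Keys the model defines but the checkpoint does not provide.
    missing = [key for key in model_keys if key not in stored]
    # Missing keys under a known-absent prefix are harmless by assumption.
    expected = [k for k in missing if k.startswith(expected_absent_prefixes)]
    # Anything else missing deserves a warning.
    unexpected = [k for k in missing if not k.startswith(expected_absent_prefixes)]
    return expected, unexpected


# Toy key names, invented for this example.
model_keys = [
    "visual_encoder.blocks.0.attn.qkv.weight",
    "llm.layer.0.weight",
    "Qformer.query_tokens",
    "proj.weight",
]
checkpoint_keys = ["Qformer.query_tokens"]

expected, unexpected = split_missing_keys(model_keys, checkpoint_keys)
if unexpected:
    print("Missing keys that may need attention:", unexpected)
```

With this split, only `proj.weight` is reported in the toy example, while the two keys under the assumed frozen-module prefixes are left out of the warning.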

Great work! Thanks for releasing the models, pre-trained checkpoints and examples. I have a couple of questions about the BLIP and BLIP 2 models - I can't clearly find this...

It's a great job indeed. I encountered a downloading issue and I have to run the program on a remote server that cannot connect to the network. When I tried...

When running the examples from the [colab](https://colab.research.google.com/github/salesforce/LAVIS/blob/main/examples/blip2_instructed_generation.ipynb), they work fine on GPU but I get the following runtime error when trying to run on CPU:

```sh
RuntimeError: Input type (torch.FloatTensor)...
```

Thank you for your fantastic work! Would you release the weights for the BLIP2 ViT-L version?