madsthaks

Thank you for your response; this was very helpful. I have one related follow-up question. To double-check my SHAP values for an individual prediction, I took...

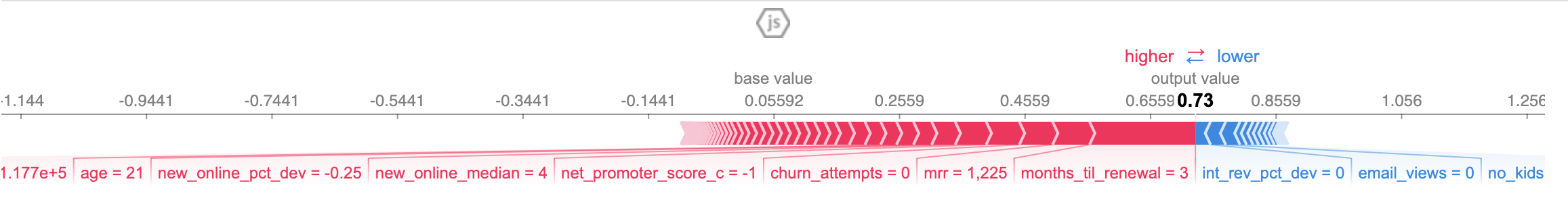

Here is the code I've used:

```
explainerModel_prob = shap.TreeExplainer(xgb_model, data=shap.sample(df, 100), model_output='probability')
shap_values_model_prob = explainerModel_prob.shap_values(df)
shap.initjs()
shap.force_plot(explainerModel_prob.expected_value, shap_values_model_prob[5], df.iloc[[0]])
```

```
print(np.sum(shap_values_model_prob[5]))
0.67
```

The output_value in the force...
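The check being attempted relies on SHAP's local accuracy (additivity) property: for a given row, `expected_value` plus the sum of that row's SHAP values should equal the model output for that same row. The sum alone is only the shift away from the base value, and the SHAP row and the data row must refer to the same observation. The sketch below illustrates this without shap or xgboost: it brute-forces exact interventional Shapley values for a hypothetical three-feature toy model (`margin`, `predict_proba`, `exact_shap` and the random background are stand-ins for `xgb_model` and `shap.sample(df, 100)`), assuming this interventional value function matches what `TreeExplainer` computes when `data` is supplied.

```python
import itertools
import math

import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def margin(X):
    # Hypothetical stand-in for xgb_model: raw log-odds with an interaction term.
    return 0.8 * X[:, 0] - 1.2 * X[:, 1] + 0.5 * X[:, 0] * X[:, 2]


def predict_proba(X):
    # Probability output, the analogue of model_output='probability'.
    return sigmoid(margin(X))


def exact_shap(f, x, background):
    """Exact interventional Shapley values by enumerating every coalition."""
    n = len(x)

    def v(S):
        # Features in S take their value from x, the rest come from each
        # background row; the model output is then averaged over the rows.
        Z = background.copy()
        cols = list(S)
        if cols:
            Z[:, cols] = x[cols]
        return f(Z).mean()

    phi = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):
            w = math.factorial(k) * math.factorial(n - k - 1) / math.factorial(n)
            for S in itertools.combinations(others, k):
                phi[i] += w * (v(S + (i,)) - v(S))
    return phi


rng = np.random.default_rng(0)
background = rng.normal(size=(100, 3))  # stands in for shap.sample(df, 100)
x = np.array([1.0, -0.5, 2.0])          # the single row being explained

expected_value = predict_proba(background).mean()  # base value of the plot
phi = exact_shap(predict_proba, x, background)

# Local accuracy: base value + sum of this row's SHAP values equals the model
# output for the same row; the sum alone is only the shift from the base value.
print(expected_value + phi.sum())
print(predict_proba(x[None, :])[0])
```

Enumerating every coalition is exponential in the number of features, so this is only useful as a check on toy models; `TreeExplainer` is designed to compute such values efficiently for tree ensembles.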

Thanks! So, can you elaborate on how this `expected_value` is calculated? I looked through your code and saw this piece:

```
elif data is not None:
    try:
        self.expected_value = self.model.predict(self.data,...
```
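A minimal sketch of how such a base value can be formed, assuming (as the local accuracy check requires) that `expected_value` is the average model output over the background rows; the remaining arguments of the quoted `predict` call are elided, so they are not reproduced here. The model `margin` and the four background rows are hypothetical. The sketch also shows why the base value differs between output spaces: the average of probabilities is not the sigmoid of the average log-odds.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def margin(X):
    # Hypothetical toy model returning raw log-odds scores.
    return 0.5 + 1.5 * X[:, 0] - 1.0 * X[:, 1]


# Hypothetical background rows standing in for shap.sample(df, 100).
background = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0], [2.0, 1.0]])

# Base value for log-odds explanations: mean raw score over the background.
expected_logodds = margin(background).mean()

# Base value for probability explanations: mean predicted probability.
expected_prob = sigmoid(margin(background)).mean()

print(round(expected_logodds, 4))           # 0.875
print(round(expected_prob, 4))              # 0.6525
print(round(sigmoid(expected_logodds), 4))  # 0.7058, not the probability base value
```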

Ah, silly mistake. Thanks for the response. I did have one more question: I started analyzing the top drivers using SHAP and compared the results using probabilities and log odds....
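One reason the top drivers can differ between the two outputs, sketched with a hypothetical two-feature model that is additive in log-odds and two hypothetical background rows: with probability output, the sigmoid is applied before averaging over the background, so probability-space SHAP values are not a rescaled copy of the log-odds ones and the feature ranking can reverse. The helper `exact_shap` computes exact interventional Shapley values by enumerating coalitions; treating that as equivalent to `TreeExplainer`'s output is an assumption, not a description of its implementation.

```python
import itertools
import math

import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def margin(X):
    # Hypothetical model that is additive in log-odds: score = x0 + x1.
    return X[:, 0] + X[:, 1]


def predict_proba(X):
    return sigmoid(margin(X))


def exact_shap(f, x, background):
    """Exact interventional Shapley values by enumerating every coalition."""
    n = len(x)

    def v(S):
        # Features in S come from x, the rest from each background row;
        # the model output is averaged over the background rows.
        Z = background.copy()
        cols = list(S)
        if cols:
            Z[:, cols] = x[cols]
        return f(Z).mean()

    phi = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):
            w = math.factorial(k) * math.factorial(n - k - 1) / math.factorial(n)
            for S in itertools.combinations(others, k):
                phi[i] += w * (v(S + (i,)) - v(S))
    return phi


background = np.array([[-4.0, -1.0], [0.0, 1.0]])  # hypothetical background rows
x = np.array([1.5, -3.0])                          # hypothetical row to explain

phi_logodds = exact_shap(margin, x, background)      # roughly [3.5, -3.0]
phi_prob = exact_shap(predict_proba, x, background)  # roughly [0.28, -0.47]

# Features ordered from largest to smallest |SHAP| in each output space.
print(np.argsort(-np.abs(phi_logodds)))  # [0 1]
print(np.argsort(-np.abs(phi_prob)))     # [1 0]
```

Feature 0 is the top driver in log-odds, but feature 1 is the top driver in probability for the same row.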

Got it, thanks again. One last question: I also noticed that for a particular prediction, the directionality of a specific driver changes. This seems a bit odd. Any ideas why...
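One possible mechanism, sketched with a hypothetical additive log-odds model and two hypothetical background rows (this illustrates a cause, it does not diagnose the specific prediction): because the probability output is averaged over background rows after the sigmoid, a feature whose log-odds SHAP value is positive can receive a negative SHAP value in probability space for the same prediction. `exact_shap` brute-forces interventional Shapley values and is an illustrative stand-in, not shap's implementation.

```python
import itertools
import math

import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def margin(X):
    # Hypothetical model that is additive in log-odds: score = x0 + x1.
    return X[:, 0] + X[:, 1]


def predict_proba(X):
    return sigmoid(margin(X))


def exact_shap(f, x, background):
    """Exact interventional Shapley values by enumerating every coalition."""
    n = len(x)

    def v(S):
        # Features in S come from x, the rest from each background row;
        # the model output is averaged over the background rows.
        Z = background.copy()
        cols = list(S)
        if cols:
            Z[:, cols] = x[cols]
        return f(Z).mean()

    phi = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):
            w = math.factorial(k) * math.factorial(n - k - 1) / math.factorial(n)
            for S in itertools.combinations(others, k):
                phi[i] += w * (v(S + (i,)) - v(S))
    return phi


background = np.array([[-10.0, 0.0], [6.0, 0.0]])  # hypothetical background rows
x = np.array([-0.1, -1.0])                         # hypothetical row to explain

phi_logodds = exact_shap(margin, x, background)      # roughly [1.9, -1.0]
phi_prob = exact_shap(predict_proba, x, background)  # roughly [-0.135, -0.114]

# Sign of feature 0's contribution in each output space.
print(np.sign(phi_logodds[0]), np.sign(phi_prob[0]))  # 1.0 -1.0
```

Here feature 0 raises the log-odds relative to the background mean, but much of the larger log-odds gain for the very negative background row falls in the flat tail of the sigmoid, so it buys less probability than the smaller log-odds loss for the positive row costs.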