Yi-Chen (Howard) Lo

### [NeurIPS 2019 Reviewer Guidelines](https://nips.cc/Conferences/2019/PaperInformation/ReviewerGuidelines#Examples) I have found that following these guidelines when summarizing a paper can help develop research taste.

### Summary This paper presents a systematic re-evaluation of a number of recently proposed GAN-based text generation models. The authors argue that n-gram-based metrics (e.g., BLEU, Self-BLEU) are not sensitive...
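For readers unfamiliar with Self-BLEU, the following is a minimal sketch of the idea: each generated sample is scored against all the other samples as references, so a higher average suggests lower diversity. The scoring function here is a deliberately simplified BLEU (clipped n-gram precision up to bigrams, no brevity penalty or smoothing), and the names `simple_bleu` and `self_bleu` are hypothetical; this is not the evaluation code used in the paper.

```python
from collections import Counter
import math


def ngrams(tokens, n):
    """Count the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(hyp, refs, n):
    """Clipped n-gram precision of `hyp` against a list of references."""
    hyp_counts = ngrams(hyp, n)
    if not hyp_counts:
        return 0.0
    # For each n-gram, the largest count seen in any single reference.
    max_ref = Counter()
    for ref in refs:
        for gram, count in ngrams(ref, n).items():
            max_ref[gram] = max(max_ref[gram], count)
    clipped = sum(min(count, max_ref[gram]) for gram, count in hyp_counts.items())
    return clipped / sum(hyp_counts.values())


def simple_bleu(hyp, refs, max_n=2):
    """Simplified BLEU: geometric mean of clipped precisions (assumption:
    no brevity penalty, no smoothing)."""
    precisions = [modified_precision(hyp, refs, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0
    return math.exp(sum(math.log(p) for p in precisions) / max_n)


def self_bleu(samples, max_n=2):
    """Average BLEU of each sample against all the other samples."""
    scores = []
    for i, hyp in enumerate(samples):
        refs = samples[:i] + samples[i + 1:]
        scores.append(simple_bleu(hyp, refs, max_n))
    return sum(scores) / len(scores)


# Toy data: a collapsed generator versus one with no n-gram overlap.
collapsed = [["the", "cat", "sat"]] * 3
diverse = [["the", "cat", "sat"], ["a", "dog", "ran"], ["birds", "fly", "south"]]
collapsed_score = self_bleu(collapsed)
diverse_score = self_bleu(diverse)
```

On this toy data the collapsed set scores higher than the diverse one, which is the behaviour Self-BLEU is meant to capture.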

@xpqiu Hi Xipeng, your paper is actually on my reading list right now! Ha! I will get to it soon and write a summary of it. It would...

### Summary 1. Pre-training the word embeddings in the source and/or target languages helps to increase BLEU scores. 2. Pre-training the source-language embeddings yields a large improvement, indicating that better encoding...

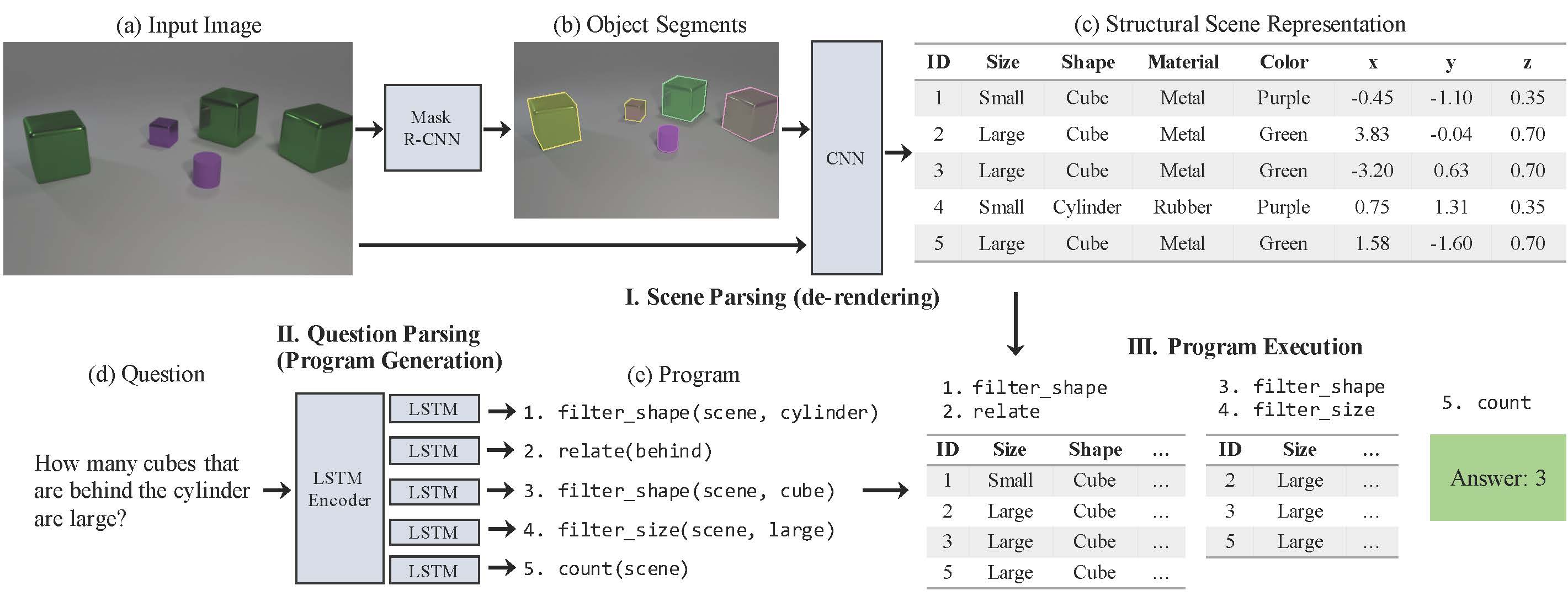

### Summary The neural-symbolic VQA (NS-VQA) model has three components: - A scene parser (de-renderer) that segments an input image (a-b) and recovers a structural scene representation (c). - A...

### Summary - Presents a fluency boost learning and inference mechanism for seq2seq models (see Figure 2). - Fluency boost learning: generates more error-corrected sentence pairs from the model's n-best list, enabling...

### Summary This paper proposes Relation Networks (RN) to solve relational reasoning problems, and achieves SOTA on visual QA on the CLEVR dataset, text-based QA on the bAbI dataset, and complex reasoning...
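As a rough illustration of the core RN computation, the sketch below applies a pairwise function to every ordered pair of objects, sums the results, and passes the pooled vector to a readout function. In the paper both functions are learned MLPs; here `g_theta` and `f_phi` are hypothetical hand-written stand-ins chosen only to make the structure visible.

```python
from itertools import product


def g_theta(o_i, o_j):
    """Stand-in for the learned pairwise relation function (an MLP in the
    paper). Here: element-wise absolute difference, purely for illustration."""
    return [abs(a - b) for a, b in zip(o_i, o_j)]


def f_phi(pooled):
    """Stand-in for the learned readout function. Here: a plain sum."""
    return sum(pooled)


def relation_network(objects):
    """Apply g_theta to all ordered object pairs, sum, then apply f_phi."""
    dim = len(objects[0])
    pooled = [0.0] * dim
    for o_i, o_j in product(objects, repeat=2):
        relation = g_theta(o_i, o_j)
        pooled = [p + r for p, r in zip(pooled, relation)]
    return f_phi(pooled)


# Toy "objects": each is a small feature vector.
objects = [[0.0, 1.0], [2.0, 3.0], [5.0, 1.0]]
score = relation_network(objects)
score_reversed = relation_network(list(reversed(objects)))
```

Because the pairwise outputs are summed, the result does not depend on the order in which the objects are presented, so `score` and `score_reversed` agree.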

### Summary This paper proposes a new paradigm of learning to ground comparative adjectives within the realm of color description: given a reference RGB color and a comparative term (e.g.,...

### Model uncertainty Model or epistemic uncertainty captures uncertainty in the model parameters. It is higher in regions of no or little training data and lower in regions of more...
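One way to see this effect is a small bootstrap ensemble, sketched below under the assumption that disagreement between ensemble members is an acceptable stand-in for epistemic uncertainty. Each member is a least-squares line fit to a resampled copy of the training data; the helper names, the toy data, and the choice of bootstrapping are illustrative assumptions rather than the estimator used in the original note.

```python
import random


def fit_line(points):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def bootstrap_ensemble(points, n_models=50, seed=0):
    """Fit one line per bootstrap resample of the training points."""
    rng = random.Random(seed)
    models = []
    while len(models) < n_models:
        sample = [rng.choice(points) for _ in points]
        # Skip degenerate resamples where every x is identical.
        if len({x for x, _ in sample}) < 2:
            continue
        models.append(fit_line(sample))
    return models


def predictive_variance(models, x):
    """Variance of the ensemble's predictions at input x."""
    preds = [slope * x + intercept for slope, intercept in models]
    mean = sum(preds) / len(preds)
    return sum((p - mean) ** 2 for p in preds) / len(preds)


# Toy training data: x in [0, 1], y = 2x plus Gaussian noise.
data_rng = random.Random(1)
train = [(i / 20, 2.0 * (i / 20) + data_rng.gauss(0, 0.1)) for i in range(21)]

models = bootstrap_ensemble(train)
var_in = predictive_variance(models, 0.5)   # inside the training range
var_out = predictive_variance(models, 5.0)  # far outside the training range
```

The ensemble members agree closely where training data is dense and diverge far from it, so `var_out` comes out larger than `var_in`.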

### Summary This paper proposes a *Deep Reinforcement Relevance Network* (DQN-based) that learns to understand text from both the text-based state and the action (i.e., it learns the "relevance" between state and action) via...