Kyle Bi

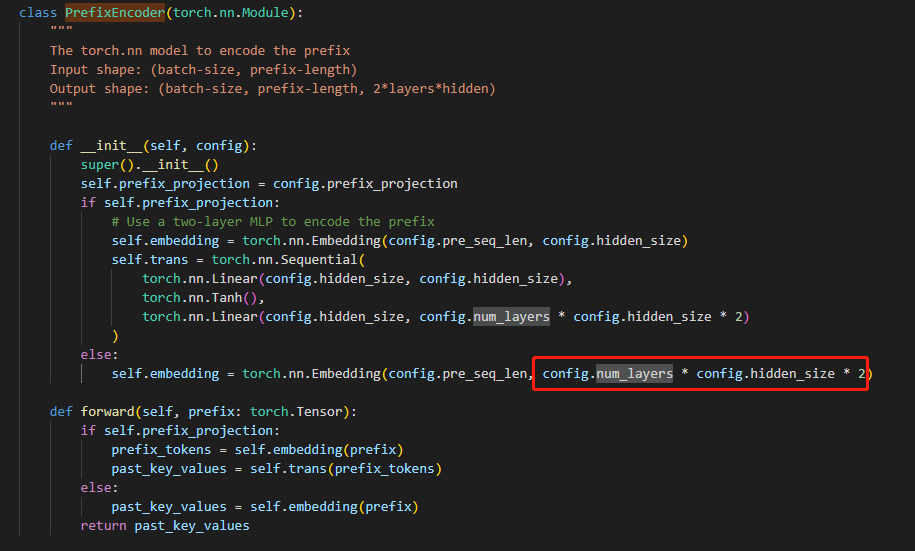

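> > > > Looking at the source, it is implemented in all layers. The code is at line 136 of modeling_chatglm.py and matches the P-Tuning v2 code.  Hello, one more question: what is the input to the forward function of PrefixEncoder? From the figure in the paper, I take it that the pre_seq_len tokens are the input?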

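Hi OP, a question: when I run train_prompts.sh, actor_optim and critic_optim end up with no optimizable parameters, so the flatten operation in the ZeRO strategy raises an error, because flatten cannot take an empty parameter list. I also tried setting requires_grad=True on all the optimizer parameters in train_peft_prompts.py, but that did not help either.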

> @yynil > > act_num, cri_num = 0,0 for name,para in actor.named_parameters(): if para.requires_grad: print(name) act_num +=1 for name,para in critic.named_parameters(): if para.requires_grad: print(name) cri_num +=1 print(act_num, cri_num) > >...
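The check quoted above can be sketched as a small standalone helper. This is only a minimal sketch: the function names `trainable_parameter_names` and `report_trainable` are hypothetical, and it assumes that `actor` and `critic` expose `named_parameters()` with a `requires_grad` flag on each parameter, as `torch.nn.Module` does.

```python
def trainable_parameter_names(model):
    """Return the names of parameters that an optimizer could update."""
    # Relies only on named_parameters() and requires_grad (torch.nn.Module style).
    return [name for name, param in model.named_parameters() if param.requires_grad]


def report_trainable(actor, critic):
    """Print trainable parameter names, then the (actor, critic) counts."""
    act_names = trainable_parameter_names(actor)
    cri_names = trainable_parameter_names(critic)
    for name in act_names + cri_names:
        print(name)
    print(len(act_names), len(cri_names))
    return len(act_names), len(cri_names)
```

If either count comes back as 0, the corresponding optimizer would receive an empty parameter list, which would be consistent with the flatten error described above.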

Hi, after setting llama_model: "wangrongsheng/MiniGPT-4-LLaMA" in minigpt4/configs/models/minigpt4.yaml, which LLaMA model will it load: 13B or 7B?

> Hello, when I ran with wangrongsheng/MiniGPT-4-LLaMA-7B, an error occurred: the shapes of the weight and bias in the llama_proj module of the original MiniGPT-4 did not match (4096 vs 5120). Thus, I'm...

Hi OP, could you share the original model weights for LLaMA-13B? Thank you very much.

About the garbled text: does this require changing Chrome's encoding setting?

@yangapku Could you please take a look and answer this when you have time? Thanks.

    import time

    def train():
        for i, epoch in enumerate(range(start_epoch, end_epoch)):
            for train_sample in train_data_loader:
                start_time = time.time()
                ...  # doing...
                print('Time consuming: {}s'.format(time.time() - start_time))

If it is convenient, could you share where to get the AVA data? Thanks.