baedan

baedan

Zygote v0.6.41, Julia 1.7.3 MWE: ```julia using Zygote α, β = randn(2, 2), randn(2, 2) g(v) = map(eachcol(v), eachcol(β)) do x, y sum(x.*x.*y) end |> sum # this fails gradient(α)...

is there a way to create a copy of an existing model with a new, _flat_ parameters vector, _without mutating_, and _without using `restructure`_? the reason for the latter two...

TRPO

this PR implements Trust-Region Policy Optimization, and adds a CartPole experiment for it. to this end, i wrote a few utility functions that are shared amongst policy gradient policies (#737)....

hello! while going through `vpg.jl` i had some odds-and-ends questions. still pretty new to julia and especially Flux.jl, so please bear with me :D 1. i don't understand the point...

as far as i can tell, only off-line λ-return is implemented (`TDλReturnLearner`). any interest in implementing others, such as TD(λ), n-step truncated return, true online TD(λ), and so on? i'm...

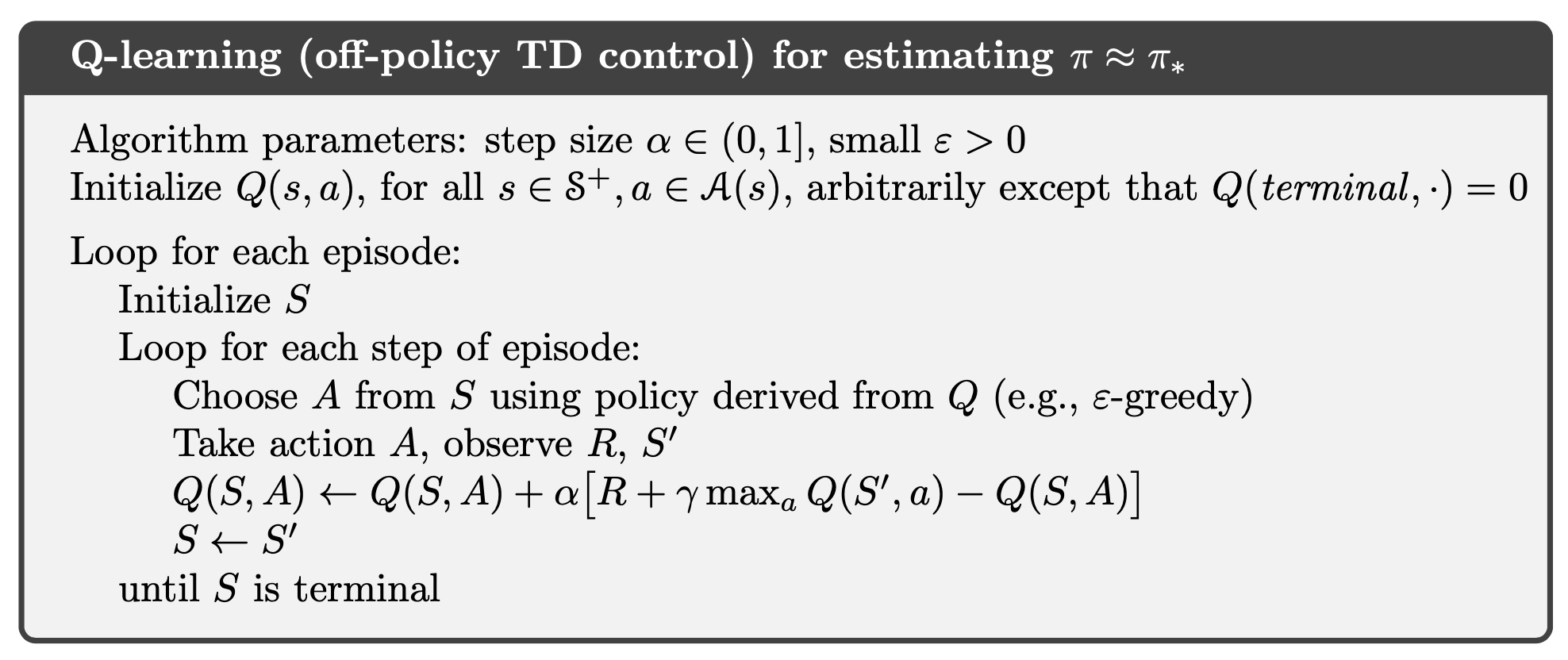

according to RL: An Introduction (page 131), Q-learning should select an action _having already learned from the transition immediately preceding it_.  this differentiates it from SARSA, which selects an...

today i was trying estimate the state values of a policy using off-policy `n`-step TD. as far as i can tell, i need to use a `VBasedPolicy` to represent my...

`TDLearner(;approximator, γ=1.0, method, n=0)`: the `n` in the constructor is strangely not the number of time steps used, but rather that number minus 1. this is really strange.

low hanging fruit for a ton of performance gain. will make pr when i get the chance