Q-learning update timing

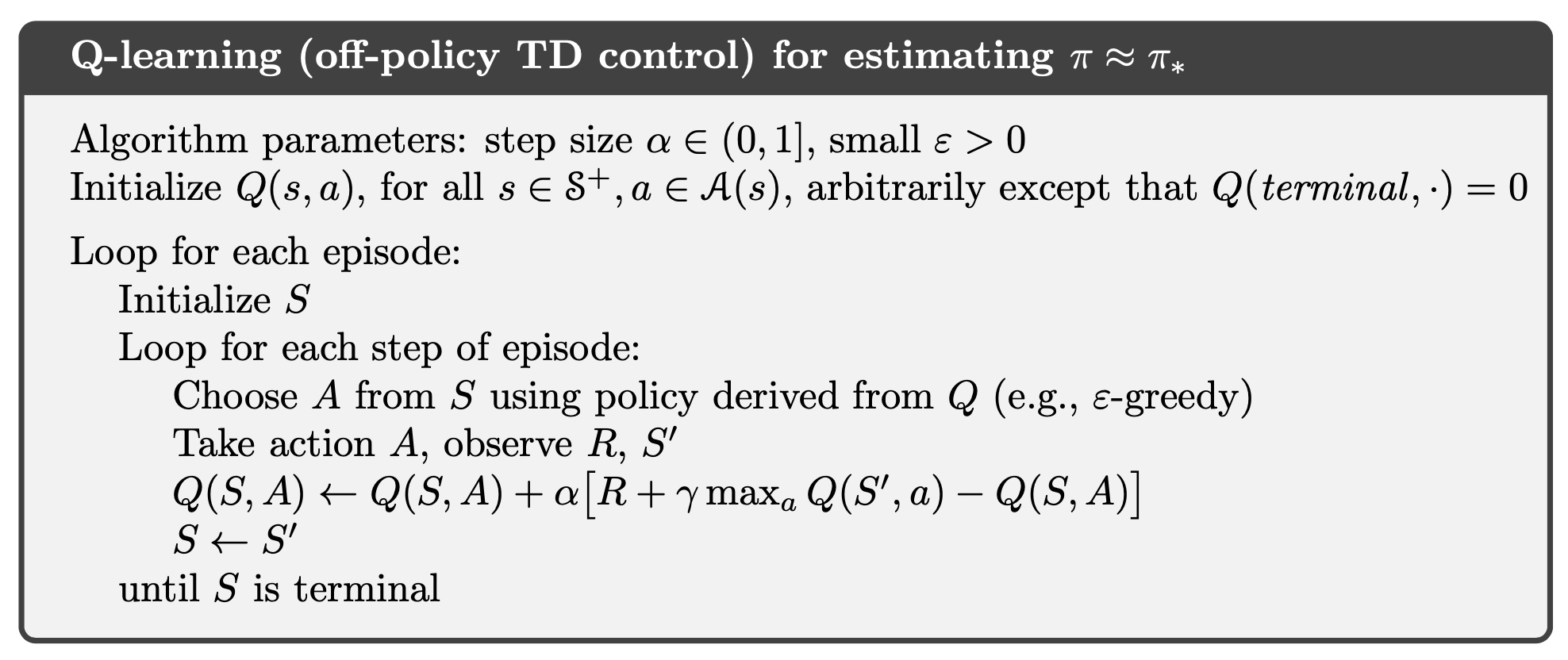

according to RL: An Introduction (page 131), Q-learning should select an action having already learned from the transition immediately preceding it.

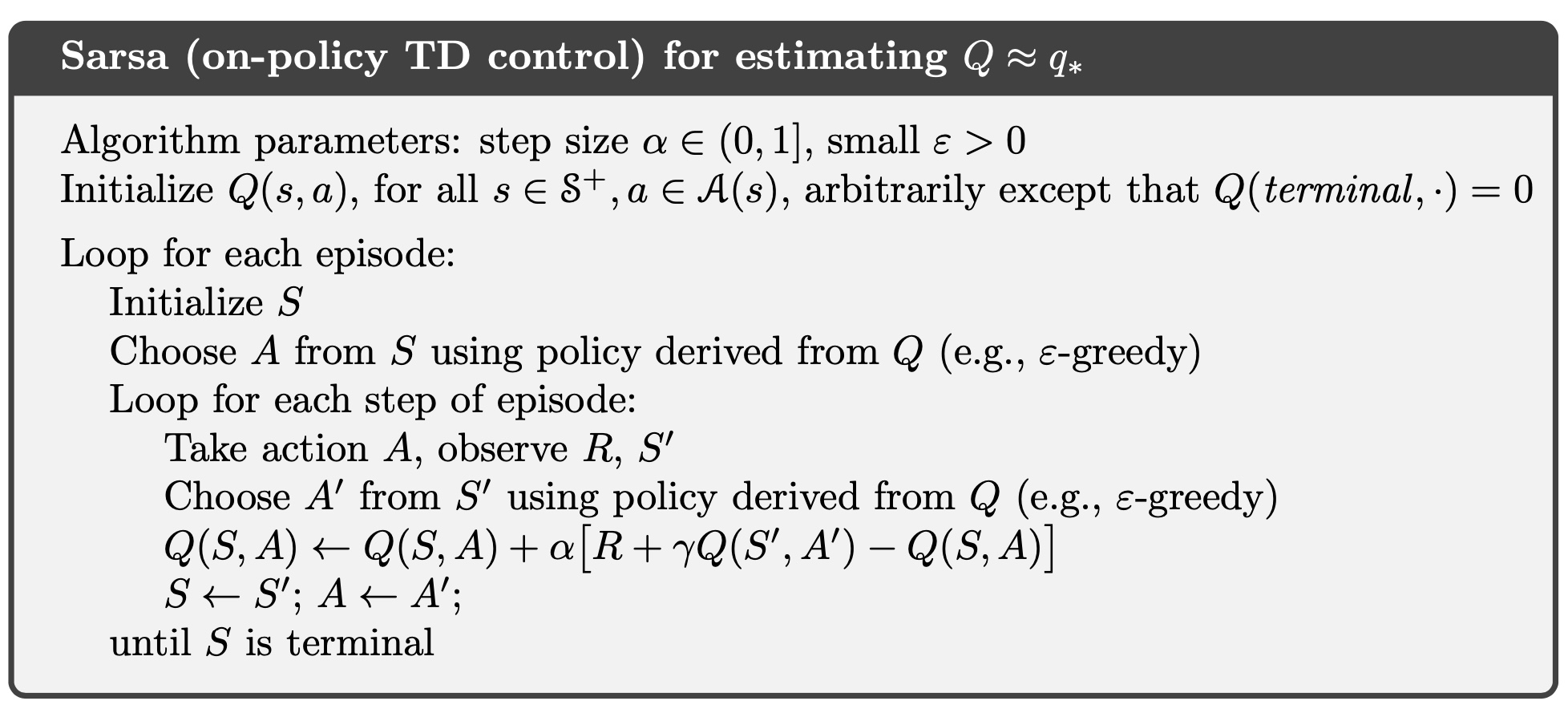

this differentiates it from SARSA, which selects an action not having learned from the transition immediately preceding it. the reason is that a SARSA update requires precisely that action as input. Q-learning, being an off-policy algorithm, doesn't have this requirement.

however, here, at every step $t$, :SARS TD updates during :PreActStage, using the trajectory from the previous transition at $t-1$. so in the next step $t+1$, the algorithm chooses action not having updated using the transition at $t$. this is in accordance to SARSA, but not Q-learning.

https://github.com/JuliaReinforcementLearning/ReinforcementLearning.jl/blob/fc74394b4552d09d50411fcb46d62c6b85ac3da9/src/ReinforcementLearningZoo/src/algorithms/tabular/td_learner.jl#L125-L143

Thanks! This is a very important bug that may be the root reason of several strange results. I'll fix it in the next release.

note that expected SARSA has the same issue. it's similar to q-learning in that action need not be selected before an update using the previous transition. there's no pseudocode in the book, but here's a paper with it.

https://github.com/JuliaReinforcementLearning/ReinforcementLearning.jl/blob/fc74394b4552d09d50411fcb46d62c6b85ac3da9/src/ReinforcementLearningZoo/src/algorithms/tabular/td_learner.jl#L103-L123