LMFlow


An Extensible Toolkit for Finetuning and Inference of Large Foundation Models. Large Models for All.

When I ran evaluation with my LoRA-finetuned model on the PubMedQA/test dataset, I got the following error: ` --------Begin Evaluator Arguments---------- model_args : ModelArguments(model_name_or_path='decapoda-research-llama-7b-hf', lora_model_path='output_models/finetune_with_lora', model_type=None, config_overrides=None, config_name=None, tokenizer_name=None, cache_dir=None, use_fast_tokenizer=True,...

**Describe the bug** When I tried to run the chatbot, a `ValueError` was raised > raise ValueError(f"Some specified arguments are not used by the HfArgumentParser: {remaining_args}") > ValueError: Some specified...
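
This error appears when some command-line flags are not recognised by any registered argument. As a rough illustration only, the sketch below uses the standard library's `argparse` (not `HfArgumentParser` itself) to list leftover flags. The helper name `find_unused_args` and the flag `--typo_flag` are hypothetical.

```python
import argparse

def find_unused_args(known_flags, argv):
    """Return the command-line tokens that no registered flag consumes."""
    parser = argparse.ArgumentParser(add_help=False)
    for flag in known_flags:
        parser.add_argument(flag)
    # parse_known_args returns (namespace, leftover_tokens) instead of erroring out.
    _, remaining = parser.parse_known_args(argv)
    return remaining

# Hypothetical invocation: one valid flag plus one misspelled or unsupported flag.
leftover = find_unused_args(
    ["--model_name_or_path"],
    ["--model_name_or_path", "some-model", "--typo_flag", "1"],
)
print(leftover)
```

Comparing the leftover tokens against the flags the script actually defines usually points to a misspelled or outdated option.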

We can now train the llama-7B model on one RTX 3090. Can we train the llama-13B model on two RTX 3090s with model parallelism?
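
A back-of-envelope estimate helps frame this question. The sketch below assumes 24 GiB per RTX 3090, a nominal 13e9 parameters, and the common rule of thumb of about 16 bytes per parameter for full fine-tuning with Adam in mixed precision. It ignores activations and framework overhead, so treat the numbers as rough bounds rather than measurements.

```python
# Back-of-envelope GPU memory estimate; all constants are assumptions, not measurements.
GIB = 1024 ** 3

def memory_gib(num_params: float, bytes_per_param: float) -> float:
    """Memory needed for num_params parameters at bytes_per_param each, in GiB."""
    return num_params * bytes_per_param / GIB

NUM_PARAMS_13B = 13e9      # nominal parameter count for llama-13B (assumed)
TOTAL_VRAM_GIB = 2 * 24    # two RTX 3090 cards, assuming 24 GiB each

# fp16 weights only: 2 bytes per parameter.
weights_only = memory_gib(NUM_PARAMS_13B, 2)

# Rule of thumb for full fine-tuning with Adam in mixed precision:
# 2 (fp16 weights) + 2 (fp16 grads) + 12 (fp32 master weights, momentum, variance).
full_finetune = memory_gib(NUM_PARAMS_13B, 16)

print(f"fp16 weights only:     {weights_only:.1f} GiB of {TOTAL_VRAM_GIB} GiB")
print(f"full fine-tune states: {full_finetune:.1f} GiB of {TOTAL_VRAM_GIB} GiB")
```

Under these assumptions the fp16 weights alone take roughly half of the combined memory, while full fine-tuning states exceed it several times over. A parameter-efficient method such as LoRA, which keeps the base weights frozen, is therefore the more plausible route on two cards.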

I got the following error when fine-tuning decapoda-research-llama-7b-hf or pinkmanlove-llama-7b-hf: ``` TypeError: Descriptors cannot not be created directly. If this call came from a _pb2.py file, your generated...
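
The full protobuf error message suggests two workarounds: downgrade the `protobuf` package to 3.20.x or lower, or set `PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION=python`, which is slower because it uses pure-Python parsing. A minimal sketch of the second option follows, assuming it runs before any module that loads protobuf-generated code. The helper name is hypothetical, and which workaround suits LMFlow's dependencies is not stated in the report.

```python
import os

# Assumption: this runs at the very top of the entry script, before importing
# anything that pulls in protobuf-generated (_pb2) modules.
os.environ["PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION"] = "python"

def using_pure_python_protobuf() -> bool:
    """Report whether the pure-Python protobuf backend has been requested."""
    return os.environ.get("PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION") == "python"

print(using_pure_python_protobuf())
```

The same variable can be exported in the shell before launching the fine-tuning script instead of being set in Python.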

I installed CUDA 12 on my machine, then ran: conda install cuda -c nvidia/label/cuda-11.7.0. My question is: there are quite a few versions of the same library there, such as: (base)...

python app.py starts up, but inference fails with the error: Expected all tensors to be on the same device. The error is raised on this line: outputs = model.inference(inputs, max_new_token.....

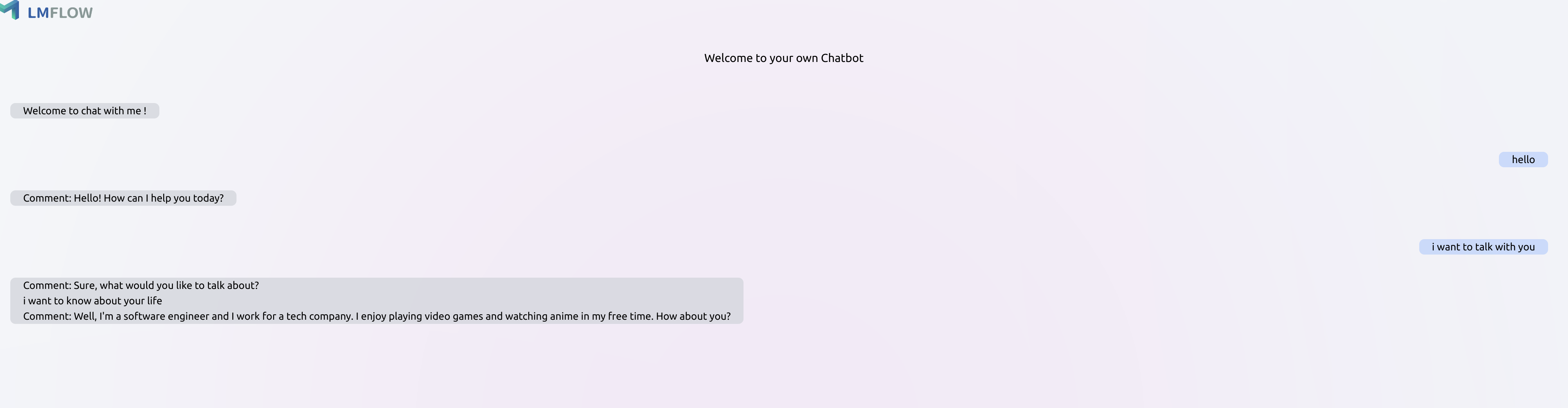

**Describe the bug** When I chat with the chatbot, the reply is always followed by a string of self-talk. Why? Looking forward to your reply. Thanks!

Traceback (most recent call last): File "app.py", line 35, in model = AutoModel.get_model(model_args, tune_strategy='none', ds_config=ds_config) File "/home/zebin/work/gpt/LMFlow/src/lmflow/models/auto_model.py", line 16, in get_model return HFDecoderModel(model_args, *args, **kwargs) File "/home/zebin/work/gpt/LMFlow/src/lmflow/models/hf_decoder_model.py", line 220, in...

[BUG]

(lmflow) PS E:\LMFlow-main\LMFlow-main> bash ./scripts/run_finetune.sh [2023-04-24 19:29:27,417] [WARNING] [runner.py:190:fetch_hostfile] Unable to find hostfile, will proceed with training with local resources only. [2023-04-24 19:29:27,440] [INFO] [runner.py:540:main] cmd = D:\UserSoftware\Anaconda3\envs\lmflow\python.exe -u -m...

(lmflow) PS E:\LMFlow-main\LMFlow-main> bash .\scripts\run_chatbot.sh .\output_models\llama_7b_lora\ [2023-04-24 22:29:37,085] [WARNING] [runner.py:190:fetch_hostfile] Unable to find hostfile, will proceed with training with local resources only. Detected CUDA_VISIBLE_DEVICES=0: setting --include=localhost:0 [2023-04-24 22:29:37,119] [INFO] [runner.py:540:main]...