LMFlow

An Extensible Toolkit for Finetuning and Inference of Large Foundation Models. Large Models for All.

(lmflow) PS E:\LMFlow-main\LMFlow-main> bash .\scripts\run_chatbot.sh .\output_models\llama7b-lora-medical\ .\output_models\llama7b-lora-medical\llama7b-lora-medical\ [2023-04-24 22:59:26,646] [WARNING] [runner.py:190:fetch_hostfile] Unable to find hostfile, will proceed with training with local resources only. Detected CUDA_VISIBLE_DEVICES=0: setting --include=localhost:0 [2023-04-24 22:59:26,667] [INFO]...

[2023-04-24 16:32:24,796] [WARNING] [runner.py:186:fetch_hostfile] Unable to find hostfile, will proceed with training with local resources only. Traceback (most recent call last): File "/usr/local/bin/deepspeed", line 6, in main() File "/usr/local/lib/python3.10/site-packages/deepspeed/launcher/runner.py", line...

(lmflow) PS E:\LMFlow-main\LMFlow-main\service> python .\app.py [2023-04-25 21:05:12,655] [INFO] [comm.py:570:init_distributed] Not using the DeepSpeed or dist launchers, attempting to detect MPI environment... '"hostname -I"' is not recognized as an internal or external command, operable program or batch file. Traceback (most recent call last):...
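The log above shows DeepSpeed's `init_distributed` falling back to MPI discovery, which shells out to `hostname -I`, a Linux command that does not exist on Windows. DeepSpeed only attempts that discovery when the launcher variables `RANK`, `LOCAL_RANK`, `WORLD_SIZE`, `MASTER_ADDR` and `MASTER_PORT` are not all set. A minimal sketch, assuming a single-GPU, single-process run: pre-set those variables before DeepSpeed's distributed initialization runs (for example, near the top of `service/app.py`). The helper name `set_single_process_dist_env` and port `29500` are hypothetical choices, not part of LMFlow, and this only gets past the MPI-discovery step; whether the distributed backend then initializes on Windows is a separate question.

```python
import os

# Variables DeepSpeed's init_distributed() checks before falling back to MPI discovery.
SINGLE_PROCESS_DIST_ENV = {
    "RANK": "0",
    "LOCAL_RANK": "0",
    "WORLD_SIZE": "1",
    "MASTER_ADDR": "127.0.0.1",
    "MASTER_PORT": "29500",  # assumption: any free local port should work
}


def set_single_process_dist_env(environ=os.environ):
    """Hypothetical helper: fill in single-process launcher variables.

    Uses setdefault, so values already exported by a real launcher are kept.
    Returns the resulting values for the five variables.
    """
    for key, value in SINGLE_PROCESS_DIST_ENV.items():
        environ.setdefault(key, value)
    return {key: environ[key] for key in SINGLE_PROCESS_DIST_ENV}
```

Using `setdefault` rather than plain assignment means the helper is a no-op when the script is started through the `deepspeed` launcher, which exports these variables itself.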

(lmflow) PS E:\LMFlow-main\LMFlow-main> python ./scripts/convert_llama_weights_to_hf.py --input_dir .\output_models\llama_7b_lora\ --model_size 7B --output_dir .\output_models\llama-7b-hf\ Traceback (most recent call last): File "E:\LMFlow-main\LMFlow-main\scripts\convert_llama_weights_to_hf.py", line 279, in main() File "E:\LMFlow-main\LMFlow-main\scripts\convert_llama_weights_to_hf.py", line 267, in main write_model( File...
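The command above points `--input_dir` at `output_models\llama_7b_lora`. Assuming LMFlow's `scripts/convert_llama_weights_to_hf.py` follows the Hugging Face `transformers` conversion script of that period, it reads the original Meta LLaMA checkpoint layout (`tokenizer.model` at the top level, `params.json` and `consolidated.00.pth` under a `7B` subfolder), so a LoRA fine-tuning output directory may not contain what it expects. A hypothetical pre-flight check, sketched under that assumption:

```python
from pathlib import Path


def missing_llama_files(input_dir, model_size="7B"):
    """Hypothetical pre-flight check before running convert_llama_weights_to_hf.py.

    Assumes the original Meta LLaMA checkpoint layout:
      <input_dir>/tokenizer.model
      <input_dir>/<model_size>/params.json
      <input_dir>/<model_size>/consolidated.00.pth  (first, or only, shard)
    Returns the expected paths that are missing, as strings.
    """
    root = Path(input_dir)
    expected = [
        root / "tokenizer.model",
        root / model_size / "params.json",
        root / model_size / "consolidated.00.pth",
    ]
    return [str(path) for path in expected if not path.exists()]
```

For example, a non-empty result from `missing_llama_files(r".\output_models\llama_7b_lora", "7B")` would suggest the directory is not in the layout the conversion script reads.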

I have completed all the installation steps, but it doesn't work! See below:

When I run run_finetune.py, the following error occurs; the screen output is shown in the pictures below.

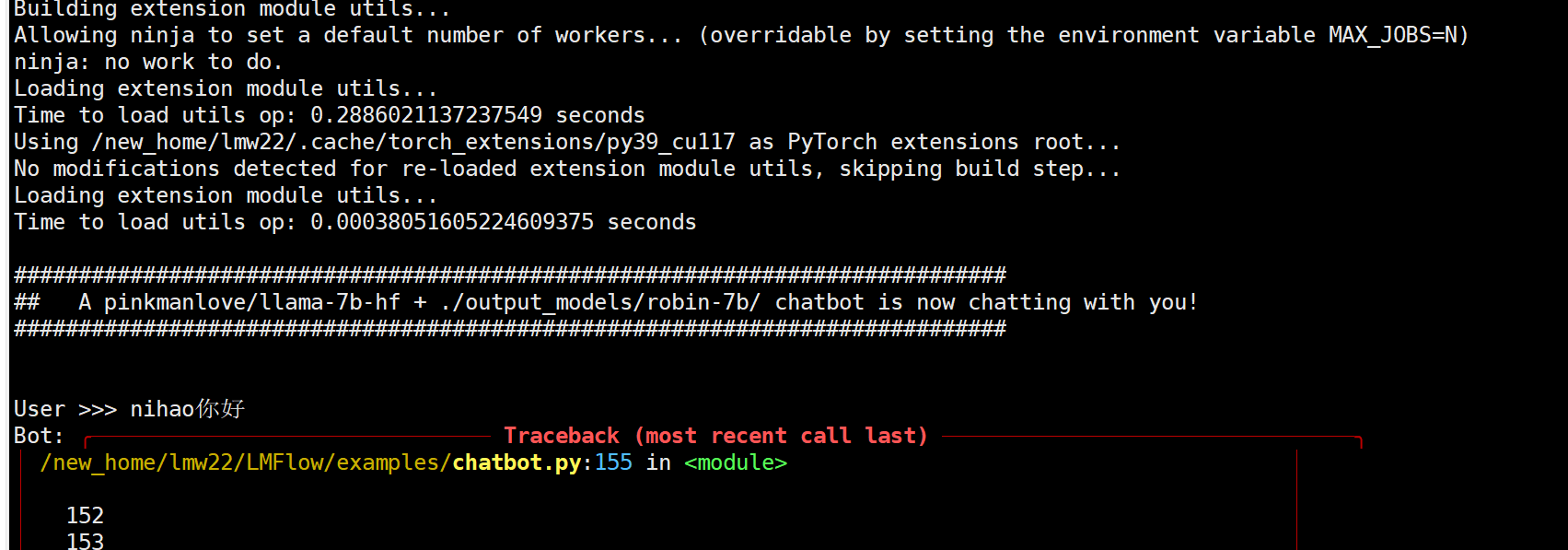

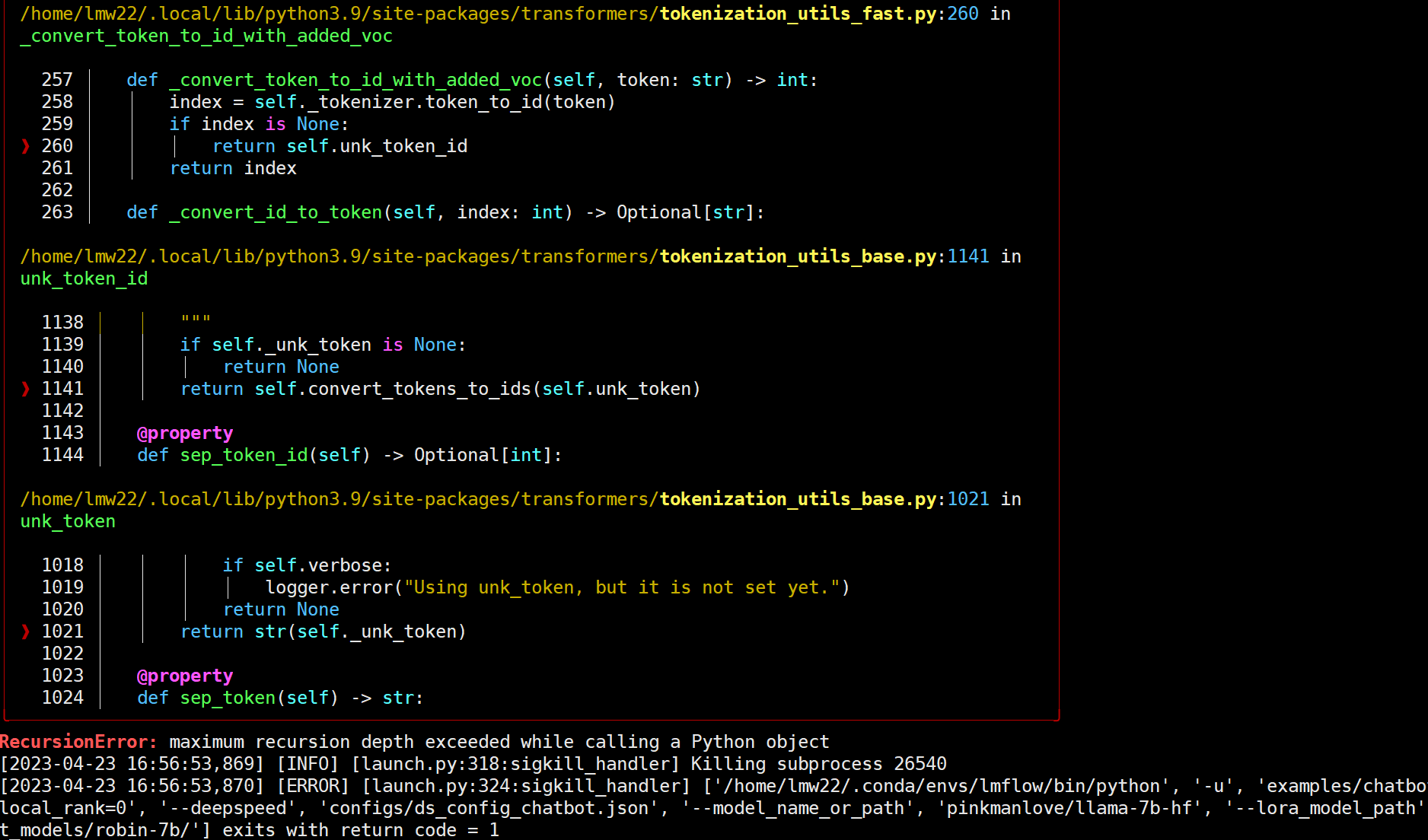

Hi, dear expert @shizhediao, I would like to reproduce Robin-7B on my machine. I need to use `--model_name_or_path pinkmanlove/llama-7b-hf` in `run_finetune_with_lora.sh` and run `./scripts/run_finetune_with_lora.sh`, right? Any extra dataset and parameters...

I've fine-tuned the **llama-7b** model with the following command:

```
#!/bin/bash
deepspeed_args="--num_gpus=8 --master_port=11000"
exp_id=llama-7b-v2
project_dir=XXXX
base_model_path=${project_dir}/models/pinkmanlove/llama-7b-hf
lora_model_path=${project_dir}/models/llama7b-lora-380k
output_dir=${project_dir}/output_models/${exp_id}
log_dir=${project_dir}/log/${exp_id}
dataset_path=${project_dir}/dataset/train_2M_CN/lmflow/
mkdir -p ${output_dir} ${log_dir}
deepspeed ${deepspeed_args} \
  finetune.py \
  --model_name_or_path ${base_model_path} \
  --lora_model_path...
```

Hi, master. How can I fine-tune a LoRA model on top of another LoRA model (in other words, how do I continue pretraining on top of an existing LoRA model)?
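One common way to train a LoRA model on top of another LoRA model is to fold the first adapter into the base weights and then train a fresh adapter on the merged model. The sketch below shows only the arithmetic, assuming the standard LoRA parameterization W' = W + (alpha / r) * B @ A (the same `lora_alpha / r` scaling Hugging Face PEFT uses; on a real model, PEFT's `merge_and_unload()` performs this merge). The helper names `matmul` and `merge_lora` are hypothetical, and this is not a description of how LMFlow's `--lora_model_path` option behaves.

```python
def matmul(a, b):
    """Multiply matrices stored as lists of rows: (m x k) @ (k x n) -> (m x n)."""
    inner = len(b)
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")
    cols = len(b[0])
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(len(a))
    ]


def merge_lora(w, lora_b, lora_a, alpha, r):
    """Fold one LoRA adapter into a weight matrix: W + (alpha / r) * B @ A.

    w is d_out x d_in, lora_b is d_out x r, lora_a is r x d_in.
    """
    scale = alpha / r
    delta = matmul(lora_b, lora_a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


# Stacking two adapters: merge the first, then train and merge a second one.
# w1 = merge_lora(w0, b1, a1, alpha, r)   # first LoRA folded into the base weights
# w2 = merge_lora(w1, b2, a2, alpha, r)   # second LoRA, trained with w1 frozen
```

Merging first keeps the second training run simple: the model sees a single dense weight per layer, and only the new low-rank matrices B2 and A2 are trainable.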