Error when running evaluation with LoRA

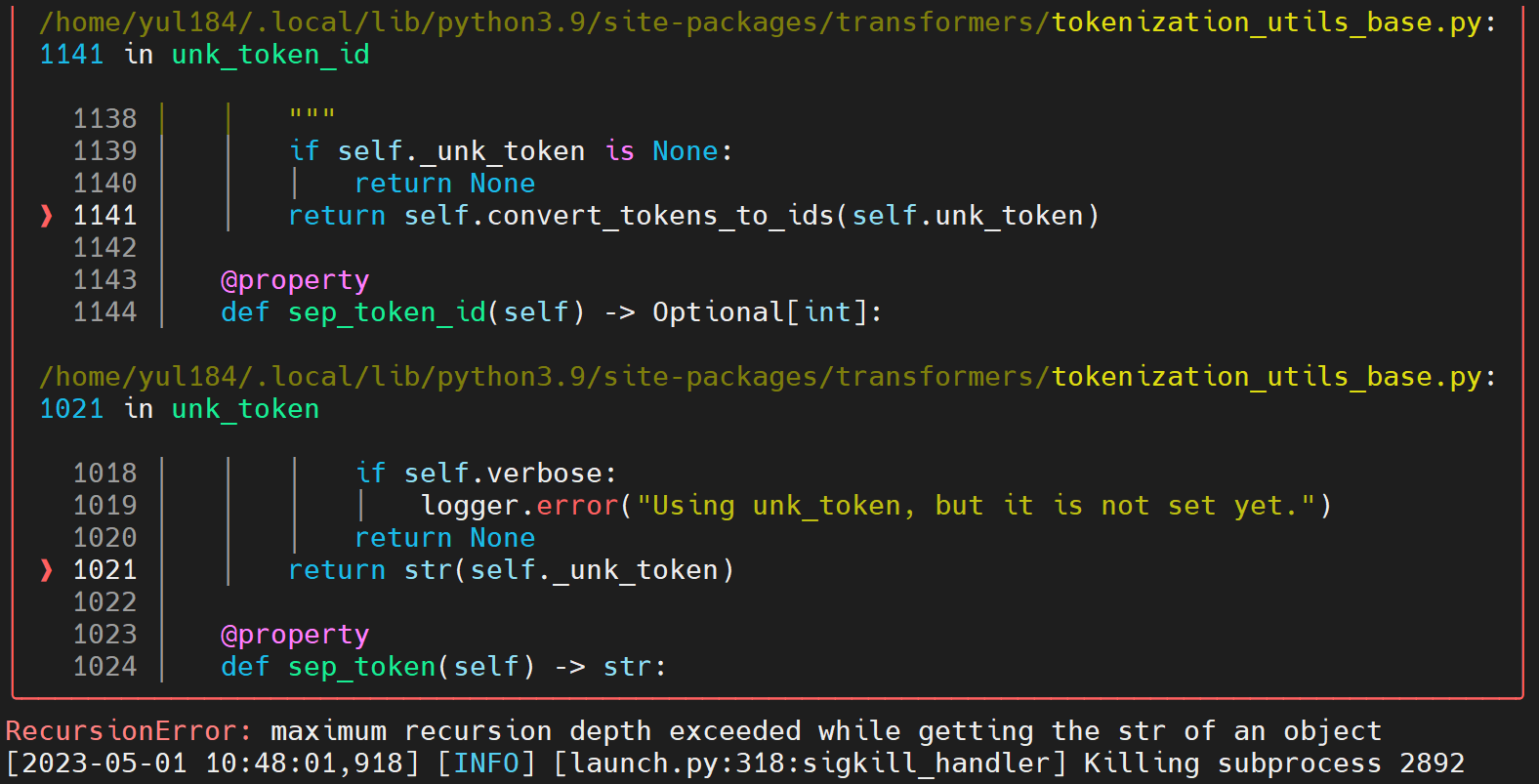

I ran the evaluation with my LoRA fine-tuned model on the PubMedQA/test dataset and got the following error: ` --------Begin Evaluator Arguments---------- model_args : ModelArguments(model_name_or_path='decapoda-research-llama-7b-hf', lora_model_path='output_models/finetune_with_lora', model_type=None, config_overrides=None, config_name=None, tokenizer_name=None, cache_dir=None, use_fast_tokenizer=True, model_revision='main', use_auth_token=False, torch_dtype=None, use_lora=False, lora_r=8, lora_alpha=32, lora_dropout=0.1, use_ram_optimized_load=True) data_args : DatasetArguments(dataset_path='data/PubMedQA/test', dataset_name='customized', is_custom_dataset=False, customized_cache_dir='.cache/llm-ft/datasets', dataset_config_name=None, train_file=None, validation_file=None, max_train_samples=None, max_eval_samples=10000000000.0, streaming=False, block_size=None, overwrite_cache=False, validation_split_percentage=5, preprocessing_num_workers=None, disable_group_texts=False, keep_linebreaks=True, test_file=None) evaluator_args : EvaluatorArguments(local_rank=0, random_shuffle=False, use_wandb=False, random_seed=1, output_dir='./output_dir', mixed_precision='bf16', deepspeed='examples/ds_config.json', answer_type='binary_choice', prompt_structure='Input: {input}') --------End Evaluator Arguments---------- model_hidden_size = 4096 Successfully create dataloader with size 1000. ╭─────────────────────────── Traceback (most recent call last) ─.... ───────────────────────────────────────╯

RecursionError: maximum recursion depth exceeded while getting the str of an object `

Thank you!

I appreciate any help!

Please refer to this: https://github.com/OptimalScale/LMFlow/issues/191

I tried that, but I still get the same error :( @shizhediao

That's strange. Could you check the installation again, or try reinstalling the lmflow package?
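One quick way to check the installation is to ask the active Python interpreter which version of the package it sees. The snippet below is only a sketch: it assumes the distribution is registered under the name `lmflow` (taken from the package name mentioned above, not verified), and `installed_version` is a hypothetical helper, not part of LMFlow.

```python
import sys
from importlib import metadata


def installed_version(package_name):
    """Return the installed version string, or None if the package is not found."""
    try:
        return metadata.version(package_name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Shows which interpreter is running, in case the package was
    # installed into a different environment than the one in use.
    print("Interpreter:", sys.executable)
    # "lmflow" is an assumed distribution name; adjust if it differs.
    print("lmflow version:", installed_version("lmflow"))
```

If this prints `None`, the package is not visible to the interpreter that runs the evaluation script, which would suggest reinstalling it in that environment.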

This issue has been marked as stale because it has not had recent activity. If you think this still needs to be addressed, please feel free to reopen this issue. Thanks.