LaaZa


Okay, it appears to be trying to load a normal model instead of a GGML one because the file isn't named the way textgen expects. Rename `Pygmalion-7b-4bit-Q4_1-GGML.bin` to something like `ggml-Pygmalion-7b-4bit-Q4_1.bin`.
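A minimal Python sketch of that rename, assuming textgen only treats the file as GGML when its name carries a lowercase `ggml` tag (as the rename above suggests). `ggml_filename` and `rename_for_textgen` are hypothetical helpers for illustration, not part of textgen. Note that it renames the `.bin` file itself and leaves its folder alone.

```python
import re
from pathlib import Path


def ggml_filename(name: str) -> str:
    """Return a filename with the GGML tag moved to a lowercase 'ggml-' prefix.

    Hypothetical helper: strips any existing 'ggml' tag (any case, plus one
    preceding '-' or '_'), then prepends 'ggml-' and keeps the extension.
    """
    path = Path(name)
    stem = re.sub(r"[-_]?ggml", "", path.stem, flags=re.IGNORECASE).strip("-_")
    return f"ggml-{stem}{path.suffix}"


def rename_for_textgen(model_file: Path) -> Path:
    """Rename the .bin file in place (not its folder) and return the new path."""
    target = model_file.with_name(ggml_filename(model_file.name))
    if target != model_file:
        model_file.rename(target)
    return target


if __name__ == "__main__":
    print(ggml_filename("Pygmalion-7b-4bit-Q4_1-GGML.bin"))
    # → ggml-Pygmalion-7b-4bit-Q4_1.bin
```

Running `ggml_filename` on an already renamed file returns the same name, so it is safe to apply twice.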

You changed the folder name and not the .bin file?

Honestly, try to use a GGML model that runs on the CPU instead. With 4 GB it's just hopeless to run anything on the GPU. You can technically split the model...
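For the split, llama-cpp-python's `Llama` class takes an `n_gpu_layers` argument that offloads that many layers to the GPU and keeps the rest on the CPU. Below is a rough sketch of picking that number, assuming every layer is about the same size and that a fixed chunk of VRAM has to stay free for the context and scratch buffers. `layers_that_fit` is a hypothetical helper, and the file size and reserve in the example are made-up illustration values, not measurements.

```python
def layers_that_fit(model_bytes: int, n_layers: int, vram_bytes: int, reserve_bytes: int) -> int:
    """Estimate how many layers can be offloaded to the GPU.

    Assumes layers are equally sized (model_bytes / n_layers) and that
    reserve_bytes of VRAM must stay free for the context and scratch buffers.
    """
    per_layer = model_bytes // n_layers
    usable = max(0, vram_bytes - reserve_bytes)
    return min(n_layers, usable // per_layer)


if __name__ == "__main__":
    GB = 1_000_000_000
    # Illustrative numbers only: a ~4 GB file with 32 layers (LLaMA-7B has 32)
    # on a 4 GB card, keeping 1 GB in reserve.
    n = layers_that_fit(4 * GB, 32, 4 * GB, 1 * GB)
    print(n)  # → 24
    # With llama-cpp-python installed, the estimate would be passed like this
    # (the model path is a placeholder):
    # from llama_cpp import Llama
    # llm = Llama(model_path="ggml-model.bin", n_gpu_layers=n)
```

In practice you would start below the estimate and raise it until you run out of VRAM, since the real per-layer size and overhead vary.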

Look for models here: [Hugging Face models](https://huggingface.co/models?pipeline_tag=text-generation&sort=downloads&search=ggml)

@oranda It doesn't sound like you are using textgen, so this isn't really the place for it. But either way, if you are using llama-cpp-python, try...
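The search link above is just the Hugging Face models page with three query parameters (`pipeline_tag`, `sort`, `search`). A small sketch that rebuilds it for other search terms; `hf_model_search_url` is a hypothetical helper, and only the parameters already present in the link are used.

```python
from urllib.parse import urlencode


def hf_model_search_url(query: str, task: str = "text-generation", sort: str = "downloads") -> str:
    """Build a Hugging Face model-search URL filtered by task and sorted by a field."""
    params = {"pipeline_tag": task, "sort": sort, "search": query}
    return "https://huggingface.co/models?" + urlencode(params)


if __name__ == "__main__":
    print(hf_model_search_url("ggml"))
    # → https://huggingface.co/models?pipeline_tag=text-generation&sort=downloads&search=ggml
```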

Correct me if I'm wrong, but doesn't textgen specifically use llama.cpp via llama-cpp-python, which is meant for LLaMA models? Maybe it would be useful to be able to change this value anyway...

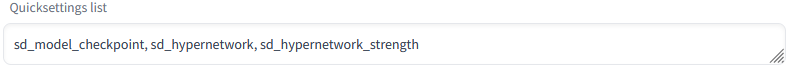

Go to the Settings tab > User interface > Quicksettings list and change it to this list: `sd_model_checkpoint, sd_hypernetwork, sd_hypernetwork_strength`. You can add other settings separated by commas, and you can find...
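The quicksettings field is a comma-separated list of setting names, so adding one means appending another name after a comma. A sketch of that, assuming the UI trims whitespace around each name; `parse_quicksettings` and `add_quicksetting` are hypothetical helpers, not part of the web UI.

```python
from typing import List


def parse_quicksettings(value: str) -> List[str]:
    """Split a comma-separated quicksettings string into setting names."""
    return [name.strip() for name in value.split(",") if name.strip()]


def add_quicksetting(value: str, name: str) -> str:
    """Append a setting name if it is not already listed; return the new string."""
    names = parse_quicksettings(value)
    if name not in names:
        names.append(name)
    return ", ".join(names)


if __name__ == "__main__":
    value = "sd_model_checkpoint"
    for extra in ("sd_hypernetwork", "sd_hypernetwork_strength"):
        value = add_quicksetting(value, extra)
    print(value)
    # → sd_model_checkpoint, sd_hypernetwork, sd_hypernetwork_strength
```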

@Shake128 Does it look exactly like this? For me, the Apply and Restart buttons seem to stop working after using the restart from the UI. Make sure when you...

If possible, please test ^

You should probably use CUDA 12 and a modern version of PyTorch, but I think originally CUDA 12 was not in your PATH, so it found an old version. Make...

Yes, they need to match, but Python also needs to find the right version, so it has to be in your PATH once it's installed.
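A quick way to see which CUDA folders are on PATH and in what order: when several are listed, the earliest one wins a PATH lookup (for example when resolving `nvcc`), which is how an old install can shadow a newer one. This is a sketch; the folder patterns it matches (`...\CUDA\v12.1\bin` on Windows, `/usr/local/cuda-12.1/bin` on Linux) are assumptions about typical install locations, and the helper names are hypothetical. The optional PyTorch check uses `torch.version.cuda` and `torch.cuda.is_available()`.

```python
import os
import re
from typing import List, Optional

# Matches CUDA install folders such as "...\CUDA\v12.1\bin" (Windows)
# or "/usr/local/cuda-12.1/bin" (Linux). Assumed typical layouts.
CUDA_DIR = re.compile(r"cuda[\\/-]v?(\d+\.\d+)", re.IGNORECASE)


def cuda_versions_on_path(path_value: str, sep: str = os.pathsep) -> List[str]:
    """Return CUDA versions in the order their folders appear on PATH."""
    versions: List[str] = []
    for entry in path_value.split(sep):
        match = CUDA_DIR.search(entry)
        if match and match.group(1) not in versions:
            versions.append(match.group(1))
    return versions


def first_cuda_on_path(path_value: str, sep: str = os.pathsep) -> Optional[str]:
    """The earliest PATH entry wins a lookup, so this is the version that gets found."""
    versions = cuda_versions_on_path(path_value, sep)
    return versions[0] if versions else None


if __name__ == "__main__":
    print("CUDA versions on PATH:", cuda_versions_on_path(os.environ.get("PATH", "")))
    try:
        import torch  # optional: only reports if PyTorch is installed

        print("PyTorch built for CUDA:", torch.version.cuda)
        print("CUDA available:", torch.cuda.is_available())
    except ImportError:
        print("PyTorch is not installed in this environment")
```

If the first version listed is older than the one PyTorch was built for, moving the newer CUDA folder ahead of it in PATH is the usual fix.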