pytorch-metric-learning

The easiest way to use deep metric learning in your application. Modular, flexible, and extensible. Written in PyTorch.

The docs should include an explanation along the following lines, plus a short note per loss function: if you pass pairs into a triplet loss, then triplets will be formed...
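As a rough illustration of what "triplets will be formed" from pairs could mean, here is a minimal sketch that joins positive and negative pairs sharing the same anchor. The function name `pairs_to_triplets` and the use of plain index lists are assumptions made for this example; it is not the library's own conversion routine, which may behave differently.

```python
from collections import defaultdict


def pairs_to_triplets(pos_pairs, neg_pairs):
    """Hypothetical helper: join pairs that share an anchor index.

    pos_pairs: list of (anchor, positive) index pairs
    neg_pairs: list of (anchor, negative) index pairs
    Returns a list of (anchor, positive, negative) index triplets.
    """
    # Group negatives by anchor so each positive pair can look them up.
    negatives_by_anchor = defaultdict(list)
    for anchor, negative in neg_pairs:
        negatives_by_anchor[anchor].append(negative)

    triplets = []
    for anchor, positive in pos_pairs:
        # Every negative of the same anchor yields one triplet.
        for negative in negatives_by_anchor.get(anchor, []):
            triplets.append((anchor, positive, negative))
    return triplets


pos_pairs = [(0, 1), (2, 3)]
neg_pairs = [(0, 2), (0, 3), (2, 0)]
triplets = pairs_to_triplets(pos_pairs, neg_pairs)
```

Under this joining rule, a positive pair whose anchor has no mined negative produces no triplet, which is one of the behaviors a per-loss note in the docs would need to spell out.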

One option could involve overriding ```__getattribute__()```

See #192. For other people who encounter performance problems, perhaps a 'performance optimization' section can be added to the docs that describes first using a miner of [type 2](https://github.com/KevinMusgrave/pytorch-metric-learning/issues/192#issuecomment-689814355) and...

If the number of mined pairs or triplets is small, then it doesn't make sense to compute all pairwise distances in the batch using ```compute_mat```.
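To make the cost difference concrete, here is a small sketch that contrasts a full N x N distance matrix with distances computed only for the mined index pairs. It uses plain Python tuples in place of tensors, and both function names are hypothetical, chosen for illustration only.

```python
import math


def full_distance_matrix(embeddings):
    """Compute all N x N pairwise Euclidean distances (N * N evaluations)."""
    return [[math.dist(a, b) for b in embeddings] for a in embeddings]


def distances_for_pairs(embeddings, pairs):
    """Compute distances only for the given (i, j) index pairs."""
    return [math.dist(embeddings[i], embeddings[j]) for i, j in pairs]


embeddings = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0), (0.0, 1.0)]
mined_pairs = [(0, 1), (1, 2)]  # assumed output of some miner

# Two distance evaluations instead of sixteen.
pair_distances = distances_for_pairs(embeddings, mined_pairs)
matrix = full_distance_matrix(embeddings)
```

The per-pair path does work proportional to the number of mined pairs, while the matrix path always does work proportional to the square of the batch size, so the gap grows quickly with larger batches.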

This would allow users to limit the number of pairs/triplets returned by a miner. The ```triplets_per_anchor``` flag would be removed from TripletMarginLoss and MarginLoss. See #192 for related discussion.
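One way such a limit might work is random subsampling of the miner's output. The sketch below assumes the mined triplets are available as a list of index tuples; `limit_tuples`, `max_count`, and `seed` are hypothetical names for this example and do not come from the library.

```python
import random


def limit_tuples(indices, max_count, seed=None):
    """Hypothetical helper: randomly keep at most max_count pairs or triplets."""
    if len(indices) <= max_count:
        # Nothing to trim; return a copy so the caller's list is untouched.
        return list(indices)
    rng = random.Random(seed)
    # Sample without replacement so no tuple is repeated.
    return rng.sample(indices, max_count)


# Assumed miner output: (anchor, positive, negative) index triplets.
mined_triplets = [(a, a + 1, a + 2) for a in range(10)]
limited = limit_tuples(mined_triplets, max_count=4, seed=0)
```

Uniform sampling is only one possible policy; a limit could also be applied per anchor, which is closer to what ```triplets_per_anchor``` expresses today.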

BaseMetricLossFunction can split it into a tuple if it's a tensor.

The N-pairs loss paper describes "hard negative class mining", but this is not implemented in this repository. Do you plan to add this mining strategy? I just wonder...

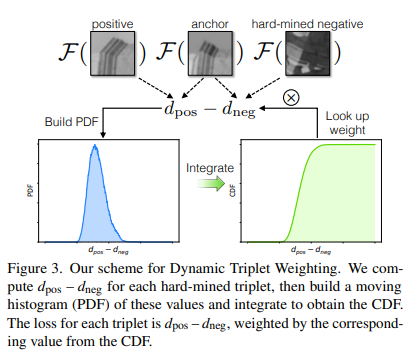

Paper: https://gfx.cs.princeton.edu/pubs/Zhang_2019_LLD/softmargin.pdf

It is implemented here, and it might be possible to borrow it with the author's approval: https://github.com/lg-zhang/dynamic-soft-margin-pytorch/blob/master/modules/dynamic_soft_margin.py#L10

Currently there is the option to reduce the dimensionality of the embeddings using PCA. To allow more flexibility, this should be changed to a "dim reducer" input, which is expected...

Is center loss considered to be a metric learning approach? If you think it can be part of this repo, I am interested in contributing.