pytorch-metric-learning

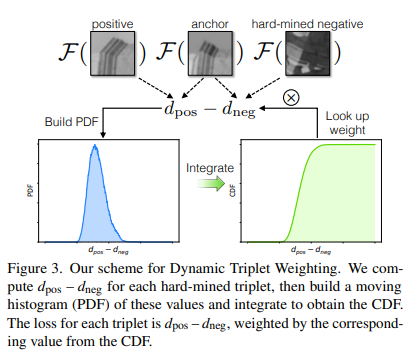

DynamicSoftMarginLoss

https://gfx.cs.princeton.edu/pubs/Zhang_2019_LLD/softmargin.pdf

There is an existing implementation here; it might be possible to borrow it with the author's approval:

https://github.com/lg-zhang/dynamic-soft-margin-pytorch/blob/master/modules/dynamic_soft_margin.py#L10

Hello @KevinMusgrave, which of the issues tagged "new algorithm request" do you think would be the most beneficial to implement?

I'm looking to contribute to some open source in my spare time and I've always been interested in metric learning.

@elanmart Thanks for your interest in contributing!

I think this one (DynamicSoftMarginLoss) or P2SGrad would be good additions. But really, any new additions are appreciated, so if these ones are too much work, feel free to go for something easier and/or something that isn't listed in the issues yet.

Currently, there isn't really any documentation for extending the base loss function. And it also became more complicated after the latest version where I added the reducers module. But for now, if you decide to write a loss function, you can refer to these:

The function compute_loss should return a dictionary. As an initial working version, you can just compute the loss like you normally do, and then return:

return {"loss": {"losses": your_computed_loss, "indices": None, "reduction_type": "already_reduced"}}

Let me know if you have any questions!

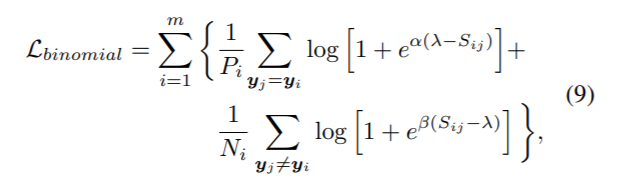

The binomial deviance loss would also be a good addition, and would probably be an easier loss to start with. Here's the equation (taken from the multi-similarity paper):
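As a starting point, here is a plain PyTorch sketch of binomial deviance over all pairs in a batch. It assumes the form as we understand it from the multi-similarity paper: with cosine similarity S_ij, each anchor i contributes the average of log(1 + e^{α(λ − S_ij)}) over its positives plus the average of log(1 + e^{β(S_ij − λ)}) over its negatives, and the loss is the mean over anchors. The function name and the default values of `alpha`, `beta` and `lam` are illustrative assumptions, not values taken from the paper or the library.

```python
import torch
import torch.nn.functional as F


def binomial_deviance_loss(embeddings, labels, alpha=2.0, beta=50.0, lam=0.5):
    """Sketch of binomial deviance; alpha, beta, lam defaults are assumptions."""
    x = F.normalize(embeddings, dim=1)
    sim = x @ x.t()  # cosine similarity S_ij
    n = labels.shape[0]
    same = labels.unsqueeze(1) == labels.unsqueeze(0)
    eye = torch.eye(n, dtype=torch.bool, device=embeddings.device)
    pos_mask = same & ~eye  # positive pairs, excluding (i, i)
    neg_mask = ~same

    # softplus(z) = log(1 + e^z)
    pos_terms = F.softplus(alpha * (lam - sim)) * pos_mask
    neg_terms = F.softplus(beta * (sim - lam)) * neg_mask

    # Per-anchor averages; clamp avoids division by zero for anchors
    # that have no positives or no negatives in the batch.
    pos_avg = pos_terms.sum(dim=1) / pos_mask.sum(dim=1).clamp(min=1)
    neg_avg = neg_terms.sum(dim=1) / neg_mask.sum(dim=1).clamp(min=1)
    return (pos_avg + neg_avg).mean()


emb = torch.tensor([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]])
print(binomial_deviance_loss(emb, torch.tensor([0, 0, 1, 1])))
```

With perfectly separated embeddings like the ones above, the negative term is close to zero and the loss is dominated by log(1 + e^{-1}) ≈ 0.313 from the positives. Wrapped in a `BaseMetricLossFunction` subclass, the same computation could be returned with `"reduction_type": "already_reduced"` as described earlier.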

Added by @domenicoMuscill0 in v2.4.0:

pip install pytorch-metric-learning==2.4.0

https://kevinmusgrave.github.io/pytorch-metric-learning/losses/#dynamicsoftmarginloss