Harris.Chu

```
(root@nebula) [sf100]> return datetime("1659602415")
+------------------------------+
| datetime("1659602415")       |
+------------------------------+
| -31249-01-01T00:00:00.000000 |
+------------------------------+
Got 1 rows (time spent 298/12422 us)

Thu, 04 Aug 2022 16:52:09 CST
```

we support...

```
StatusOr MetaClient::getStorageLeaderFromCache(GraphSpaceID spaceId, PartitionID partId) {
  if (!ready_) {
    return Status::Error("Not ready!");
  }
  {
    folly::RWSpinLock::ReadHolder holder(leadersLock_);
    auto iter = leadersInfo_.leaderMap_.find({spaceId, partId});
    if (iter != leadersInfo_.leaderMap_.end()) {
      return iter->second;
    }
...
```

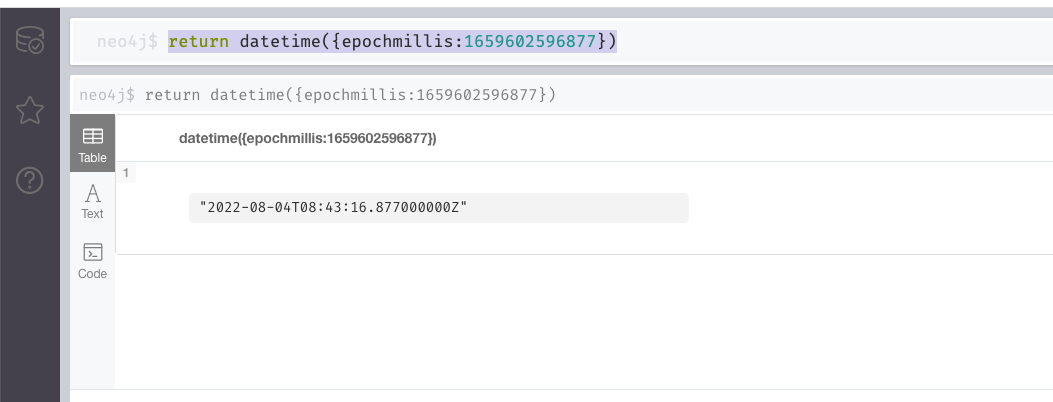

Neo4j can build a datetime from epoch milliseconds: ``` return datetime({epochmillis:1659602596877}) ``` but the same value cannot be converted in Nebula.
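For reference, the two epoch values quoted above can be decoded outside the database. The following is a minimal Python sketch, not Nebula or Neo4j code; the helper names `from_epoch_seconds` and `from_epoch_millis` are hypothetical and only illustrate the result one would expect from an epoch-aware `datetime()`.

```python
from datetime import datetime, timedelta, timezone


def from_epoch_seconds(value):
    """Interpret an integer (or numeric string) as seconds since 1970-01-01 UTC."""
    return datetime.fromtimestamp(int(value), tz=timezone.utc)


def from_epoch_millis(value):
    """Interpret an integer (or numeric string) as milliseconds since 1970-01-01 UTC."""
    seconds, millis = divmod(int(value), 1000)
    # Add the millisecond remainder separately to avoid float rounding.
    return datetime.fromtimestamp(seconds, tz=timezone.utc) + timedelta(milliseconds=millis)


# The values from the two queries above.
print(from_epoch_seconds("1659602415").isoformat())
print(from_epoch_millis(1659602596877).isoformat())
```

The first value decodes to 2022-08-04T08:40:15 UTC, which is 16:40:15 in CST (UTC+8) and so a few minutes before the shell timestamp shown in the query output.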

Could we add more storage elapsed time to the profile output? e.g.

* elapsed time for IO
* elapsed time for decode
* elapsed time for other logic
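The breakdown requested above could be collected by accumulating elapsed time per named stage. The sketch below is in Python purely for illustration and does not reflect how storaged is implemented; `StageTimer` and the stage names are assumptions.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class StageTimer:
    """Accumulates elapsed microseconds per named stage (hypothetical helper)."""

    def __init__(self):
        self.elapsed_us = defaultdict(int)

    @contextmanager
    def stage(self, name):
        start = time.perf_counter_ns()
        try:
            yield
        finally:
            # Repeated entries into the same stage add up.
            self.elapsed_us[name] += (time.perf_counter_ns() - start) // 1000

    def report(self):
        return dict(self.elapsed_us)


timer = StageTimer()
with timer.stage("io"):
    time.sleep(0.01)  # stand-in for reading from disk
with timer.stage("decode"):
    sum(range(10_000))  # stand-in for decoding rows
print(timer.report())
```

A profile entry could then report one number per stage instead of a single total.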

In db_upgrader, when we upgrade from v1 to v2:

1. read from src_db_path, and then write to dst_db_path

But when we upgrade from v2 to v3:

1. rewrite to src_db_path...

show the importer version e.g.:

We could define a version environment variable and use it to launch different versions, if they are compatible: ``` NEBULA_VERSION=v3.0.1 docker-compose up -d ```

## Issue 1

Generally there are three types of metrics:

* latency
* success count
* error count

But not all metrics come in these three types, e.g. graphd:

* `num_sort_executors`...

1. Use a log interface.
2. Delete the per-function loggers and use a single shared logger.

To stay consistent, this doesn't change the code in `cmd/importer.go`, and other libraries could change the logger by...

The importer reads all the files concurrently, which causes the issues below:

1. If there are 1000+ files, it reads too much data at once and can OOM.
2. one goroutine reads...
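One possible mitigation for the first issue is to cap how many files are open at once and to stream each file instead of loading it whole. The sketch below shows the idea in Python rather than the importer's Go code; `process_files`, `count_rows` and the `max_concurrent` parameter are hypothetical names, and this is not the approach the importer necessarily takes.

```python
from concurrent.futures import ThreadPoolExecutor


def count_rows(path):
    """Stream one file line by line so the whole file is never held in memory."""
    rows = 0
    with open(path, "r", encoding="utf-8") as f:
        for _ in f:
            rows += 1
    return rows


def process_files(paths, max_concurrent=4):
    """Process files with at most `max_concurrent` of them being read at a time."""
    paths = list(paths)
    with ThreadPoolExecutor(max_workers=max_concurrent) as pool:
        # pool.map preserves input order, so results line up with paths.
        return dict(zip(paths, pool.map(count_rows, paths)))
```

With 1000+ input files, memory use then scales with `max_concurrent` rather than with the number of files.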