ProGamerGov

Here are the stats for using CUDA 9.0, cuDNN v7 with the faster feval code: Neural-Style-PT (one `backward()`) with CUDA 9.0, cuDNN v7, and PyTorch v0.3.1: * `-backend nn -optimizer...

My code is now fully compatible with both Python 2 and Python 3. It is also now fully compatible with PyTorch v0.4, in addition to v0.3.1. I have also replaced...

Interestingly, the sum of all the content and style loss values is not equal to the loss variable. If I add up the loss values by doing: ``` def maybe_print(t,...

On the subject of total variation denoising, I think this code replicates the behavior of [Neural-Style's Backward TVLoss](https://github.com/jcjohnson/neural-style/blob/master/neural_style.lua#L585-L593) function: ``` self.gradInput = input.clone().zero_() self.x_diff = input[:,:,:-1,:-1] - input[:,:,:-1,1:] self.y_diff =...
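For anyone who wants to check the arithmetic without Torch, here is a minimal pure-Python sketch of the same gradient pattern for a single channel. The function name `tv_grad` and the nested-list image layout are my own assumptions for illustration, not part of either codebase. It computes the gradient of half the sum of squared horizontal and vertical neighbor differences, scaled by a strength factor.

```python
def tv_grad(img, strength=1.0):
    """Gradient of 0.5 * sum(x_diff**2 + y_diff**2) for one channel.

    img is a list of rows (H x W) of floats. This is a hypothetical
    helper for illustration, not the Lua or PyTorch implementation.
    """
    h, w = len(img), len(img[0])
    grad = [[0.0] * w for _ in range(h)]
    # Only the top-left (H-1) x (W-1) block has both a right and a lower neighbor.
    for y in range(h - 1):
        for x in range(w - 1):
            x_diff = img[y][x] - img[y][x + 1]   # horizontal difference
            y_diff = img[y][x] - img[y + 1][x]   # vertical difference
            grad[y][x] += x_diff + y_diff
            grad[y][x + 1] -= x_diff
            grad[y + 1][x] -= y_diff
    # Scale by the TV strength, as the loss weight would.
    return [[strength * g for g in row] for row in grad]
```

A constant image should give an all-zero gradient, which is a quick sanity check before comparing against the tensor version.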

I created these two functions to replicate the terminal output of loadcaffe and of printing a sequential net in Torch7: ``` # Print like Lua/loadcaffe def print_loadcaffe(cnn, layerList): c = 0...

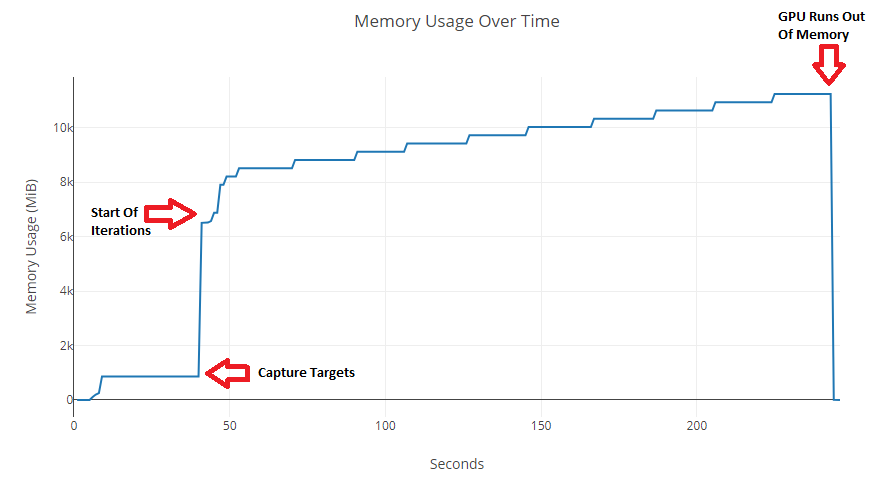

I'm not sure if this is an issue, but while using L-BFGS, the memory usage seems to increase over time (with about 10-15 iterations between each increase): Using `-lbfgs_num_correction...

I rebuilt the style image directory/image sorting function (which lets you specify one or more directories, along with separate images, for the `-style_image` parameter): ``` style_image_input = params.style_image.split(',') style_image_list,...
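As a rough illustration of the idea (not the actual function from my code), a comma-separated value like `params.style_image` could be expanded along these lines. The name `expand_style_inputs` and the extension list are assumptions made for this sketch.

```python
import os

# Assumed set of image extensions; adjust to whatever the loader supports.
IMAGE_EXTENSIONS = ('.jpg', '.jpeg', '.png', '.tiff')

def expand_style_inputs(style_image_arg):
    """Turn 'dir1,img.jpg,dir2' into a flat list of image paths.

    Directories are expanded to their image files in sorted order;
    anything else is passed through unchanged as a file path.
    """
    paths = []
    for entry in style_image_arg.split(','):
        entry = entry.strip()
        if not entry:
            continue  # skip empty items such as a trailing comma
        if os.path.isdir(entry):
            for name in sorted(os.listdir(entry)):
                if name.lower().endswith(IMAGE_EXTENSIONS):
                    paths.append(os.path.join(entry, name))
        else:
            paths.append(entry)
    return paths
```

Sorting the directory listing keeps the order of style images stable between runs, which matters if blend weights are matched to images by position.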

So I discovered that this in-place operation: ``` self.G.div_(input.nelement()) ``` uses a lot more memory compared to its non-in-place counterpart: ``` self.G = self.G.div(input.nelement()) ``` This makes sense according...

In neural_style.lua, I don't think that these [two lines of code](https://github.com/jcjohnson/neural-style/blob/master/neural_style.lua#L536-L537) in the StyleLoss function are needed: ``` if self.blend_weight == nil then self.target:resizeAs(self.G):copy(self.G) ``` These [lines here](https://github.com/jcjohnson/neural-style/blob/master/neural_style.lua#L81-L88)...

@htoyryla Are these lines in the forward content and style loss modules really needed? ``` self.output = input return self.output ``` https://github.com/jcjohnson/neural-style/blob/master/neural_style.lua#L468-L469 https://github.com/jcjohnson/neural-style/blob/master/neural_style.lua#L546-L547 `self.output` seems to just end up...