zhangyuge1

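> Is the org.apache.flink.table.factories.TableSourceFactory class missing? If so, what you need is the Flink JDBC connector package. Download the JDBC connector package that matches your Flink version from the official website. I'm using the Kafka Connector, and the data type is csv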

> you can add `CHARSET = 'utf8mb4'` when creating mysql table. It still doesn't work. String sql = "CREATE TABLE test (\n" + " name varchar(255) NOT NULL,\n" + "...

> If it's a standard JDBC protocol, then we just need a test on it; I think #2321 is OK. If you are familiar with ClickHouse, I would more recommend...

@CalvinKirs Hello, I want to try the source and sink for jdbc. Can you assign them to me?

@CalvinKirs Can you assign me the sink and source of clickhouse? I can do them.

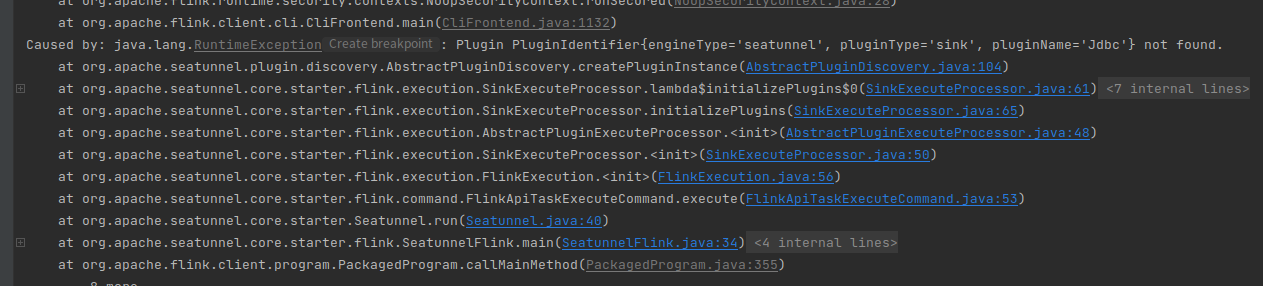

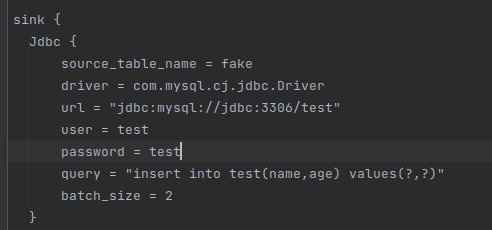

I changed JdbcSink to Jdbc, but it still doesn't work. Also, the e2e test is using the jdbc connector jar package.

@lhyundeadsoul Following your method I encountered a new problem.

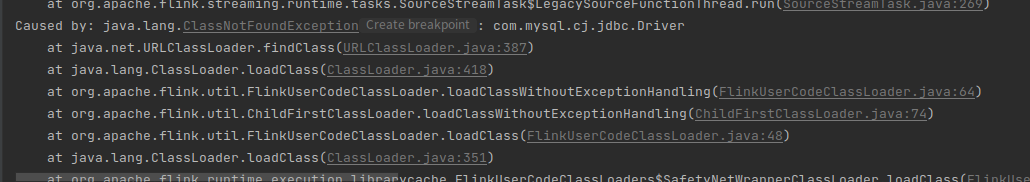

This is probably because the mysql-connector-java dependency is missing? But the test scope has already been deleted.

> > This is probably because there is no mysql-connector-java dependency? But test scope has been deleted.
> > > This is probably because there is no mysql-connector-java dependency? But test scope...