Xiong Ma

@santhoshkolloju When you trained this model, did you use BERT embeddings for the abstract as well? In other words, do both the article and the abstract go through BERT when training the summarization model?

[transformer-generator](https://github.com/policeme/transformer-pointer-generator)

@Kyubyong

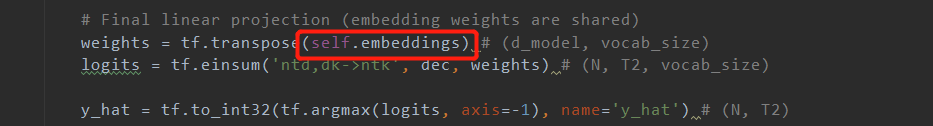

@Kyubyong In this code, why is self.embedding reused instead of defining a new matrix? Is there a specific reason for this?

@Kyubyong The dropout rate in the paper is 0.1, but here it is 0.3. Is there a reason your implementation differs from the paper? From the paper:

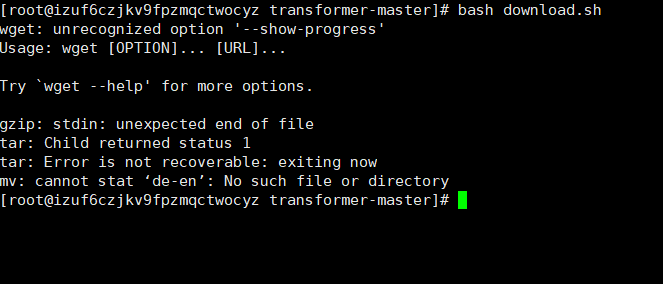

@Kyubyong When I ran download.sh to download the dataset, I got this error, and I couldn't find be.vocab. Can you tell me where it can be found? Thanks.

@Kyubyong I am very confused about the encoder's blocks and the decoder's blocks. If we have one block in the encoder, does that mean we have one encoder? If we have two blocks in...