wzgrx

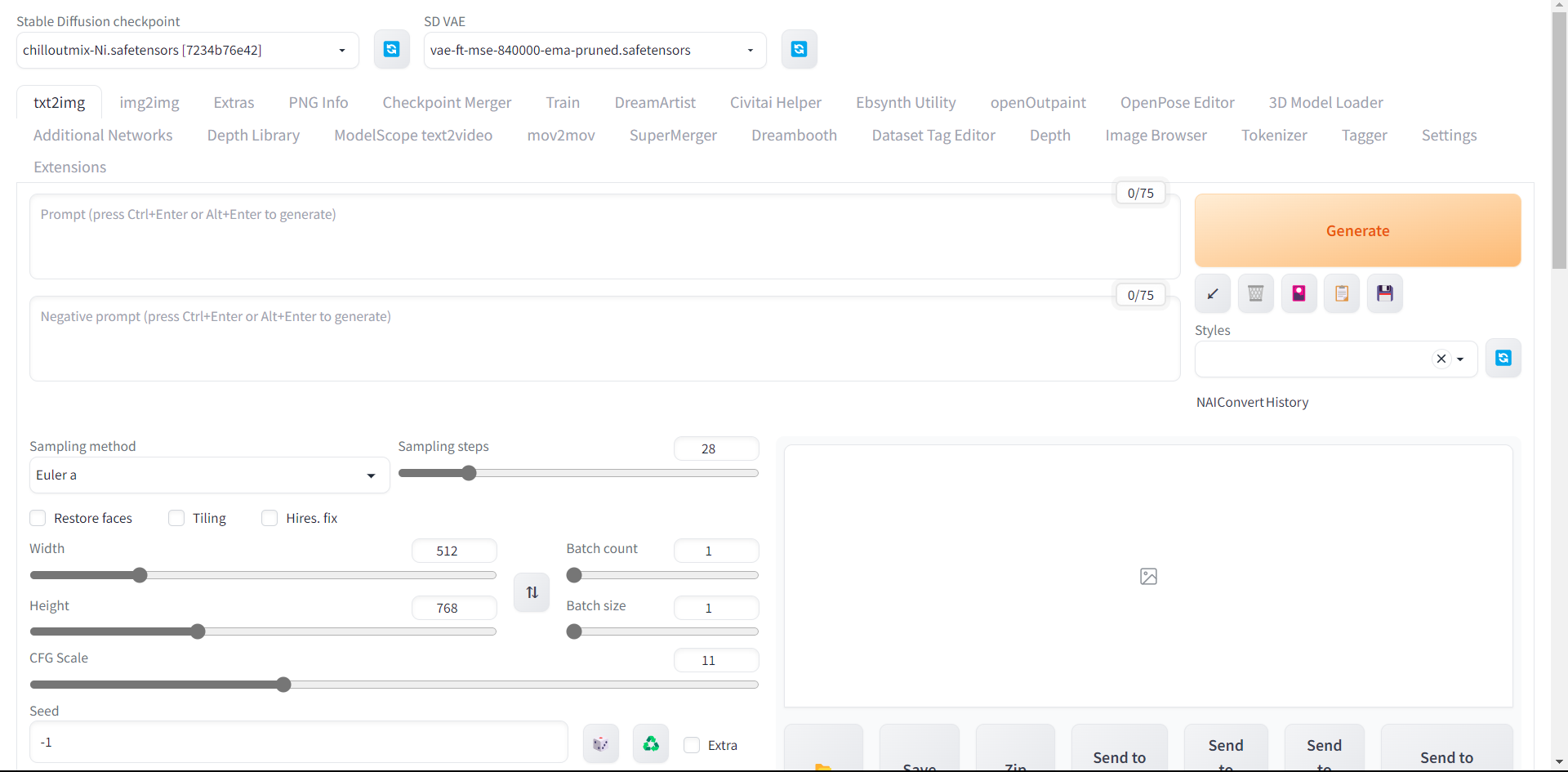

This problem still exists:

```
LocalProtocolError: Can't send data when our state is ERROR
ERROR: Exception in ASGI application
Traceback (most recent call last):
  File "D:\stable-diffusion-webui\venv\lib\site-packages\uvicorn\protocols\http\h11_impl.py", line 428, in run_asgi
    result...
```

https://github.com/ggerganov/llama.cpp/pull/1405

It seems there is a problem with the new model format introduced by llama.cpp.

https://github.com/ggerganov/llama.cpp/issues/1408

It seems there is a problem with the new model format introduced by llama.cpp: the format of quantized models has changed significantly. This is also...

> For those using the one-click installer (Windows): edit the following lines in text-generation-webui/requirements.txt
>
> Old
>
> ```
> llama-cpp-python==0.1.45; platform_system != "Windows"
> https://github.com/abetlen/llama-cpp-python/releases/download/v0.1.45/llama_cpp_python-0.1.45-cp310-cp310-win_amd64.whl; platform_system == "Windows"
> ```
>
> New
>
> ```
> llama-cpp-python==0.1.50; platform_system !=...
> ```
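After editing requirements.txt and reinstalling, it can help to confirm that the environment actually picked up the newer binding before retesting. The following is a minimal sketch, not part of text-generation-webui: the helper names (`parse_version`, `meets_requirement`, `check_installed`) are hypothetical, the 0.1.50 threshold is simply the version from the "New" line above, and the version parsing is deliberately simplified (it keeps only the leading numeric release, so `0.1.50rc1` is treated as `0.1.50`). Run it with the same Python environment that the web UI uses.

```python
import re
from importlib.metadata import PackageNotFoundError, version

# Threshold taken from the "New" requirements line (llama-cpp-python==0.1.50).
REQUIRED = (0, 1, 50)


def parse_version(text: str) -> tuple[int, ...]:
    """Parse the leading 'X.Y.Z' release part of a version string.

    Simplified assumption: suffixes such as '+cu117' or 'rc1' are dropped,
    so pre-releases compare equal to the final release.
    """
    match = re.match(r"(\d+(?:\.\d+)*)", text)
    if match is None:
        raise ValueError(f"unrecognised version string: {text!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def meets_requirement(installed: str, required: tuple[int, ...] = REQUIRED) -> bool:
    # Tuple comparison is numeric per component, so 0.1.100 > 0.1.50
    # (a plain string comparison would get this wrong).
    return parse_version(installed) >= required


def check_installed() -> str:
    """Report whether the installed llama-cpp-python meets REQUIRED."""
    try:
        installed = version("llama-cpp-python")
    except PackageNotFoundError:
        return "llama-cpp-python is not installed in this environment"
    if meets_requirement(installed):
        return f"llama-cpp-python {installed} meets the 0.1.50 requirement"
    return (
        f"llama-cpp-python {installed} is older than 0.1.50; "
        "reinstall from the updated requirements.txt"
    )


if __name__ == "__main__":
    print(check_installed())
```

If the check still reports 0.1.45, the reinstall most likely ran against a different Python environment than the one the UI launches.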

> Do I need to change the UI to access the new GPU acceleration in llama.cpp?

No, there is no need.

If you are using Clash, turn on TUN mode.