Zhenheng TANG

I also find that the current code for the Dirichlet partition method cannot generate balanced client datasets. This may make training harder.
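To illustrate the imbalance, here is a minimal sketch of a label-wise Dirichlet split. It is not the FedML implementation: the function name `dirichlet_partition`, its parameters, and the toy labels are assumptions made up for this example, and it uses only the standard library (a Dirichlet sample is built from normalized Gamma draws).

```python
import random
from collections import defaultdict


def dirichlet_partition(labels, num_clients, alpha, seed=0):
    """Hypothetical helper: split sample indices across clients, class by class,
    with per-class proportions drawn from Dirichlet(alpha)."""
    rng = random.Random(seed)

    # Group sample indices by their label.
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)

    clients = [[] for _ in range(num_clients)]
    for y in sorted(by_class):
        idxs = by_class[y]
        rng.shuffle(idxs)
        # Gamma(alpha, 1) draws normalized to sum to 1 form a Dirichlet(alpha) sample.
        gammas = [rng.gammavariate(alpha, 1.0) for _ in range(num_clients)]
        total = sum(gammas)
        # Convert the proportions into cut points over this class's indices.
        cuts, acc = [], 0.0
        for g in gammas[:-1]:
            acc += g / total
            cuts.append(int(acc * len(idxs)))
        start = 0
        for c, end in enumerate(cuts + [len(idxs)]):
            clients[c].extend(idxs[start:end])
            start = end
    return clients


labels = [i % 10 for i in range(1000)]  # toy data: 10 classes, 100 samples each
parts = dirichlet_partition(labels, num_clients=5, alpha=0.5)
sizes = [len(p) for p in parts]
print(sizes)  # per-client dataset sizes
```

Nothing in this scheme constrains the total number of samples per client, so with a small `alpha` the printed sizes will generally differ from client to client. A balanced variant would need an extra step, such as resampling or capping each client's share.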

> @wizard1203 could you help to check if this improves the performance? Seems it can.

> BytePS is for data center-based distributed training, while FedML (e.g., FedAvg) is edge-based distributed training. The particular assumptions of FL include:
>
> 1. heterogeneous data distribution cross devices...

> FedML supports multiple parameter servers for the communication efficiency via hierarchical FL and decentralized FL.
> In hierarchical FL, there are group parameter servers that split the total...

@chaoyanghe Thanks for your detailed explanation. Maybe I can try to complete it myself, and when I finish I would like to push it to your master branch.

> @wizard1203 Do you mean modifying based on this code?
> https://github.com/FedML-AI/FedML/tree/master/fedml_experiments/distributed/fedavg

@chaoyanghe No, it probably needs to be based on the code in fedml_core. Anyway, I may try to do...

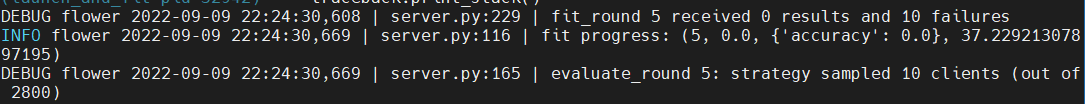

I ran into the same problem when using simulation... There are failures in every round.

I explicitly raise a RuntimeError in the function fit(), but the only output is a message reporting 5 failures, with no error log.

@Mirian-Hipolito Thanks, let me try it.

@AbdulMoqeet @hangxu0304 Hi, could you try again with a smaller number of local epochs, e.g. E=1? A large number of local epochs usually makes training harder to converge.