GpuRamDrive

Does this have an LRU caching layer (in RAM) between VRAM and the disk accesses?

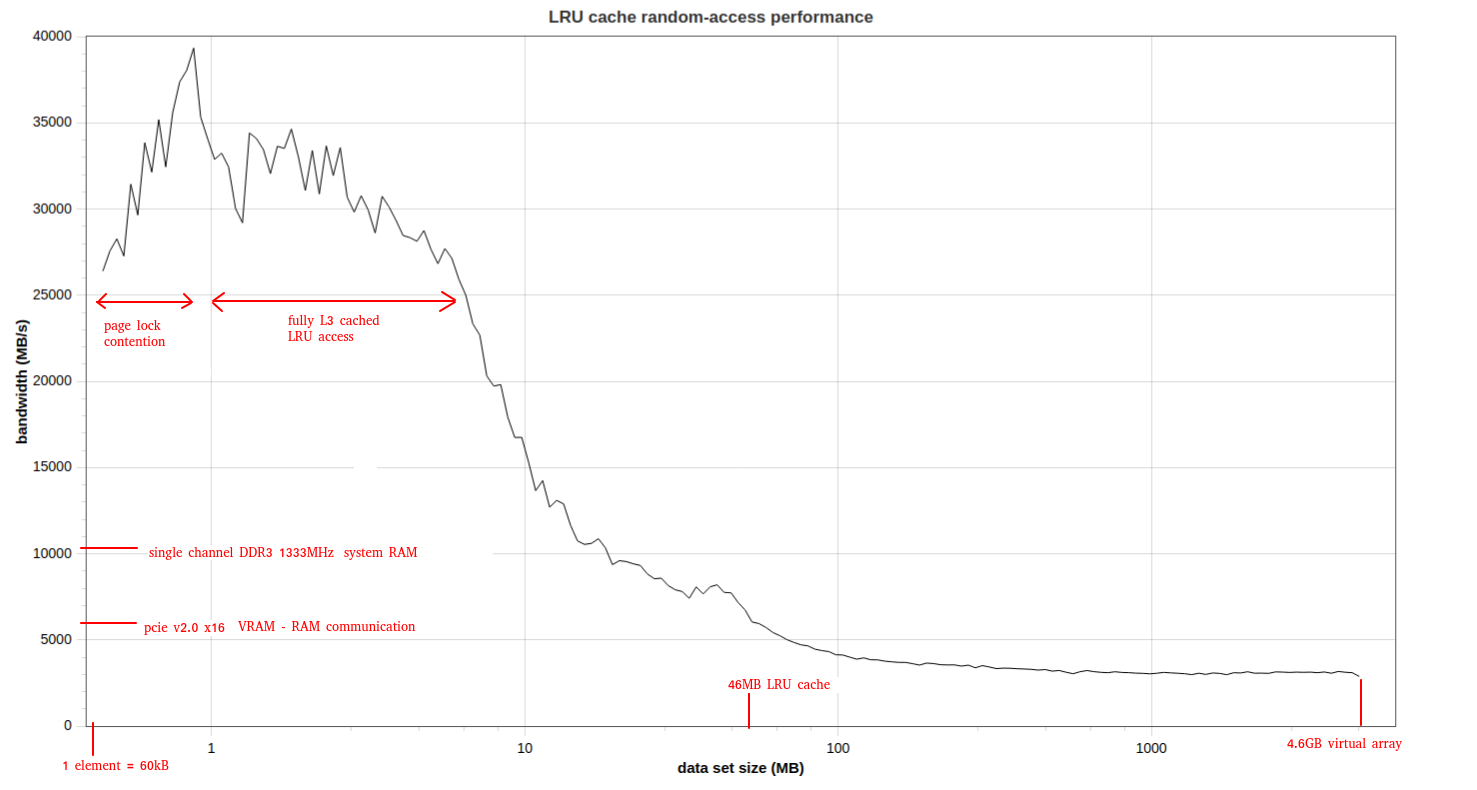

For example, I have an OpenCL-based VRAM virtual-array class that improves performance even for random accesses, even when those accesses are not in large chunks:

https://github.com/tugrul512bit/VirtualMultiArray/wiki/Cache-Hit-Ratio-Benchmark

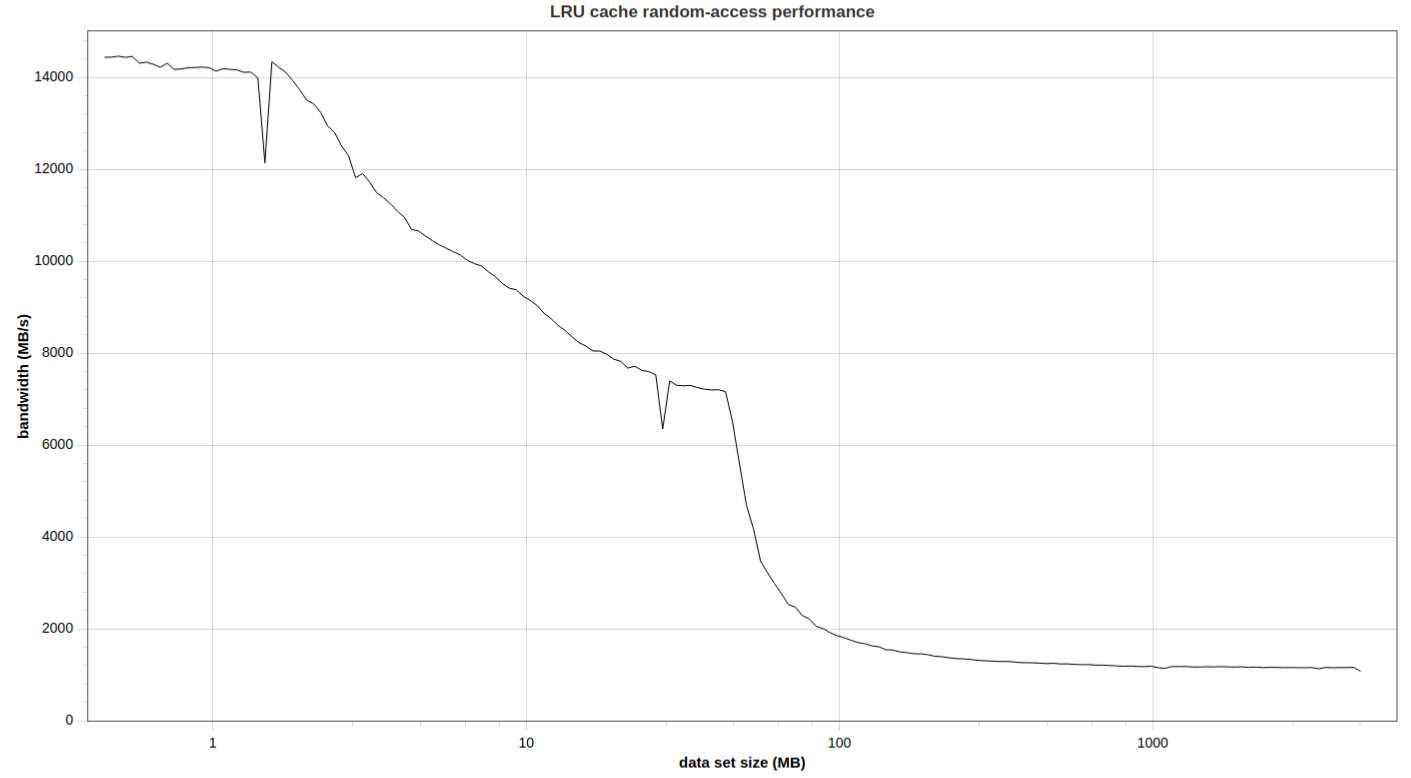
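A rough model shows why cache size dominates random-access results. Suppose accesses are uniformly random over a data set of $N$ pages and an LRU cache holds $C \le N$ of them. The steady-state hit ratio is then $C/N$. This is an idealized estimate. It assumes pages are spread evenly over the GPUs' caches and that accesses are independent. Overlapping or otherwise localized access patterns do better. As an example, take the 7 MB-per-GPU, 32 MB data-set configuration from later in this thread with its three GPUs:

$$
h_{\text{LRU}} \approx \frac{C}{N}, \qquad
\frac{3 \times 7\ \text{MB}}{32\ \text{MB}} = \frac{21}{32} \approx 0.66
$$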

64-thread access:

Single-thread access:

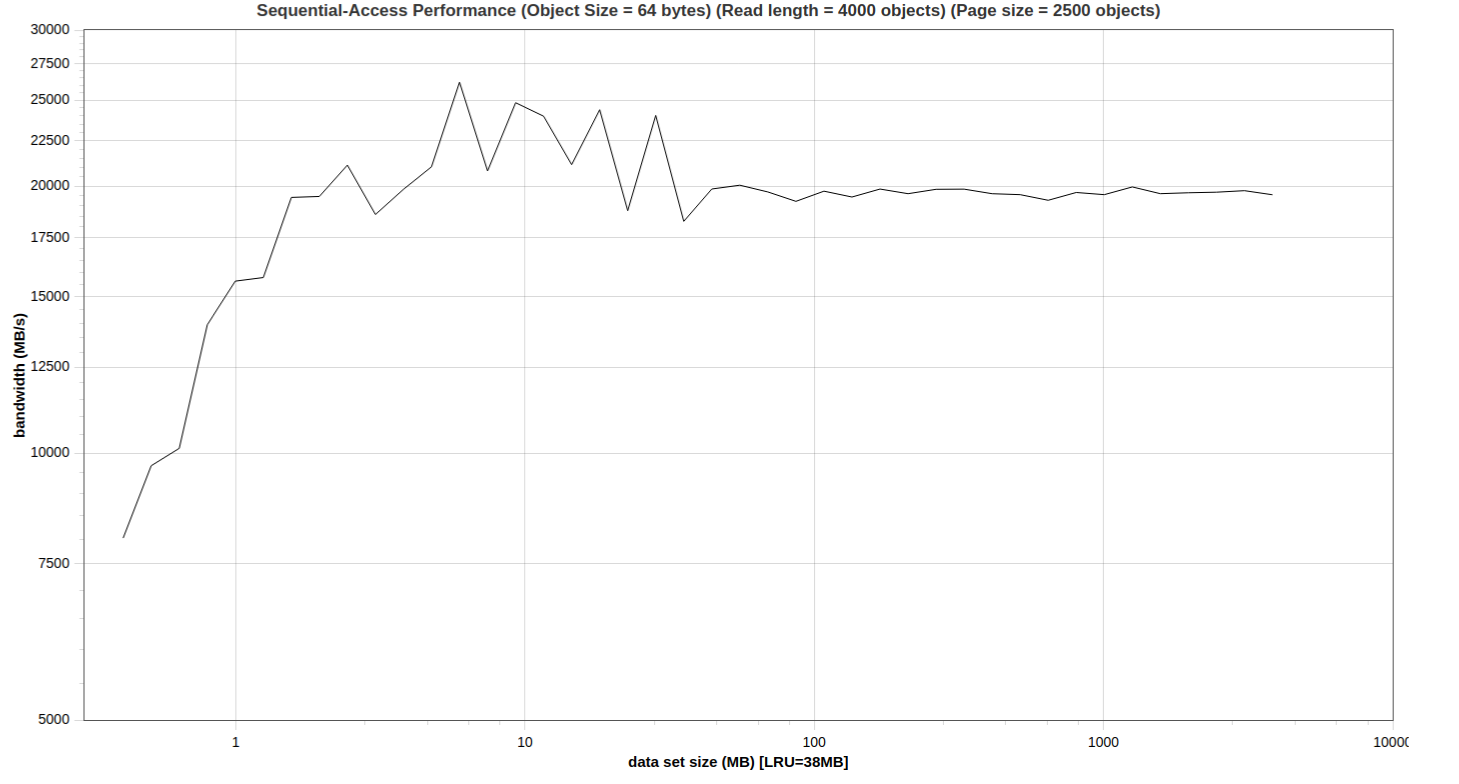

Partially overlapping 16-thread access (the overlapping region gives better cache efficiency):

Note: the test system is a low-end one with these components:

- AMD FX-8150 at 2.1 GHz + 4 GB RAM (single-channel 1333 MHz DDR3)

- GT 1030 2 GB VRAM (PCIe 2.0, x4-capable card in an x16 slot)

- K420 2 GB VRAM (PCIe 2.0 x8 slot)

- K420 2 GB VRAM (PCIe 2.0 x4 slot)

So the benchmarks reflect the combined bandwidth of the x8 + x4 + x4 PCIe 2.0 links and 10 GB/s of RAM bandwidth.
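Here is a back-of-the-envelope upper bound on the PCIe side. PCIe 2.0 signals at 5 GT/s per lane with 8b/10b encoding. That is 500 MB/s per lane per direction before protocol overhead. The 8 + 4 + 4 lanes above therefore cap out around 8 GB/s in theory, and real transfers land noticeably lower:

$$
B_{\text{lane}} = \frac{5\ \text{GT/s} \times \tfrac{8}{10}}{8\ \text{bit/B}} = 500\ \text{MB/s}, \qquad
B_{\text{total}} = (8 + 4 + 4) \times 500\ \text{MB/s} = 8\ \text{GB/s}
$$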

By replacing `m_pBuff` (while experimenting with the `host_mem` setting) with my virtual array and setting 3 MB of cache per GPU, I got this:

This is with 4 MB of cache and a different page size:

With 32 MB of cache per GPU, it even reaches 1 GB/s, but not for all I/O sizes.

With plain RAM it also peaks at 1 GB/s, but for more I/O sizes than with VRAM.

1.6 MB cache per GPU, 16 MB data set:

7 MB cache per GPU, 4 kB page size, 32 MB data set (real-world test), 512 MB drive size:

Same cache, but with the peak-performance benchmark:

10 MB cache, 1 MB page size, default test with a 32 MB data set: