core-v-verif

Pass/Fail Criteria in DV plan template

In Verification Planning 101, the Pass/Fail Criteria allows for selection between Self Checking, Signature Check, Check against RM, Assertion Check, Any/All, Other or N/A. It has been suggested that the selection be expanded to include Scoreboarding, etc.

The purpose of this issue is to capture the team's thoughts on this idea and drive a consensus view as to whether to expand the selection options for the P/F criteria in the DV plan template.

Additional context

The most up-to-date version of the simulation DV plan template can be found here.

Note: the Check against RM P/F criteria is written as Check against ISS in the current version of the DV Plan (f25b80d). This will be updated to RM pending resolution of this issue.

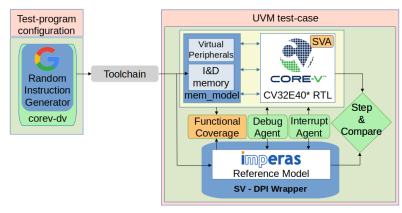

I'm not sure it makes sense to add more P/F Criteria; the following attempts to explain my reasoning. Below is a schematic view of the E40* environment showing the step-and-compare method of checking the PC/CSRs/GPRs of the core against the predictions of the ISS:

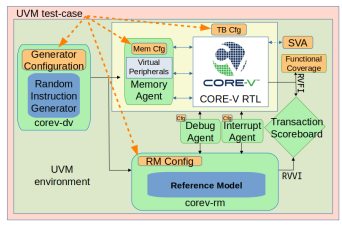

Below is a schematic of a possible future implementation of the E40* environment showing transaction scoreboarding of the PC/CSRs/GPRs of the core against the predictions of an RM:

At the resolution of the figures, there isn't a lot of difference between them. It could be argued that the P/F Criteria for both of these could be defined as Check against RM, even though one environment uses step-and-compare and the other uses transaction scoreboarding. That is an implementation detail - both are comparing the state of the DUT against an RM.
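To make the point concrete, here is a minimal Python sketch (not the E40* testbench code, which is SystemVerilog/UVM). The names `RetiredInstr`, `step_and_compare`, `Scoreboard` and `scoreboard_compare` are hypothetical, and the "state" is reduced to a PC plus tuples of GPR and CSR values. Under those assumptions, both checkers compare the same DUT state against the same RM predictions and differ only in how the comparison is sequenced:

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class RetiredInstr:
    """Architectural state at instruction retirement (hypothetical, simplified)."""
    pc: int
    gprs: tuple
    csrs: tuple


def step_and_compare(dut_trace, rm_trace):
    """Lock-step check: step DUT and RM together, compare after every step.

    Returns the index of the first mismatch, or None if all steps match.
    """
    for index, (dut, rm) in enumerate(zip(dut_trace, rm_trace)):
        if dut != rm:
            return index
    return None


class Scoreboard:
    """Transaction scoreboard: RM predictions are queued, DUT items checked on arrival."""

    def __init__(self):
        self.expected = deque()
        self.checked = 0
        self.first_mismatch = None

    def write_expected(self, item):
        # Prediction from the RM
        self.expected.append(item)

    def write_actual(self, item):
        # Observation from the DUT; a missing prediction counts as a mismatch
        predicted = self.expected.popleft() if self.expected else None
        if item != predicted and self.first_mismatch is None:
            self.first_mismatch = self.checked
        self.checked += 1


def scoreboard_compare(dut_trace, rm_trace):
    """Feed both traces through the scoreboard; same return convention as above."""
    sb = Scoreboard()
    for rm in rm_trace:
        sb.write_expected(rm)
    for dut in dut_trace:
        sb.write_actual(dut)
    return sb.first_mismatch
```

In this sketch both functions report the same first mismatch for the same pair of traces, which is why a single "Check against RM" criterion could plausibly cover both environments.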

I guess this is to some extent a matter of interpretation and to a certain degree organization; to me your reasoning appears sound, @MikeOpenHWGroup.

Furthermore, I don't think that anything other than the reference model should provide "reference data" (for lack of a better word) to the scoreboard. Thus, anything the scoreboard checks the RTL against should be based on output from the reference model, be it the ISS alone or the ISS plus extended functionality that we implement inside an RM-wrapper to cover functionality lacking from the ISS.

That said, anything that modifies the instruction flow that's not modelled in the ISS/RM sounds a bit sketchy to me. (In that case, how do we ensure that the RM and RTL stay fully in sync? And what is the point of a reference model if it doesn't fully encompass the functionality of the design?)

> That said, anything that modifies the instruction flow that's not modelled in the ISS/RM sounds a bit sketchy to me.

Agreed. I am not suggesting this for all the same reasons that you mention.

Hi. I want to mention a related point about the template (though not directly about this issue). We have test types "Directed Self-Checking" and "Directed Non-Self-Checking". But wouldn't it be cleaner to just have "Directed"? We already have a pass/fail criterion called "Self Checking", and non-self-checking tests would specify one of the other pass/fail criteria.

Another idea. Sorry, not about "pass/fail criteria" but this thread is already on the topic of vPlan methodology.

Filenames could (if it is not burdensome) have a semantic versioning suffix, like myvplan-1.0.2.xlsx.

It just came to mind because I wrote a sentence referring to part of the pma vplan but I couldn't see how I could make the reference more exact.
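As a rough illustration of what such a suffix would enable, here is a small Python sketch. It assumes the `name-MAJOR.MINOR.PATCH.xlsx` pattern from the example above; the helper `parse_vplan_filename` is hypothetical and not part of any existing tooling:

```python
import re

# Assumed naming pattern: <stem>-<major>.<minor>.<patch>.xlsx
VPLAN_NAME = re.compile(
    r"^(?P<stem>.+)-(?P<major>\d+)\.(?P<minor>\d+)\.(?P<patch>\d+)\.xlsx$"
)


def parse_vplan_filename(name):
    """Return (stem, (major, minor, patch)), or None if the name has no version suffix."""
    match = VPLAN_NAME.match(name)
    if match is None:
        return None
    version = (int(match["major"]), int(match["minor"]), int(match["patch"]))
    return match["stem"], version
```

With this, a reference could cite a specific version of a vPlan exactly, and versions compare numerically as tuples rather than as strings.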

@silabs-robin said:

> Filenames could (if it is not burdensome) have a semantic versioning suffix, like myvplan-1.0.2.xlsx.

A topic worthy of discussion. Since that is a separate topic, please create a new issue for it. Multiple topics per issue can make it difficult to find specific discussion threads after the fact, and adding or changing topics in the middle of an issue makes those threads nearly impossible to find.