Osman Tursun


Hi, that would be great. We have a few members who have been actively contributing to the Uyghur CV dataset, and we would like to offer our assistance.

@saeziae @tommai4881 Thanks for sharing.

@naoto0804 That is why I asked for the mask.

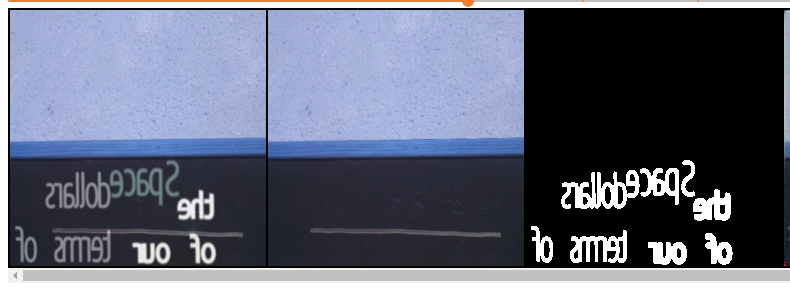

@naoto0804 I used the following code.

```
# Threshold is set to 25
masks_refine_gt = np.greater(
    np.mean(np.abs(img.astype(np.float32) - img_gt.astype(np.float32)), axis=-1),
    self.mask_threshold,
).astype(np.uint8)
```
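The snippet above depends on `img`, `img_gt`, and `self.mask_threshold` from the surrounding class. A self-contained sketch of the same thresholding idea might look like the following; the function name `difference_mask` and the toy 4x4 images are only illustrative assumptions, not part of the original code.

```python
import numpy as np


def difference_mask(img, img_gt, threshold=25.0):
    """Return a binary uint8 mask of pixels where img and img_gt differ.

    Hypothetical helper: the per-pixel absolute difference is averaged
    over the channel axis and compared against the threshold (25 in the
    comment above).
    """
    diff = np.abs(img.astype(np.float32) - img_gt.astype(np.float32))
    return np.greater(diff.mean(axis=-1), threshold).astype(np.uint8)


# Toy data (assumed for illustration): a flat background with a 2x2 dark patch
# standing in for the text region.
img_gt = np.full((4, 4, 3), 200, dtype=np.uint8)
img = img_gt.copy()
img[1:3, 1:3] = 0

mask = difference_mask(img, img_gt)
```

Casting to `float32` before subtracting matters here: subtracting `uint8` arrays directly would wrap around instead of producing negative differences.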

> @neouyghur You can try to get the mask from the background image and the input image. @HCIILAB @zhangshuaitao Although we can generate the mask from the input and ground truth...

I plotted your PSNR and l2 scores in a figure. It clearly shows that your results are not consistent. Could you explain why? @naoto0804 did you get the same PSNR score?...

@zhangshuaitao I am comparing my method with yours. I am following the same protocol; however, my MSE and PSNR curves share the same trend. Besides that, as we know...

@zhangshuaitao is your l2 score RMSE or MSE? Thanks.
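For reference, here is a minimal sketch of how the three metrics relate for a single image pair, assuming 8-bit images with a peak value of 255 (scores will differ if images are scaled to [0, 1]). The helper names are illustrative and not taken from either codebase. For one pair, PSNR is a strictly decreasing function of MSE, so the two should move in opposite directions; averages over a dataset can deviate from that somewhat, because the mean of per-image PSNR is not the PSNR of the mean MSE.

```python
import numpy as np


def mse(a, b):
    """Mean squared error between two images of the same shape."""
    d = a.astype(np.float64) - b.astype(np.float64)
    return float(np.mean(d ** 2))


def rmse(a, b):
    """Root mean squared error: the square root of the MSE."""
    return float(np.sqrt(mse(a, b)))


def psnr(a, b, max_val=255.0):
    """PSNR in dB: 10 * log10(max_val^2 / MSE); max_val=255 is an assumption."""
    m = mse(a, b)
    if m == 0:
        return float("inf")
    return float(10.0 * np.log10(max_val ** 2 / m))


# Toy example (assumed data): every pixel is off by exactly 10 grey levels.
gt = np.zeros((2, 2), dtype=np.uint8)
pred = np.full((2, 2), 10, dtype=np.uint8)

scores = {"mse": mse(gt, pred), "rmse": rmse(gt, pred), "psnr": psnr(gt, pred)}
```

Reporting which of MSE or RMSE is meant by "l2" matters when comparing tables, since the two differ by a square root and are not interchangeable.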

@naoto0804 @zhangshuaitao I think your result is reasonable since only a small part of the scene is text. However, I felt they didn't fully train the baselines. With more training,...

That is great news. Thanks.

On Wed, Nov 20, 2019 at 12:53 PM sunshine wrote:

> @neouyghur, the pre-trained weights will be released soon.