lw3259111

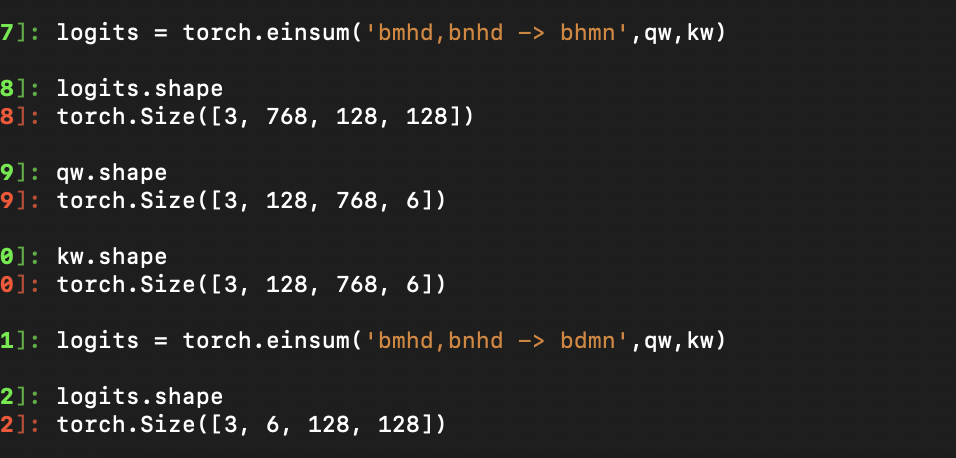

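Hello, I read your GlobalPointer entity recognition code and then tested it in PyTorch, and I think there may be a problem in the qw * kw step. My understanding is that in your code b is batch_size, m and n are seq_len, h is hidden_size, and d is the entity category, so in the final output we should compute one matrix per entity category.

### Core code

```python
logits = tf.einsum('bmhd,bnhd->bhmn', qw, kw)
# Should this be changed to:
logits = tf.einsum('bmhd,bnhd->bdmn', qw, kw)
```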
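One way to see what each of the two einsum strings in the question does is to run them on toy shapes. The sketch below is only an illustration: it uses NumPy's `np.einsum` in place of `tf.einsum`, and the sizes assigned to `b`, `m`, `n`, `h`, `d` are made up rather than taken from the repository. It shows which axis each string sums over, not which axis holds the entity categories in the actual model.

```python
import numpy as np

# Toy sizes chosen only for illustration (assumptions, not repository values).
b, m, n, h, d = 2, 5, 5, 3, 4

rng = np.random.default_rng(0)
qw = rng.standard_normal((b, m, h, d))
kw = rng.standard_normal((b, n, h, d))

# Original string: d does not appear in the output, so it is summed over.
logits_h = np.einsum('bmhd,bnhd->bhmn', qw, kw)

# Proposed string: h does not appear in the output, so it is summed over.
logits_d = np.einsum('bmhd,bnhd->bdmn', qw, kw)

print(logits_h.shape)  # (2, 3, 5, 5): one m-by-n matrix per index of h
print(logits_d.shape)  # (2, 4, 5, 5): one m-by-n matrix per index of d

# Cross-check one entry of the original form against an explicit sum over d.
manual = sum(qw[0, 1, 2, k] * kw[0, 3, 2, k] for k in range(d))
assert np.isclose(logits_h[0, 2, 1, 3], manual)
```

Whichever axis survives in the output is the one that gets its own score matrix, so the answer depends on whether the third or the fourth axis of `qw` and `kw` indexes the entity categories.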

Python 3.8: unsupported operand type(s) for "|"

Running run_clm.py with DeepSpeed on one node with multiple GPUs fails. deepspeed == 0.8.1. The error is ``` AttributeError: module 'cpu_adam' has no attribute 'create_adam' Exception ignored in: Traceback (most recent...

The model I trained cannot be loaded. The training code: `torchrun --nnodes=1 --nproc_per_node=4 \ fastchat/train/train_mem.py \ --model_name_or_path \ --data_path \ --bf16 True \ --output_dir ./checkpoints \ --num_train_epochs 3 \ --per_device_train_batch_size 4...

We would like to fine-tune your model, and we are wondering if the fine-tuned model can be used for commercial purposes.

### System Info transformers ==4.28.0.dev0 pytorch==1.13.1 ### Who can help? -- ### Information - [ ] The official example scripts - [ ] My own modified scripts ### Tasks -...

I trained the model using the 33B architecture and the train.py file with DeepSpeed, but when I saved the model using the safe_save_model_for_hf_trainer function, it was only 400M. the...

How large is the Chinese pre-training dataset? How long did training take, and how many epochs?

When a genome contig is longer than 536,870,912 bases, samtools fails. ``` ftp://ftp.ensemblgenomes.org/pub/plants/release-38/fasta/hordeum_vulgare/dna/Hordeum_vulgare.Hv_IBSC_PGSB_v2.dna.toplevel.fa.gz ``` I used this genome to assemble my reads, and it failed to sort the bwa output...