luhairong11

File "/usr/local/lib/python3.7/site-packages/albumentations-0.4.1-py3.7.egg/albumentations/augmentations/bbox_utils.py", line 28, in ensure_data_valid if data.get(data_name) and len(data[data_name][0]) < 5: ValueError: The truth value of an array with more than one element is ambiguous. Use a.any() or a.all()...
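The traceback above is the kind of error raised when a multi-element array is truth-tested with `and`. The sketch below reproduces the pattern and shows one possible guard. `ArrayLike` is a hypothetical stand-in that mimics the truthiness behaviour of a NumPy array so the example runs without NumPy, and `first_box_is_short` is an illustrative helper, not the albumentations implementation.

```python
class ArrayLike(list):
    """Hypothetical stand-in for numpy.ndarray: truth-testing fails when len > 1."""

    def __bool__(self):
        if len(self) > 1:
            raise ValueError(
                "The truth value of an array with more than one element is ambiguous."
            )
        return len(self) == 1


def first_box_is_short(data, data_name):
    """Return True if the first box stored under data_name has fewer than 5 values."""
    boxes = data.get(data_name)
    # Explicit None and length checks avoid calling bool() on the array itself.
    return boxes is not None and len(boxes) > 0 and len(boxes[0]) < 5


data = {"bboxes": ArrayLike([[0.1, 0.2, 0.3, 0.4], [0.5, 0.5, 0.9, 0.9]])}

# The pattern from the traceback: `and` truth-tests the whole array first.
try:
    outcome = bool(data.get("bboxes") and len(data["bboxes"][0]) < 5)
except ValueError as exc:
    outcome = str(exc)

# The guarded version never truth-tests the array.
guarded = first_box_is_short(data, "bboxes")
```

On the caller's side, converting the boxes to plain Python lists before passing them in would presumably sidestep the truth test as well, though that is an assumption about how the affected version handles its inputs.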

Model decryption issue

Has anyone run into a decrypted caffemodel that Caffe cannot parse and that raises an error?

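Hello, I'd like to ask why the background in the video produced by my trained model jitters so badly. Training command: python main.py data/May/ --workspace trial_May/ -O --iters 200000 The training parameters are as follows:    Below is the test result after training: https://github.com/ashawkey/RAD-NeRF/assets/11584869/fc3fb595-3c93-4bee-be6b-41b0721bff0f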

Does streaming output not include token statistics? The code appears to support them, so why are they missing from the output?

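The model is codegeex2-6b-int4. For some requests the output contains the words "问" ("Question") and "答" ("Answer"), which looks wrong. Aren't "问" and "答" only used when constructing the prompt? Why do they sometimes appear in the output as well, and fairly often?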

When using chatglm-cpp to accelerate inference, it is much slower than inference with transformers. Has anyone else run into this? The model is codegeex2-6b-int4.

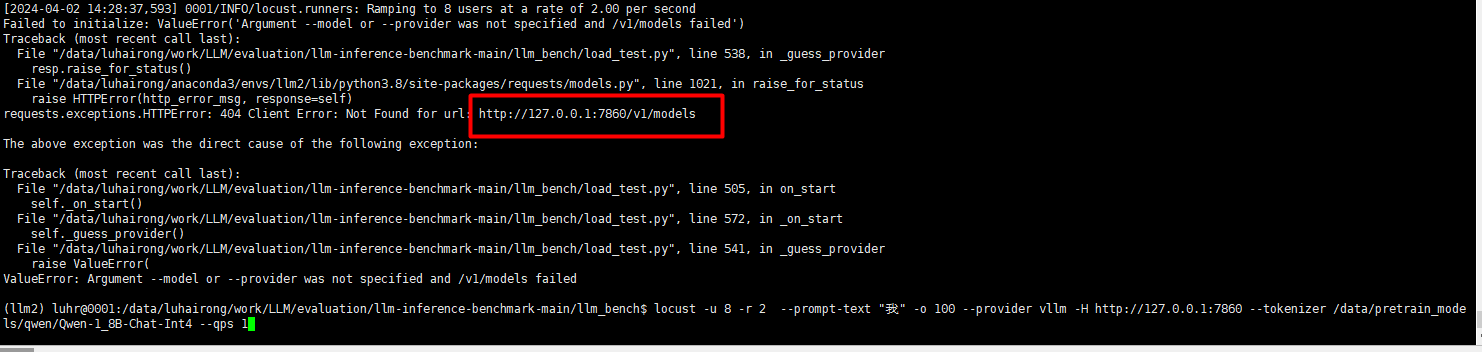

Command executed: locust -u 8 -r 2 --prompt-text "我" -o 100 --provider vllm -H http://127.0.0.1:7860 --tokenizer /data/pretrain_models/qwen/Qwen-1_8B-Chat-Int4 --qps 1 Is there a discussion group for this?

I have successfully converted to ONNX, but I'm getting an error when converting to TensorRT. What should I do? [TRT] [E] /ecd1/ecd1.0/ecd1.0.0/Conv: two inputs (data and weights) are allowed only...

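### Reminder - [X] I have read the README and searched the existing issues. ### Reproduction python src/api.py --model_name_or_path /data/models/LLM_models/qwen/Qwen-72B-Chat-Int4 --template qwen --infer_backend vllm --vllm_gpu_util 0.9 --vllm_maxlen 8000 The configuration above sets the maximum token count to 8000. When the input exceeds 8000 tokens, a streaming call to the API still returns two JSON messages with empty content, while vLLM logs a warning underneath saying the maximum token count was exceeded. Could the code raise an exception instead, so that the response is easier to interpret? ###...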
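The behaviour requested above could be sketched as an explicit length check that runs before the request reaches the inference backend. This is a minimal illustration, not the project's or vLLM's actual code: `PromptTooLongError` and `check_prompt_length` are hypothetical names, and the token IDs are assumed to come from whatever tokenizer the server already uses.

```python
class PromptTooLongError(ValueError):
    """Hypothetical error raised when a prompt exceeds the configured token limit."""


def check_prompt_length(token_ids, max_len):
    """Return the prompt length, or raise if it exceeds max_len tokens."""
    n_tokens = len(token_ids)
    if n_tokens > max_len:
        # Fail loudly instead of letting the stream return empty chunks.
        raise PromptTooLongError(
            f"Prompt has {n_tokens} tokens, exceeding the limit of {max_len}."
        )
    return n_tokens


# Example with placeholder token IDs; 8000 matches the limit in the report.
short_len = check_prompt_length(list(range(10)), 8000)

try:
    check_prompt_length(list(range(8001)), 8000)
    error_message = None
except PromptTooLongError as exc:
    error_message = str(exc)
```

An API layer could catch such an error and return a clear error response to the client, rather than a stream of empty messages.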