studio

studio copied to clipboard

studio copied to clipboard

Improve caching behavior for remote files (bag and mcap)

When playing the sample nuscenes data, we download the entire dataset multiple times over. We even re-download the entire dataset when I do things like delete a panel. We should be much smarter about caching.

We should be much smarter about caching.

@amacneil What would you consider "ok" behavior here? Would you be ok if we downloaded the data set 2x? but not 3x? Since this is a feature and not a bug I'm wanting to understand what would be an acceptable behavior or what you want to make sure we don't do. Additionally at what file sizes would you expect we take certain actions and not others?

I'm trying to figure out what the scope of this feature issue is and whether we need to break this up into smaller deliverables and where we want to stack that on the roadmap.

I need a bit more information around what led to having this data downloaded. We have a 1GB limit to the memory cache and we also throw away the cache when topics change (there are challenges with not doing that that we have not tackled). During looping playback of the sample data I do not experience unbounded network use.

I tried the latest version of the app with "explore sample data" and I experienced 511MB of data transfer as reported by devtools. Even when I went back to the start of the file and played again there was no new data transferred.

I'm going to close this out as the caching is what is currently expected. I agree we should tackle better caching and can do that as part of a future effort.

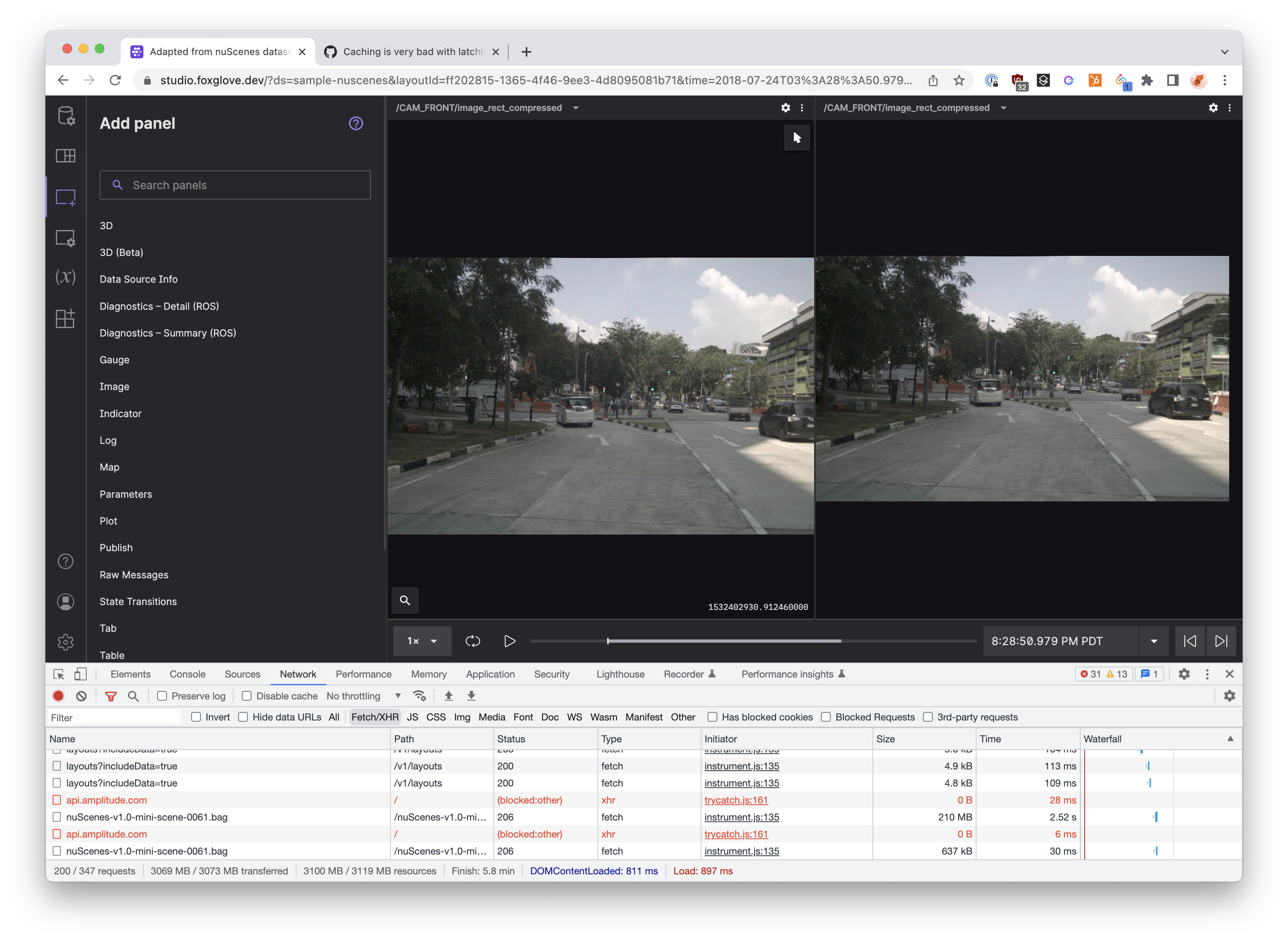

Here's me downloading 2.5GB of sample data just clicking around. If we have a memory block cache I don't understand why we go back and fetch so much data from http.

https://user-images.githubusercontent.com/637671/189048529-5ca7fb85-5022-41ad-a39f-43a2f1ab08ed.mp4

The behavior you are seeing is expected for the current implementation of both Iterable player and the underlying http bag data source.

When you change a topic on a panel (lets say to a different image topic as you are doing several times above) we try to buffer the data for that topic so your playback experience is smooth. In the case of a remote http bag file this means reading the chunks that contain the new topic (and often contain other topics). For these chunks we have to make a remote request to read them because the chunks are not in the cache. The http reading interfaces have a small cache (100mb iirc) for handling the index data rather than a large cache for storing the entire file in cache and this has always been the case. This chunk caching is not part of iterable player or the bag iterable source.

Additionally, when you change the set of subscribed topics the message level cache in the iterable player is discarded. This is a technical tradeoff decision to simplify the cache handling logic and is seen as something we can improve in the future.

Another change in behavior with the iterable player is that we separate the message level cache for on-demand topic subscriptions and full topic subscriptions (for plot). We download these topics separately as the management of their caching is separate. This is also a technical tradeoff for delivering the new playback behavior and for some data sources (like our data platform player) leads to faster loading of the full topics if they are lightweight.

I do not believe the behavior described above is a bug. It is the as-designed behavior that comes with some tradeoffs. There are caching improvements to make specific to not only the new player but also specific to how this impacts each data source (they are still different data sources).

For this ticket I recommend it be put back to a feature and reworded as an improvement to the remote-bag caching behavior. Other items to explore for this would be why http level caching is not doing anything here where for something like this we could assume the browser would help us - maybe that isn't true because of our range requests or some other aspect.

There are paths to improvements for our caching strategies for the different data sources but these are not bugs. The behavior you are seeing is expected and represents a tradeoff decision in the current implementation.

Per slack discussion - short term improvement here would be to stream sample dataset from data platform, which is much more efficient bandwidth wise.

We still need to improve caching to reduce network calls (across both DP and http), but improving the new user experience with sample dataset is higher priority.