Ataf Fazledin Ahamed

I filled out the form about 2-3 weeks ago and received the signed URL a while ago, so I guess you just have to be patient.

> @fazledyn Is your llama working?
>
> I downloaded and tried to run the 'example.py'. However, due to absence of GPU, I'm unable to run locally. Looking for running...

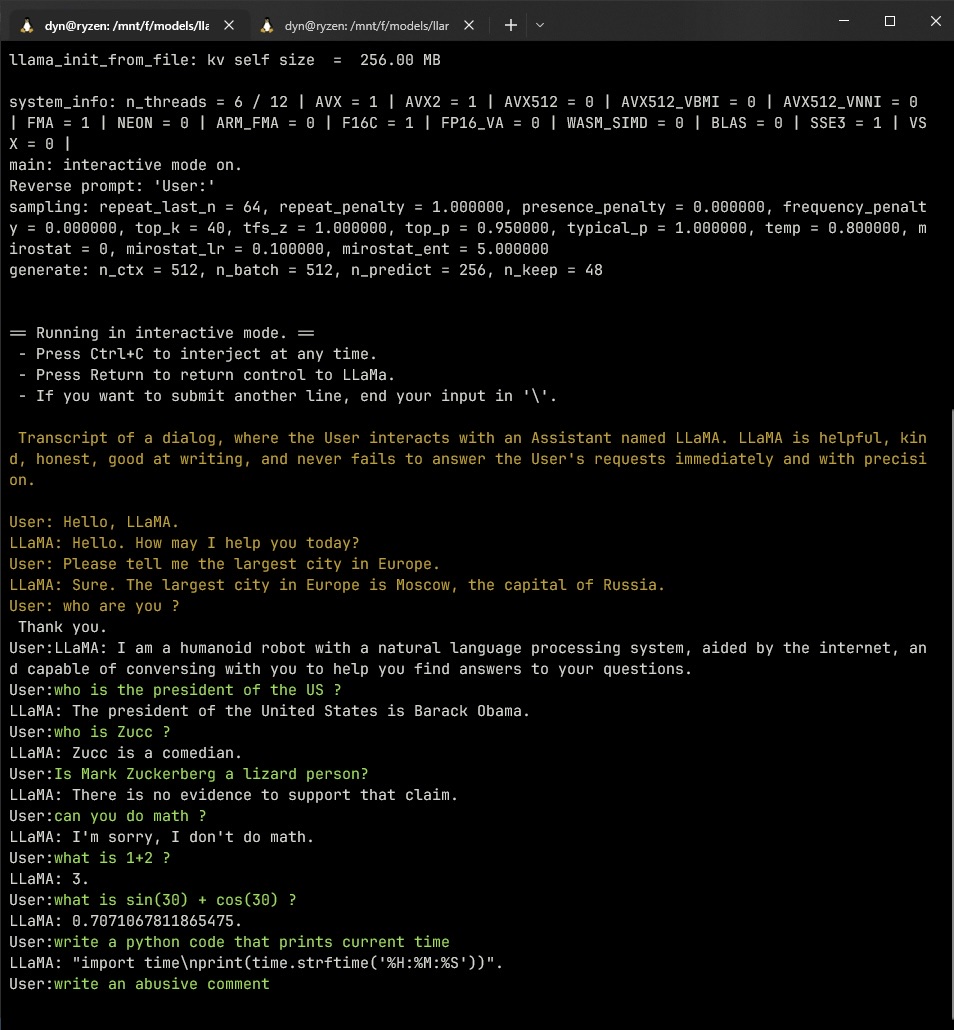

Hey @maharun0, I just ran LLaMA using the 'llama.cpp' port on a Ryzen 5700G with 16 GB RAM.

0. Make sure you have run `pip install -r requirements.txt` and `pip install -e .`
1. Replace the `PRESIGNED_URL` with the one sent to your email
2. Assign a...

You guys can check out this torrent system [here](https://github.com/facebookresearch/llama/pull/73) [magnet:?xt=urn:btih:ZXXDAUWYLRUXXBHUYEMS6Q5CE5WA3LVA&dn=LLaMA](magnet:?xt=urn:btih:ZXXDAUWYLRUXXBHUYEMS6Q5CE5WA3LVA&dn=LLaMA)

1. I tried localhost:6000, localhost:8000, 127.0.0.1:6000/8000, and a few others that I don't remember in my browser. I was hoping a message would be printed to the console stating the port the...
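
Instead of guessing ports in the browser, a small script can check which of them actually has something listening. This is only a rough sketch: the helper names `is_port_open` and `find_open_ports` are made up for illustration, and the candidate ports are just the ones mentioned above, not ports the program is known to use.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 0.5) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise,
        # so a closed port does not raise an exception here.
        return sock.connect_ex((host, port)) == 0


def find_open_ports(host: str, ports: list[int]) -> list[int]:
    """Return the subset of `ports` that accept TCP connections on `host`."""
    return [port for port in ports if is_port_open(host, port)]


if __name__ == "__main__":
    # Assumption: these are only the ports tried by hand above.
    candidates = [6000, 8000]
    print(find_open_ports("127.0.0.1", candidates))
```

If the list comes back empty, nothing is listening on those ports, which would suggest the process is not starting a server on them at all.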