Todd Parsons

Do you have any eyetracking components in your experiment? ioHub handles both keyboard/mouse input and eyetracker input, so the code for setting up ioHub (which also creates the variable `eyetracker`)...

Ah, I see the problem - the code to end the experiment assumes the existence of `eyetracker` even if the backend isn't `ioHub` and there's no eyetracking used. Should be...
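To illustrate the kind of guard this implies, here is a minimal sketch and not the actual fix. The function name `disconnect_eyetracker_if_present` and the `namespace` dict are hypothetical, and it assumes the tracker object exposes `setConnectionState`, as ioHub eyetracker devices do.

```python
def disconnect_eyetracker_if_present(namespace):
    """Disconnect the eyetracker only if one was actually created.

    `namespace` is a hypothetical dict of experiment variables; when ioHub
    was never set up, it has no "eyetracker" entry (or the entry is None).
    Returns True if a tracker was found and disconnected, else False.
    """
    eyetracker = namespace.get("eyetracker")
    if eyetracker is None:
        # No ioHub backend and no eyetracking: nothing to shut down
        return False
    # Assumed call: ioHub eyetracker devices provide setConnectionState
    eyetracker.setConnectionState(False)
    return True
```

With a check like this, the end-of-experiment code runs the same way whether or not ioHub created `eyetracker`.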

That one's trickier as it looks like a problem with ioHub internally - an ioHub server should be launched when the keyboard backend is `ioHub` so the Python code being...

This exists in the form of @wakecarter's [ScreenScale demo](https://pavlovia.org/Wake/screenscale), which calculates the screen size and stores the relevant attributes in expInfo. In Python we can go one step...

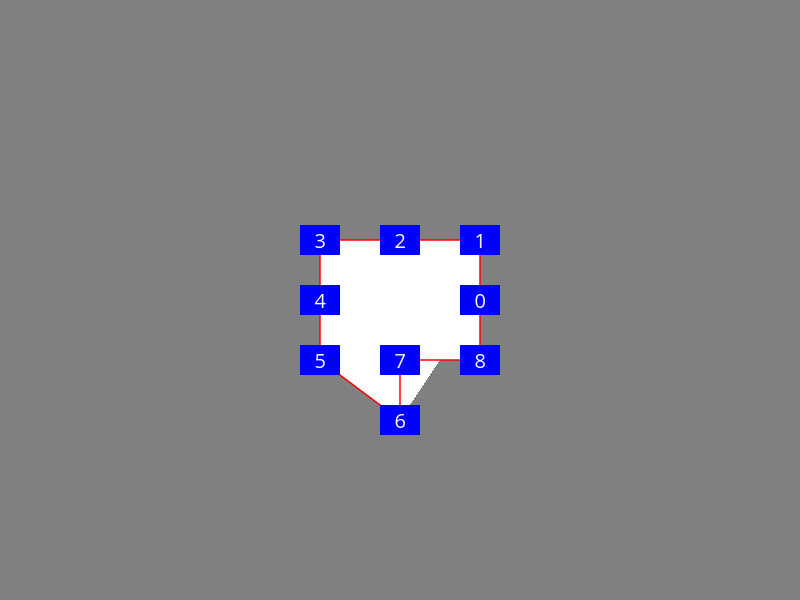

If you use `verts.reverse()` to arrange vertices anticlockwise, it instead results in this:

Task linked: [CU-8692uzc0q CounterBalance Component for Builder](https://app.clickup.com/t/8692uzc0q)

Closing this as the microphone component can be used without pocketsphinx - if "Transcribe audio" is unchecked or if the transcription backend is something else then it doesn't use pocketsphinx...
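The condition described here can be sketched as a lazy import. This is an illustrative assumption, not the microphone component's actual code: `load_transcriber`, its parameters, and the backend name string `"pocketsphinx"` are all hypothetical.

```python
import importlib


def load_transcriber(transcribe_audio, backend):
    """Import pocketsphinx only when it is genuinely needed.

    `transcribe_audio` mirrors the "Transcribe audio" checkbox and `backend`
    is a hypothetical backend name; both are assumptions for illustration.
    Returns the imported module, or None when pocketsphinx is not required.
    """
    if not transcribe_audio or backend != "pocketsphinx":
        # Recording without transcription, or a different backend:
        # pocketsphinx is never touched, so it need not be installed
        return None
    return importlib.import_module("pocketsphinx")
```

Deferring the import this way means a missing pocketsphinx install only matters to users who actually select it for transcription.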

Fixed in #6303

Solution 2 is implemented in #6740

@mdcutone is this still relevant? I know you've made a lot of improvements since opening this; do any of those supersede it?