eg3d

eg3d copied to clipboard

eg3d copied to clipboard

I try to implement EG3d, and I find the camera parameters controls ID, and noise Z is invalid.

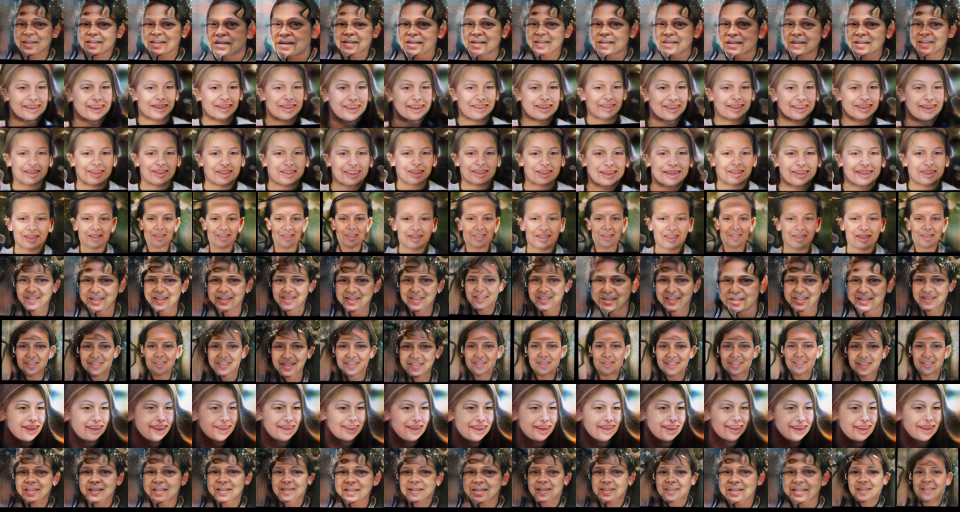

Like this. Each row is generated by different noise z, and each column is generated by the same camera paramters.

Like this. Each row is generated by different noise z, and each column is generated by the same camera paramters.

You can find, each row contains different IDs, although each column control same angle.

Hi,

May I ask how did you get the coordinate of the sampled point in the tri-plane space?

In the original NeRF, the sampled points are under the world coordinate.

In my understanding, the world coordinate is a continuous and unlimited space, while the tri-plane sapce is a bounded space. I am confused about the mapping between these two spaces.

Best,

just scale coords into -1 to 1

------------------ Original ------------------ From: Xiao Pan @.> Date: Wed,Jan 19,2022 4:28 PM To: NVlabs/eg3d @.> Cc: shoutOutYangJie @.>, Author @.> Subject: Re: [NVlabs/eg3d] I try to implement EG3d, and I find the cameraparameters controls ID, and noise Z is invalid. (Issue #5)

Hi, May I ask how did you get the coordinate of the sampled point in the tri-plane space? In the original NeRF, the sampled points are under the world coordinate. In my understanding, the world coordinate is a continuous and unlimited space, while the tri-plane sapce is a bounded space.

Best,

— Reply to this email directly, view it on GitHub, or unsubscribe. Triage notifications on the go with GitHub Mobile for iOS or Android. You are receiving this because you authored the thread.Message ID: @.***>

Thanks for your reply. Could you please provide more details about the scaling process? In my understanding, scaling the unlimited world coordinate to [-1, 1] needs to know the upper bound (Max) and lower bound (Min) of each axis. How did you choose Max and Min ? Am I missing something important in the paper ? Best,

Thanks for your reply. Could you please provide more details about the scaling process? In my understanding, scaling the unlimited world coordinate to [-1, 1] needs to know the upper bound (Max) and lower bound (Min) of each axis. How did you choose Max and Min ? Am I missing something important in the paper ? Best,

Making the initialized rays under the camera coordinate and divide a depth range, which can make points ranging from -1 to 1. you can read the code of CIPS-3d

Thank you for your help~ I will read the referenced code Best,

Hi,

I wonder whether you use the softmax activation as described in the paper? If so, can you provide with more details about the architecture of decoder?

Besides, the reason why camera parameters controls identity while noise not is probably that the model is experiencing mode collapse which may be relieved after training for more time and camera parameters are passed as condition to the mapping network. As described in the paper, during the inference stage, the camera parameters passed to the mapping network should be fixed while you need to move camera when rendering. Thus, these two are separated.

Best,

Hi,

I wonder whether you use the softmax activation as described in the paper? If so, can you provide with more details about the architecture of decoder?

Besides, the reason why camera parameters controls identity while noise not is probably that the model is experiencing mode collapse which may be relieved after training for more time and camera parameters are passed as condition to the mapping network. As described in the paper, during the inference stage, the camera parameters passed to the mapping network should be fixed while you need to move camera when rendering. Thus, these two are separated.

Best,

If two are separated, the view direction is always decided by nerf. Why should the condition send to mapping network?

Hi, I wonder whether you use the softmax activation as described in the paper? If so, can you provide with more details about the architecture of decoder? Besides, the reason why camera parameters controls identity while noise not is probably that the model is experiencing mode collapse which may be relieved after training for more time and camera parameters are passed as condition to the mapping network. As described in the paper, during the inference stage, the camera parameters passed to the mapping network should be fixed while you need to move camera when rendering. Thus, these two are separated. Best,

If two are separated, the view direction is always decided by nerf. Why should the condition send to mapping network?

That's their design.

Like this. Each row is generated by different noise z, and each column is generated by the same camera paramters.

You can find, each row contains different IDs, although each column control same angle.

Hi shoutOutYangJie! If you are conditioning the generator based on the camera pose (generator pose conditioning), then we expect that this pose will be correlated with the scene identity. This is a good thing, since it allows the model to more closely match the image distribution! At the beginning of training, you might find that the camera parameters seem to have more influence over scene identity than the noise vector, but this should improve throughout training.

For rendering images shown in the paper, we typically condition the generator on a single fixed pose (a front-facing pose) and then move the camera independently.

Like this. Each row is generated by different noise z, and each column is generated by the same camera paramters. You can find, each row contains different IDs, although each column control same angle.

Hi shoutOutYangJie! If you are conditioning the generator based on the camera pose (generator pose conditioning), then we expect that this pose will be correlated with the scene identity. This is a good thing, since it allows the model to more closely match the image distribution! At the beginning of training, you might find that the camera parameters seem to have more influence over scene identity than the noise vector, but this should improve throughout training.

For rendering images shown in the paper, we typically condition the generator on a single fixed pose (a front-facing pose) and then move the camera independently.

How can you condition the generator on a single fixed pose and move the camera independently? Cause the condition came from camera pose?

hi,I meet same problems ,do you have solved it?I use about 20k images,is it possible that there is too little data?