TensorRT

NVIDIA® TensorRT™ is an SDK for high-performance deep learning inference on NVIDIA GPUs. This repository contains the open source components of TensorRT.

## Description ## Environment **TensorRT Version**: TensorRT-8.2.5.1 **NVIDIA GPU**: V100 32G **NVIDIA Driver Version**: 470.57.02 **CUDA Version**: 11.1 **CUDNN Version**: 8.0.5 **Operating System**: Centos7 **Python Version (if applicable)**: 3.8 **Tensorflow...

## Description Hello, I'm debugging an INT8 model and want to mark some model layers as outputs, but I got an error when I tried the "mark all" command. Because mark...
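Marking intermediate tensors as outputs is usually done so they can be compared layer by layer against a higher-precision reference run (for example FP32 vs INT8). A minimal sketch of that comparison step, assuming the tensors from both runs have already been collected into dicts mapping tensor names to NumPy arrays (how they are dumped is outside this sketch). `compare_layer_outputs`, the tolerances, and the layer names are hypothetical.

```python
import numpy as np


def compare_layer_outputs(reference, candidate, atol=1e-2, rtol=1e-2):
    """Compare per-layer tensors from two runs (e.g. FP32 reference vs INT8).

    Both arguments are dicts mapping a tensor name to a NumPy array.
    Returns a list of (name, max_abs_diff, passed) for names present in both.
    """
    report = []
    for name in reference:
        if name not in candidate:
            continue  # tensor was not marked/dumped in the candidate run
        ref = np.asarray(reference[name], dtype=np.float64)
        out = np.asarray(candidate[name], dtype=np.float64)
        max_abs = float(np.max(np.abs(ref - out))) if ref.size else 0.0
        passed = bool(np.allclose(out, ref, atol=atol, rtol=rtol))
        report.append((name, max_abs, passed))
    return report


# Hypothetical tensors: the first layer matches, the second drifts.
ref = {"conv1": np.array([1.0, 2.0]), "relu1": np.array([0.0, 3.0])}
cand = {"conv1": np.array([1.0, 2.001]), "relu1": np.array([0.5, 3.0])}
for name, max_abs, passed in compare_layer_outputs(ref, cand):
    print(name, max_abs, passed)
```

The first layer whose check fails is usually the place to start looking for an INT8 accuracy problem.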

## Description ## Environment **TensorRT Version**: **NVIDIA GPU**: **NVIDIA Driver Version**: **CUDA Version**: **CUDNN Version**: **Operating System**: **Python Version (if applicable)**: **Tensorflow Version (if applicable)**: **PyTorch Version (if applicable)**: **Baremetal...

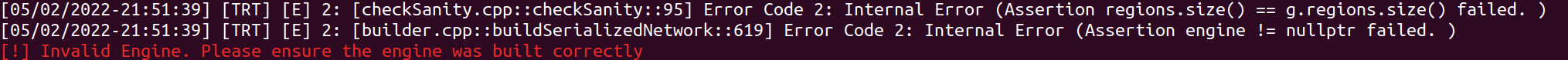

I want to convert a PyTorch model to TensorRT for INT8 inference, so I go PyTorch model -> ONNX model -> TRT engine. With TensorRT 7.2.2.3 this succeeded. I...
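For context on what INT8 inference does to each tensor, here is a minimal sketch of symmetric per-tensor quantization with the scale taken from the absolute maximum (the simplest "max" calibration). This is an assumption for illustration: TensorRT's own calibrators may choose the range differently, and the helper names are hypothetical.

```python
import numpy as np


def int8_scale_from_amax(amax):
    """Symmetric per-tensor scale so that [-amax, amax] maps onto [-127, 127]."""
    return float(amax) / 127.0


def quantize_int8(x, scale):
    """Round x / scale to the nearest integer and clamp to the symmetric INT8 range."""
    q = np.round(np.asarray(x, dtype=np.float32) / scale)
    return np.clip(q, -127, 127).astype(np.int8)


def dequantize_int8(q, scale):
    """Map INT8 codes back to approximate real values."""
    return q.astype(np.float32) * scale


scale = int8_scale_from_amax(2.54)                  # amax observed during calibration
q = quantize_int8([0.0, 0.02, -2.54, 5.0], scale)   # 5.0 is outside the range and saturates
print(q, dequantize_int8(q, scale))
```

Values beyond `amax` saturate, which is one reason the calibration range matters so much for INT8 accuracy.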

## Description I encountered the following error:
```bash
The engine plan file is generated on an incompatible device, expecting compute 8.6 got compute 7.5, please rebuild.
4: [runtime.cpp::deserializeCudaEngine::50] Error Code 4:...
```
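The message says the plan was built for a GPU with a different compute capability than the one loading it, and asks for a rebuild on (or for) the target GPU. A small sketch for spotting this case in captured log text, assuming the log is available as a string. The message does not label which number belongs to the device and which to the plan, so the sketch keeps them in printed order. `parse_compute_mismatch` and `needs_rebuild` are hypothetical helpers, not TensorRT APIs.

```python
import re

# Matches the fragment "expecting compute 8.6 got compute 7.5" from the log above.
_CC_PATTERN = re.compile(r"expecting compute (\d+)\.(\d+) got compute (\d+)\.(\d+)")


def parse_compute_mismatch(log_line):
    """Return the two (major, minor) compute capabilities in printed order, or None."""
    match = _CC_PATTERN.search(log_line)
    if match is None:
        return None
    a_major, a_minor, b_major, b_minor = map(int, match.groups())
    return (a_major, a_minor), (b_major, b_minor)


def needs_rebuild(cc_a, cc_b):
    """Assumption: with default build settings an engine is only usable on the
    exact compute capability it was built for, so any difference means rebuild."""
    return tuple(cc_a) != tuple(cc_b)


line = ("The engine plan file is generated on an incompatible device, "
        "expecting compute 8.6 got compute 7.5, please rebuild.")
pair = parse_compute_mismatch(line)
if pair is not None and needs_rebuild(*pair):
    print("Rebuild the engine on the target GPU:", pair)
```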

I'm implementing N:M fine-grained sparsity acceleration on ViTs and ConvNets. It seems that when accelerating ConvNets (e.g. the official ResNeXt101-32x8d example), the "Layers eligible for sparse math"...
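TensorRT's sparse math targets the 2:4 pattern: at most two nonzero values in every group of four consecutive weights. A minimal NumPy sketch of magnitude-based 2:4 pruning plus a pattern checker, assuming a 2-D `[out, in]` weight whose last axis (input channels) is a multiple of 4; convolution weights would first need reshaping so the grouped axis is last. The function names are hypothetical and this is not NVIDIA's own pruning tool.

```python
import numpy as np


def prune_2_4(weights):
    """Keep the 2 largest-magnitude values in every group of 4 along the last axis."""
    w = np.asarray(weights, dtype=np.float32)
    if w.shape[-1] % 4 != 0:
        raise ValueError("last dimension must be a multiple of 4")
    groups = w.reshape(-1, 4)  # consecutive groups of 4 along the last axis
    # Column indices of the 2 smallest magnitudes in each group get zeroed.
    drop = np.argsort(np.abs(groups), axis=1)[:, :2]
    mask = np.ones_like(groups, dtype=bool)
    np.put_along_axis(mask, drop, False, axis=1)
    return (groups * mask).reshape(w.shape)


def is_2_4_sparse(weights):
    """True if every group of 4 along the last axis has at most 2 nonzeros."""
    w = np.asarray(weights).reshape(-1, 4)
    return bool(np.all(np.count_nonzero(w, axis=1) <= 2))


w = np.array([[0.1, -0.9, 0.5, 0.2, 1.0, 2.0, -3.0, 0.0]])
print(prune_2_4(w), is_2_4_sparse(w), is_2_4_sparse(prune_2_4(w)))
```

A layer whose weights fail `is_2_4_sparse` would not be expected to run on sparse kernels, which is one thing to check when a layer is missing from the eligible list.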

I tested inference on a GeForce 3090 and a Jetson TX2. The environment of the 3090 is ``` TensorRT Version: 8.4.1.5 NVIDIA GPU: GeForce 3090 CUDA Version: 11.4 CUDNN Version: 8.4.0 Operating System:...

CUDA: 11.1, TensorRT: 7.2.1.6, cuDNN: 8.0. First I used trtexec to convert an ONNX model to a TRT file with the `--fp16` flag, then I deserialized the TRT file to create an engine and...

## Description With TRT 8.0.3 the following code runs fine, but with TRT 8.4 it raises an error. ``` # cu_seq_lens shape is (-1), I want to get cu_seq_lens[:-1] cu_seq_shape = network.add_shape(cu_seq_lens).get_output(0)...
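The shape arithmetic behind `cu_seq_lens[:-1]` for a dynamic 1-D tensor is: slice size = input shape minus one, start = 0, stride = 1. In the TensorRT network API this is commonly expressed by computing the size tensor from `add_shape` with an elementwise subtraction and feeding it into a slice layer as its dynamic size input, but that mapping is an assumption here. The sketch below only emulates the arithmetic in NumPy so the expected result can be checked; `slice_drop_last` is a hypothetical helper.

```python
import numpy as np


def slice_drop_last(x):
    """Emulate x[:-1] for a 1-D tensor using only shape arithmetic.

    Mirrors the graph one would build: take the shape, subtract 1 to get
    the slice size, then slice with start=0 and stride=1.
    """
    x = np.asarray(x)
    shape = np.array(x.shape, dtype=np.int64)  # like add_shape(...).get_output(0)
    one = np.ones_like(shape)                  # constant [1]
    size = shape - one                         # elementwise SUB -> [n - 1]
    start = np.zeros_like(shape)               # [0]
    stride = np.ones_like(shape)               # [1]
    # Gather elements start, start + stride, ... for `size` steps (1-D case).
    idx = start[0] + stride[0] * np.arange(size[0])
    return x[idx]


print(slice_drop_last([0, 3, 7, 12]))  # cumulative sequence lengths minus the last entry
```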