TensorRT


TensorRT 8 INT8 model: ConvTranspose layer output error

Description

Hello,

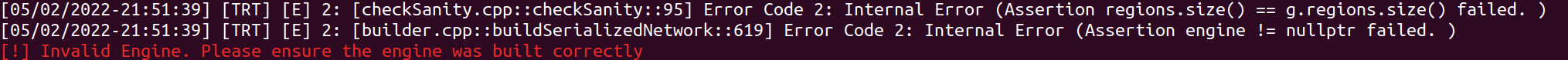

I'm debugging an INT8 model and want to mark some of the model's layers as outputs, but I got an error when I tried the "mark all" command.

Because "mark all" works fine with normal float ONNX models, I'm wondering whether TensorRT does not support this "mark all" function for INT8 models. Is there another way to get every layer's output for debugging an INT8 model? Could you help me with this issue? Thanks!

Environment

TensorRT Version: 8.4
NVIDIA GPU: 2080Ti
NVIDIA Driver Version:
CUDA Version: 11.6
CUDNN Version: 8.3
Operating System: Ubuntu 18.04
Python Version (if applicable): 3.7
Tensorflow Version (if applicable):
PyTorch Version (if applicable):
Baremetal or Container (if so, version):

Relevant Files

Steps To Reproduce

Hello @zhangjoey115, I assume you are using the "mark all" option in Polygraphy.

First, "mark all" can hide some issues when debugging accuracy. Without "mark all", some layers are fused together, but marking every tensor as an output breaks those fusions. Fused and unfused kernels do not share the same implementation, so even if you have tuned accuracy for the unfused version, you might still have an accuracy issue in the fused version.
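To keep the fused kernels intact, only the tensor that leaves each fused group should be marked. The sketch below assumes you have already read the fused groups by hand from the build log and have a mapping from layer name to output tensor name; the layer and tensor names are hypothetical.

```python
from typing import Dict, List, Sequence


def fused_group_outputs(fused_groups: Sequence[Sequence[str]],
                        layer_outputs: Dict[str, str]) -> List[str]:
    """Return the output tensor of the last layer in each fused group.

    fused_groups: layer names in execution order, one list per fused
        engine layer (for example ["Conv_0", "Relu_1"]), read by hand
        from the verbose build log.
    layer_outputs: mapping from layer name to the name of the tensor
        that layer produces (hypothetical names in the example below).
    """
    tensors = []
    for group in fused_groups:
        if not group:
            continue  # nothing to mark for an empty group
        # Only the last layer's output leaves the fused kernel; marking an
        # intermediate tensor would force the fusion to be split.
        tensors.append(layer_outputs[group[-1]])
    return tensors


if __name__ == "__main__":
    groups = [["Conv_0", "Relu_1"], ["ConvTranspose_2"]]
    outs = {"Conv_0": "conv0_out", "Relu_1": "relu1_out",
            "ConvTranspose_2": "output"}
    print(fused_group_outputs(groups, outs))
```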

Second, "mark all" does not work for networks that contain boolean tensors or loops. This is an internal TensorRT implementation issue, and I am not sure whether you hit it.

So we usually recommend enabling the verbose log level, checking the layer information section of the log to see which layers were fused, and marking only the outputs of the fused layers as network outputs.
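Marking a chosen set of tensors can also be done through the TensorRT Python network API. This is a minimal sketch: it assumes `network` behaves like `tensorrt.INetworkDefinition` (`num_layers`, `get_layer`, `mark_output`) and that the tensor names are ones you picked from the verbose log. `tensorrt` is not imported, so the selection logic can be checked with stand-in objects.

```python
from typing import Iterable, List


def mark_tensors_as_outputs(network, tensor_names: Iterable[str]) -> List[str]:
    """Mark selected layer outputs of a TensorRT network as network outputs.

    Returns the names that were newly marked, in network order.
    Raises ValueError if a requested name is not produced by any layer.
    """
    wanted = set(tensor_names)
    seen = set()
    marked = []
    for i in range(network.num_layers):
        layer = network.get_layer(i)
        for j in range(layer.num_outputs):
            tensor = layer.get_output(j)
            if tensor.name not in wanted:
                continue
            seen.add(tensor.name)
            # Tensors that are already network outputs need no extra marking.
            if not tensor.is_network_output:
                network.mark_output(tensor)
                marked.append(tensor.name)
    missing = wanted - seen
    if missing:
        # Catch typos early instead of silently comparing fewer tensors.
        raise ValueError(f"tensors not found in network: {sorted(missing)}")
    return marked

# Typical use with TensorRT 8.x (not executed here):
# import tensorrt as trt
# logger = trt.Logger(trt.Logger.VERBOSE)  # verbose log shows layer info
# builder = trt.Builder(logger)
# flags = 1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)
# network = builder.create_network(flags)
# parser = trt.OnnxParser(network, logger)
# with open("model.onnx", "rb") as f:
#     parser.parse(f.read())
# mark_tensors_as_outputs(network, ["relu1_out"])  # hypothetical name
```

With the Polygraphy CLI, the same tensor names can be passed to `--trt-outputs` and `--onnx-outputs` instead of `mark all`.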

@ttyio Thanks a lot for the detailed reply! Yes, I'm using Polygraphy to debug the accuracy issue. I tried marking only a few layers, and it works!

But when I compared the layer outputs of the float model and the INT8 TRT model, I found that one ConvTranspose2d layer gives output quite different from the float model. The inputs to ConvTranspose2d (the Relu output in the following picture) are almost the same in the two models (diff < 5%), but the outputs are quite different (diff from 20% to over 100%).
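The thread does not say how these percentages were computed. One option, assumed here, is a norm-based relative error over the whole tensor, which is not inflated by individual reference values near zero the way per-element percentages are.

```python
import numpy as np


def relative_error(reference: np.ndarray, candidate: np.ndarray) -> float:
    """Norm-based relative error ||candidate - reference|| / ||reference||."""
    reference = np.asarray(reference, dtype=np.float64)
    candidate = np.asarray(candidate, dtype=np.float64)
    if reference.shape != candidate.shape:
        raise ValueError(f"shape mismatch: {reference.shape} vs {candidate.shape}")
    denom = np.linalg.norm(reference)
    if denom == 0.0:
        raise ValueError("reference tensor is all zeros")
    return float(np.linalg.norm(candidate - reference) / denom)
```

Applied to the same layer's output from the float model and the INT8 engine, a value of 0.05 corresponds to the "< 5%" range quoted above.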

Do you have any clue about this difference, and is there any way to debug into the ConvTranspose2d calculation in the TRT model?

@ttyio By the way, I've already fixed the "axis=(0)" in the pytorch_quantization code for ConvTranspose2d, but the results still differ a lot. Is there any other way to fix this problem, or should I leave the ConvTranspose layers unquantized? Thanks!
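Why the quantization axis matters: PyTorch stores `ConvTranspose2d` weights as `(in_channels, out_channels // groups, kH, kW)`, so per-output-channel scales live on axis 1, whereas `Conv2d` uses axis 0. The sketch below is a generic symmetric per-channel fake quantization in NumPy, not the pytorch_quantization implementation. It shows that reducing over the wrong axis can zero out a small-magnitude output channel. Which axis TensorRT expects for quantized ConvTranspose weights should be checked against the TensorRT documentation.

```python
import numpy as np


def fake_quant_per_channel(weight: np.ndarray, axis: int, num_bits: int = 8):
    """Symmetric per-channel fake quantization (quantize, then dequantize).

    One scale per index along `axis`, computed from the max |w| over all
    other axes. Returns (dequantized_weight, scale).
    """
    qmax = 2 ** (num_bits - 1) - 1  # 127 for INT8
    reduce_axes = tuple(a for a in range(weight.ndim) if a != axis)
    amax = np.max(np.abs(weight), axis=reduce_axes, keepdims=True)
    scale = np.where(amax > 0, amax / qmax, 1.0)  # avoid a zero scale
    q = np.clip(np.round(weight / scale), -qmax, qmax)
    return q * scale, scale


if __name__ == "__main__":
    # Toy ConvTranspose2d weight: 2 input channels, 3 output channels, 1x1 kernel.
    w = np.zeros((2, 3, 1, 1))
    w[:, 0, 0, 0] = [0.0013, -0.0021]  # small-magnitude output channel
    w[:, 1, 0, 0] = [0.5, -0.25]
    w[:, 2, 0, 0] = [1.0, 0.75]
    per_out, _ = fake_quant_per_channel(w, axis=1)  # one scale per output channel
    per_in, _ = fake_quant_per_channel(w, axis=0)   # one scale per input channel
    print(per_out[:, 0].ravel(), per_in[:, 0].ravel())
```

With `axis=1` the small output channel keeps its own scale and survives quantization; with `axis=0` its weights share a scale with much larger values and round to zero.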

@zhangjoey115, sorry for the delayed response. Could you share a simple ONNX model that reproduces this? Thanks!

@ttyio Thanks for the response! Please find the attached model for reference. The output of the final ConvTranspose differs between ONNX and TRT. Looking forward to your reply! convtrans.zip

Hello @ttyio, do you have any suggestions about the ConvTranspose processing in the model I attached? Or did I make a mistake in comparing the ONNX and TRT outputs? Looking forward to your reply. Thanks!

I can repro this accuracy issue using Polygraphy.

[I] Accuracy Comparison | onnxrt-runner-N0-06/14/22-23:03:14 vs. trt-runner-N0-06/14/22-23:03:14

[I] Comparing Output: 'output' (dtype=float32, shape=(1, 128, 128, 240)) with 'output' (dtype=float32, shape=(1, 128, 128, 240))

[I] Tolerance: [abs=1e-05, rel=1e-05] | Checking elemwise error

[I] onnxrt-runner-N0-06/14/22-23:03:14: output | Stats: mean=-0.008292, std-dev=0.049881, var=0.0024881, median=0.0014456, min=-0.30628 at (0, 109, 6, 66), max=0.14754 at (0, 37, 4, 148), avg-magnitude=0.032867

[I] ---- Histogram ----

Bin Range | Num Elems | Visualization
(-0.306 , -0.261 ) | 1091 |
(-0.261 , -0.216 ) | 27378 |
(-0.216 , -0.17 ) | 12329 |
(-0.17 , -0.125 ) | 170305 | ###
(-0.125 , -0.0794) | 117975 | ##
(-0.0794, -0.034 ) | 366587 | ########
(-0.034 , 0.0114 ) | 1827683 | ########################################
(0.0114 , 0.0568 ) | 1297045 | ############################
(0.0568 , 0.102 ) | 109710 | ##
(0.102 , 0.148 ) | 2057 |

[I] trt-runner-N0-06/14/22-23:03:14: output | Stats: mean=-0.007906, std-dev=0.051306, var=0.0026323, median=0.0011116, min=-0.29945 at (0, 109, 3, 7), max=0.14445 at (0, 118, 1, 30), avg-magnitude=0.034616

[I] ---- Histogram ----

Bin Range | Num Elems | Visualization
(-0.306 , -0.261 ) | 295 |
(-0.261 , -0.216 ) | 16885 |
(-0.216 , -0.17 ) | 48533 | #
(-0.17 , -0.125 ) | 131221 | ##
(-0.125 , -0.0794) | 143633 | ###
(-0.0794, -0.034 ) | 395246 | ########
(-0.034 , 0.0114 ) | 1758043 | ########################################
(0.0114 , 0.0568 ) | 1253736 | ############################
(0.0568 , 0.102 ) | 179307 | ####
(0.102 , 0.148 ) | 5261 |

[I] Error Metrics: output

[I] Minimum Required Tolerance: elemwise error | [abs=0.12891] OR [rel=1.1654e+06] (requirements may be lower if both abs/rel tolerances are set)

[I] Absolute Difference | Stats: mean=0.011486, std-dev=0.0092949, var=8.6396e-05, median=0.009384, min=3.7253e-09 at (0, 117, 55, 151), max=0.12891 at (0, 37, 4, 148), avg-magnitude=0.011486

[I] ---- Histogram ----

Bin Range | Num Elems | Visualization
(3.73e-09, 0.0129) | 2524715 | ########################################
(0.0129 , 0.0258) | 1104125 | #################
(0.0258 , 0.0387) | 248857 | ###
(0.0387 , 0.0516) | 40561 |
(0.0516 , 0.0645) | 10145 |
(0.0645 , 0.0773) | 3383 |
(0.0773 , 0.0902) | 295 |
(0.0902 , 0.103 ) | 56 |
(0.103 , 0.116 ) | 19 |
(0.116 , 0.129 ) | 4 |

[I] Relative Difference | Stats: mean=3.8879, std-dev=673.27, var=4.533e+05, median=0.4068, min=1.1756e-07 at (0, 34, 125, 6), max=1.1654e+06 at (0, 50, 65, 61), avg-magnitude=3.8879

[I] ---- Histogram ----

Bin Range | Num Elems | Visualization
(1.18e-07, 1.17e+05) | 3932153 | ########################################
(1.17e+05, 2.33e+05) | 4 |
(2.33e+05, 3.5e+05 ) | 2 |
(3.5e+05 , 4.66e+05) | 0 |
(4.66e+05, 5.83e+05) | 0 |
(5.83e+05, 6.99e+05) | 0 |
(6.99e+05, 8.16e+05) | 0 |
(8.16e+05, 9.32e+05) | 0 |
(9.32e+05, 1.05e+06) | 0 |
(1.05e+06, 1.17e+06) | 1 |

[E] FAILED | Difference exceeds tolerance (rel=1e-05, abs=1e-05)

[E] FAILED | Mismatched outputs: ['output']

[!] FAILED | Command: /home/pohanh/.local/bin/polygraphy run --onnxrt --trt --int8 build/convtrans.onnx
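Reading the log: the relative-difference maximum of 1.1654e+06 says little on its own. Assuming the relative difference is the absolute difference divided by the magnitude of the ONNX Runtime (reference) value, and since no absolute difference exceeds 0.12891, the reference at (0, 50, 65, 61) must satisfy |ref| ≤ 0.12891 / 1.1654e+06 ≈ 1.1e-7. The absolute statistics (mean 0.011486, max 0.12891) are the more informative signal here. The sketch below mimics an elementwise check in which an element passes if it meets either tolerance, which is our reading of the "OR" in the log, not Polygraphy's actual code.

```python
import numpy as np


def elemwise_compare(expected, actual, atol=1e-5, rtol=1e-5):
    """Return (passed, max_abs_diff, max_rel_diff) for two arrays.

    Relative difference is taken as |actual - expected| / |expected|
    (an assumption); it blows up wherever expected is close to zero.
    """
    expected = np.asarray(expected, dtype=np.float64)
    actual = np.asarray(actual, dtype=np.float64)
    absdiff = np.abs(actual - expected)
    with np.errstate(divide="ignore", invalid="ignore"):
        reldiff = absdiff / np.abs(expected)
    # An element is accepted if it satisfies either tolerance.
    ok = (absdiff <= atol) | (reldiff <= rtol)
    return bool(np.all(ok)), float(absdiff.max()), float(np.nanmax(reldiff))


if __name__ == "__main__":
    # A near-zero reference value produces a huge relative difference.
    print(elemwise_compare([1e-7, 0.5], [0.01, 0.5]))
```

On the CLI, `polygraphy run` accepts `--atol` and `--rtol` to loosen the default 1e-5 tolerances once an acceptable INT8 error level has been decided.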

I have filed an internal tracker (id: 3681310) and will let you know if we have any update.

This has been fixed internally and will be available in TRT 8.5 release. We are also adding this to our test suite so that it doesn't happen again in the future.
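Before re-running the comparison, it can help to confirm which TensorRT version is installed. This is a small sketch assuming the version string has the usual dotted form (for example the value of `tensorrt.__version__`); a version check alone does not prove the fix covers a particular model, so the Polygraphy comparison should still be repeated.

```python
from typing import Tuple


def has_min_version(version: str, minimum: Tuple[int, ...] = (8, 5)) -> bool:
    """Return True if a dotted version string is at least `minimum`."""
    parts = []
    for piece in version.split(".")[: len(minimum)]:
        # Keep only the leading digits of each component (e.g. "0rc1" -> 0).
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits) if digits else 0)
    parts += [0] * (len(minimum) - len(parts))  # pad short versions like "8"
    return tuple(parts) >= tuple(minimum)

# Example (requires TensorRT):
# import tensorrt
# print(has_min_version(tensorrt.__version__))
```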

Good news! Looking forward to the new TRT version!

Closing since there has been no activity for more than 14 days. Please reopen if you still have questions, thanks!