DeepLearningExamples

State-of-the-Art Deep Learning scripts organized by models - easy to train and deploy with reproducible accuracy and performance on enterprise-grade infrastructure.

I'm not good at Python, so I want to run Tacotron inference with LibTorch in C++. How do I convert Tacotron2 to LibTorch?

Adds `--save_ckpt` in the above documentation. I spent time training assuming that checkpoints would be saved by default in the pipeline, so I think adding this to the documentation would...

Related to **Model/Framework(s)** *BART*'s documentation **Describe the bug** The documentation to your [BART example](https://github.com/NVIDIA/DeepLearningExamples/blob/master/PyTorch/LanguageModeling/BART/README.md) is unclear and ambiguous about whether it supports pre-training or not. In my opinion you should...

I'm using the training script from https://github.com/NVIDIA/DeepLearningExamples/tree/master/TensorFlow2/Classification/ConvNets/efficientnet_v2/S/training/AMP/convergence_8xA100.sh on my A100-80G node with no parameter changes, and I am getting a lot of errors like `7: [1,5]: File "/usr/local/lib/python3.8/dist-packages/tensorflow/python/eager/execute.py", line 59, in`...

Related to **BERT/PyTorch** **Describe the bug** I want to get the exact_match and f1 scores when running prediction. I changed some...
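For readers unfamiliar with the two metrics named above, here is a minimal sketch of how exact match and token-level F1 are commonly computed for SQuAD v1.1-style question answering. It is not taken from the repository's scripts: the function names are illustrative, the normalization rules (lowercasing, dropping punctuation and English articles) are an assumption based on the usual SQuAD convention, and unanswerable questions are not handled.

```python
import re
import string
from collections import Counter

_PUNCTUATION = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """Lowercase, strip punctuation, drop English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in _PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, reference: str) -> float:
    """1.0 if the normalized strings are identical, otherwise 0.0."""
    return float(normalize_answer(prediction) == normalize_answer(reference))


def f1_score(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall after normalization."""
    pred_tokens = normalize_answer(prediction).split()
    ref_tokens = normalize_answer(reference).split()
    # Multiset intersection counts each shared token at most as often
    # as it appears in both answers.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)
```

With several reference answers per question, the usual approach is to take the maximum score over the references and then average over all questions.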

Related to **ConvNet/PyTorch** **Describe the bug** SyncBatchNorm is not used in the script https://github.com/NVIDIA/DeepLearningExamples/tree/master/PyTorch/Classification/ConvNets. Is this a potential bug when DDP is activated? I make this...

Hi, We are trying to train a multi-speaker model starting from the LibriTTS data and using the latest FastPitch commit. We selected the 50 speakers which have the most utterances...

Related to **BERT/PyTorch** **Describe the bug** After running for a long period, for example after 200,000 iterations, some steps are skipped. Such skipped steps are counted into the total...
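The excerpt above is truncated, so neither the cause of the skipped steps nor the exact counter involved is stated. As a rough illustration only, the sketch below assumes that a step is skipped when a mixed-precision overflow is detected, and it keeps attempted and applied steps in separate counters so that the stopping condition depends only on steps that actually updated the weights. `StepCounter` and `run` are hypothetical names, not part of the repository.

```python
from dataclasses import dataclass


@dataclass
class StepCounter:
    attempted: int = 0  # every iteration, including skipped ones
    applied: int = 0    # iterations where the optimizer updated the weights

    def record(self, overflow: bool) -> None:
        """Count one iteration; an overflow means the update was skipped."""
        self.attempted += 1
        if not overflow:
            self.applied += 1

    @property
    def skipped(self) -> int:
        return self.attempted - self.applied


def run(overflow_flags, max_applied_steps: int) -> StepCounter:
    """Iterate until `max_applied_steps` real updates have happened.

    `overflow_flags` stands in for the per-iteration overflow check
    (an assumption of this sketch).
    """
    counter = StepCounter()
    for overflow in overflow_flags:
        if counter.applied >= max_applied_steps:
            break
        counter.record(overflow)
    return counter
```

Stopping on `applied` instead of `attempted` means skipped iterations extend the run and do not consume the step budget.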

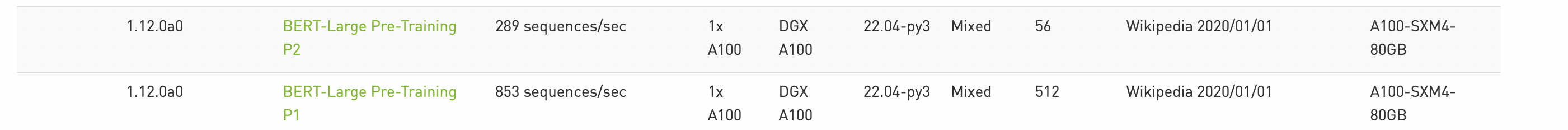

Hi, I have noticed that on an A100 80G, BERT Phase 1 and Phase 2 can reach throughputs of 853 and 289 sequences/sec, respectively. I want to reproduce this result...
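When comparing a local run against figures like these, it helps to compute throughput the same way each time. The sketch below assumes throughput is defined as sequences processed divided by wall-clock time, which may differ from how the repository's logs measure it (for example, whether warm-up iterations are excluded). The function names and the batch size, step count, and timing in the usage note are made up for illustration.

```python
def sequences_per_second(global_batch_size: int, steps: int,
                         elapsed_seconds: float) -> float:
    """Throughput as total sequences processed over wall-clock time."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return global_batch_size * steps / elapsed_seconds


def relative_gap(measured: float, reference: float) -> float:
    """Signed fraction by which `measured` differs from `reference`."""
    return (measured - reference) / reference
```

For example, `relative_gap(sequences_per_second(8192, 10, 96.0), 853.0)` compares a hypothetical measurement against the Phase 1 figure quoted above; a negative result means the local run is slower.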