DeepLearningExamples

[BERT/PyTorch] Unable to reproduce bert benchmark under A100

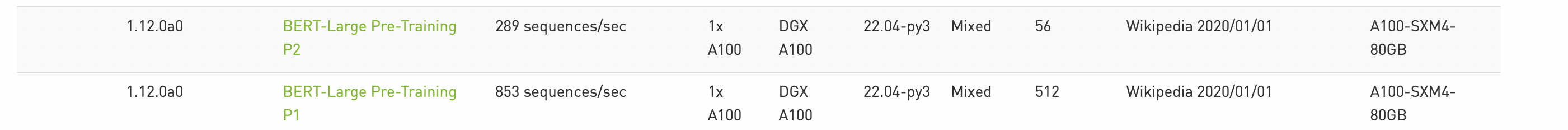

Hi, I have noticed that on A100 80G, BERT phase 1 and phase 2 reach a throughput of 853 and 289 sequences/sec respectively.

I want to reproduce this result on my own A100 (80G) GPU. But when following the instructions below, I only get 486 and 177 sequences/sec for BERT phase 1 and phase 2.

https://github.com/NVIDIA/DeepLearningExamples/tree/master/PyTorch/LanguageModeling/BERT#benchmarking

I noticed that the results on the NVIDIA website use NGC 22.04-py3 + PyTorch 1.12.0a0, so I built an NGC 22.04 Docker image. When I ran the BERT performance benchmark with the same command, I got this error:

device: cuda:0 n_gpu: 1, distributed training: False, 16-bits training: True
DLL 2022-06-27 02:08:09.119577 - PARAMETER Config : ["Namespace(allreduce_post_accumulation=False, allreduce_post_accumulation_fp16=False, amp=False, checkpoint_activations=False, config_file='bert_configs/large.json', cuda_graphs=False, disable_jit_fusions=False, disable_progress_bar=True, do_train=True, fp16=True, gradient_accumulation_steps=32, init_checkpoint=None, init_loss_scale=1048576, input_dir='pretrain/phase1/unbinned/parquet/', json_summary='results/dllogger.json', learning_rate=0.006, local_rank=-1, log_freq=1.0, loss_scale=0.0, max_predictions_per_seq=20, max_seq_length=128, max_steps=1.0, n_gpu=1, no_dense_sequence_output=False, num_steps_per_checkpoint=200, num_train_epochs=3.0, num_workers=1, output_dir='output', phase1_end_step=7038, phase2=False, profile=False, profile_start=0, resume_from_checkpoint=False, resume_step=-1, seed=42, skip_checkpoint=False, steps_this_run=1.0, train_batch_size=256, use_env=False, vocab_file='vocab/vocab', warmup_proportion=0.2843)"]
/opt/conda/lib/python3.8/site-packages/torch/jit/_recursive.py:234: UserWarning: 'bias' was found in ScriptModule constants, but it is a non-constant parameter. Consider removing it.
warnings.warn("'{}' was found in ScriptModule constants, "

Traceback (most recent call last):

File "run_pretraining.py", line 731, in

weight_size = weight.size()

grad_input = torch.matmul(grad_output, weight)

~~~~~~~~~~~~ <--- HERE

grad_weight = torch.matmul(grad_output.reshape(-1, weight_size[0]).t(), input.reshape(-1, weight_size[1]))

# Note: calling unchecked_unwrap_optional is only safe, when we

RuntimeError: expected scalar type Half but found Float

Am I missing anything?

For containers newer than 21.11, you will need to add an additional call to torch._C._jit_set_autocast_mode(True) here
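A minimal sketch of how that call could be guarded, assuming it should run before the model is scripted. The exact place in run_pretraining.py is not shown in this thread, and the helper name enable_jit_autocast is hypothetical. Since torch._C._jit_set_autocast_mode is a private switch, the sketch checks that it exists before calling it.

```python
def enable_jit_autocast(torch_module) -> bool:
    """Turn on TorchScript autocast if this build exposes the private switch.

    `torch_module` is the imported `torch` package. Returns True when the
    switch was found and called, False when this build does not have it.
    """
    torch_c = getattr(torch_module, "_C", None)
    setter = getattr(torch_c, "_jit_set_autocast_mode", None)
    if setter is None:
        return False
    setter(True)
    return True


# Usage (hypothetical placement, early in the training script):
#   import torch
#   enable_jit_autocast(torch)
```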

Also note that in the image shared, batchsize/gpu = 512 for phase1 and batchsize/gpu = 56 for phase2.

The default config here runs batch sizes per GPU of 256 and 32 for phase1 and phase2.

batch/gpu = train_batch_size / gradient_accumulation_steps

You can set gradient_accumulation_steps=16, train_batch_size_phase2=4088 and gradient_accumulation_steps_phase2=73 to run the desired batch sizes per GPU of 512 for phase1 and 56 for phase2.
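The arithmetic behind those settings can be checked with a small sketch. The helper name per_gpu_batch is hypothetical. The phase 2 values and the phase 1 accumulation count come from the reply above, the 7680 and 8192 totals follow from the command posted later in this thread and from 512 × 16, and the sketch assumes the total must divide evenly.

```python
def per_gpu_batch(train_batch_size: int, gradient_accumulation_steps: int) -> int:
    """batch/gpu = train_batch_size / gradient_accumulation_steps."""
    if train_batch_size % gradient_accumulation_steps != 0:
        raise ValueError("train_batch_size must be divisible by gradient_accumulation_steps")
    return train_batch_size // gradient_accumulation_steps


# Phase 2 settings suggested above: 4088 / 73
print(per_gpu_batch(4088, 73))   # 56

# A per-GPU batch of 512 with 16 accumulation steps needs a total of 512 * 16
print(per_gpu_batch(8192, 16))   # 512

# The command later in this thread uses --train_batch_size=7680 with 16 steps
print(per_gpu_batch(7680, 16))   # 480
```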

Thank you for this information, @sharathts. I added torch._C._jit_set_autocast_mode(True), and the script now runs successfully.

When I changed the batch size to 512, I got a CUDA OOM error.

Traceback (most recent call last):

File "run_pretraining.py", line 731, in

So I changed the batch size to 480, and this time I got a result. But it is still 400+ sequences/sec, which is not much of an improvement and far from 853 sequences/sec.

One reason I suspect is that my GPU cannot be set to its highest frequency, 1410 MHz.

In other words, to get the best result, do I need to run at 1410 MHz, or is the benchmark script not open-sourced yet?

Below is my script to run BERT phase 1. Please help me check it, thanks!

python3 -m torch.distributed.launch --nproc_per_node=1 /workspace/bert/run_pretraining.py --input_dir=/workspace/bert/data/pretrain/phase1/unbinned/parquet/ --output_dir=/workspace/bert/results/checkpoints --config_file=bert_configs/large.json --vocab_file=vocab/vocab --train_batch_size=7680 --max_seq_length=128 --max_predictions_per_seq=20 --max_steps=10 --warmup_proportion=0.2843 --num_steps_per_checkpoint=200 --learning_rate=6e-3 --seed=42 --fp16 --gradient_accumulation_steps=16 --allreduce_post_accumulation --allreduce_post_accumulation_fp16 --do_train --json-summary /workspace/bert/results/dllogger.json --disable_progress_bar --num_workers=4

LDDL - 2022-06-29 05:10:04,641 - datasets.py:128:init - node-0 - WARNING : lost 6/62583982=9.587117674934778e-06% samples in total
get_bert_pretrain_data_loader took 0.03091573342680931 s!
DLL 2022-06-29 05:10:04.641539 - PARAMETER SEED : 42
DLL 2022-06-29 05:10:04.641611 - PARAMETER train_start : True
DLL 2022-06-29 05:10:04.641636 - PARAMETER batch_size_per_gpu : 480
DLL 2022-06-29 05:10:04.641654 - PARAMETER learning_rate : 0.006
LDDL - 2022-06-29 05:10:04,711 - datasets.py:246:iter - node-0 - WARNING : epoch = 0
DLL 2022-06-29 05:10:32.376414 - Training Epoch: 0 Training Iteration: 0 average_loss : 11.1728515625 learning_rate : 0.0021104468032717705 skipped_steps : 1
DLL 2022-06-29 05:10:32.376605 - PARAMETER loss_scale : 524288.0
DLL 2022-06-29 05:10:32.376766 - PARAMETER checkpoint_step : 0
/opt/conda/lib/python3.8/site-packages/torch/nn/modules/module.py:1383: UserWarning: positional arguments and argument "destination" are deprecated. nn.Module.state_dict will not accept them in the future. Refer to https://pytorch.org/docs/master/generated/torch.nn.Module.html#torch.nn.Module.state_dict for details.
warnings.warn(
DLL 2022-06-29 05:10:50.967377 - Training Epoch: 0 Training Iteration: 1 average_loss : 11.1728515625 learning_rate : 0.0021104468032717705 skipped_steps : 1
DLL 2022-06-29 05:10:50.968261 - PARAMETER loss_scale : 262144.0
DLL 2022-06-29 05:11:06.035707 - Training Epoch: 0 Training Iteration: 1 average_loss : 11.17529296875 learning_rate : 0.0021104468032717705 skipped_steps : 2
DLL 2022-06-29 05:11:06.037040 - PARAMETER loss_scale : 131072.0
DLL 2022-06-29 05:11:21.106720 - Training Epoch: 0 Training Iteration: 1 average_loss : 11.173828125 learning_rate : 0.0021104468032717705 skipped_steps : 3
DLL 2022-06-29 05:11:21.108043 - PARAMETER loss_scale : 65536.0
DLL 2022-06-29 05:11:36.175364 - Training Epoch: 0 Training Iteration: 1 average_loss : 11.169921875 learning_rate : 0.0021104468032717705 skipped_steps : 4
DLL 2022-06-29 05:11:36.176255 - PARAMETER loss_scale : 32768.0
DLL 2022-06-29 05:11:51.274713 - Training Epoch: 0 Training Iteration: 0 average_loss : 11.17724609375 learning_rate : 0.0021104468032717705 skipped_steps : 6
DLL 2022-06-29 05:11:51.274829 - PARAMETER loss_scale : 16384.0
DLL 2022-06-29 05:11:51.274950 - PARAMETER checkpoint_step : 0
DLL 2022-06-29 05:12:34.153084 - Training Epoch: 0 Training Iteration: 1 average_loss : 11.17724609375 learning_rate : 0.0021104468032717705 skipped_steps : 6
DLL 2022-06-29 05:12:34.162706 - PARAMETER loss_scale : 16384.0
DLL 2022-06-29 05:12:49.257666 - Training Epoch: 0 Training Iteration: 1 average_loss : 11.599609375 learning_rate : 0.004220893606543541 skipped_steps : 7
DLL 2022-06-29 05:12:49.257780 - PARAMETER loss_scale : 8192.0
DLL 2022-06-29 05:13:04.354570 - Training Epoch: 0 Training Iteration: 1 average_loss : 11.59814453125 learning_rate : 0.004220893606543541 skipped_steps : 8
DLL 2022-06-29 05:13:04.354719 - PARAMETER loss_scale : 4096.0
DLL 2022-06-29 05:13:19.454927 - Training Epoch: 0 Training Iteration: 2 average_loss : 11.5791015625 learning_rate : 0.004220893606543541 skipped_steps : 8
DLL 2022-06-29 05:13:34.547416 - Training Epoch: 0 Training Iteration: 3 average_loss : 11.79296875 learning_rate : 0.00501995999366045 skipped_steps : 8
DLL 2022-06-29 05:13:49.642132 - Training Epoch: 0 Training Iteration: 4 average_loss : 9.8173828125 learning_rate : 0.004647579975426197 skipped_steps : 8
DLL 2022-06-29 05:14:04.739040 - Training Epoch: 0 Training Iteration: 5 average_loss : 9.8212890625 learning_rate : 0.0042426404543221 skipped_steps : 8
DLL 2022-06-29 05:14:19.832876 - Training Epoch: 0 Training Iteration: 6 average_loss : 9.36767578125 learning_rate : 0.0037947327364236116 skipped_steps : 8
DLL 2022-06-29 05:14:34.921243 - Training Epoch: 0 Training Iteration: 7 average_loss : 9.2021484375 learning_rate : 0.0032863353844732046 skipped_steps : 8
DLL 2022-06-29 05:14:50.017687 - Training Epoch: 0 Training Iteration: 8 average_loss : 9.119140625 learning_rate : 0.0026832816656678915 skipped_steps : 8
DLL 2022-06-29 05:15:05.113993 - Training Epoch: 0 Training Iteration: 9 average_loss : 9.015625 learning_rate : 0.0018973668338730931 skipped_steps : 8
DLL 2022-06-29 05:15:20.204955 - Training Epoch: 0 Training Iteration: 10 final_loss : 8.95849609375
DLL 2022-06-29 05:15:20.205117 - PARAMETER checkpoint_step : 10
DLL 2022-06-29 05:15:24.055250 - e2e_train_time : 325.98641705513 training_sequences_per_second : 455.7037746112786 final_loss : 8.95849609375 raw_train_time : 299.1416959762573

BTW, there is another place I needed to change to run under PyTorch 1.12: return torch.nn.functional.gelu(x, approximate="tanh")
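For reference, here is a pure-Python sketch of the tanh approximation that approximate="tanh" selects, next to the exact erf-based GELU. It only illustrates the formula and is not the repository's code. The function names are hypothetical.

```python
import math


def gelu_exact(x: float) -> float:
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_tanh(x: float) -> float:
    """Tanh approximation of GELU:
    0.5 * x * (1 + tanh(sqrt(2/pi) * (x + 0.044715 * x^3)))
    """
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))


# Compare the two forms on a few sample inputs.
for value in (-2.0, -0.5, 0.0, 1.0, 3.0):
    print(value, gelu_exact(value), gelu_tanh(value))
```

The two forms agree closely but not bit for bit, so loss curves may differ slightly between the variants.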

The benchmarking script does not set the frequency or any other system setting. You will have to ensure these yourself to get the expected performance.
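One way to check the clocks before a run is to query nvidia-smi, as in the sketch below. It assumes nvidia-smi is on the PATH and reads the clocks.sm and clocks.max.sm query fields. The helper names are hypothetical and this is not part of the benchmark scripts.

```python
import subprocess
from typing import Tuple


def parse_sm_clocks(csv_line: str) -> Tuple[int, int]:
    """Parse one 'current, max' line (in MHz) from nvidia-smi CSV output."""
    current, maximum = (int(field.strip()) for field in csv_line.split(","))
    return current, maximum


def query_sm_clocks(gpu_index: int = 0) -> Tuple[int, int]:
    """Return (current SM clock, max SM clock) in MHz for one GPU."""
    result = subprocess.run(
        [
            "nvidia-smi",
            f"--id={gpu_index}",
            "--query-gpu=clocks.sm,clocks.max.sm",
            "--format=csv,noheader,nounits",
        ],
        check=True,
        capture_output=True,
        text=True,
    )
    return parse_sm_clocks(result.stdout.strip().splitlines()[0])


# Usage on a machine with an NVIDIA driver installed:
#   current, maximum = query_sm_clocks(0)
#   print(f"SM clock {current} MHz of {maximum} MHz")
```

Sampling this while training is running shows whether the GPU holds its maximum clock (1410 MHz in the question above) under load.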

Can you match the reported performance on finetuning?