Mayorc1978


> nmkd: Tested with a locally cloned GFPGAN 1.4 and it works as expected. You should post complaints on the nmkd site, not here.

Same problem here. Did you solve this?

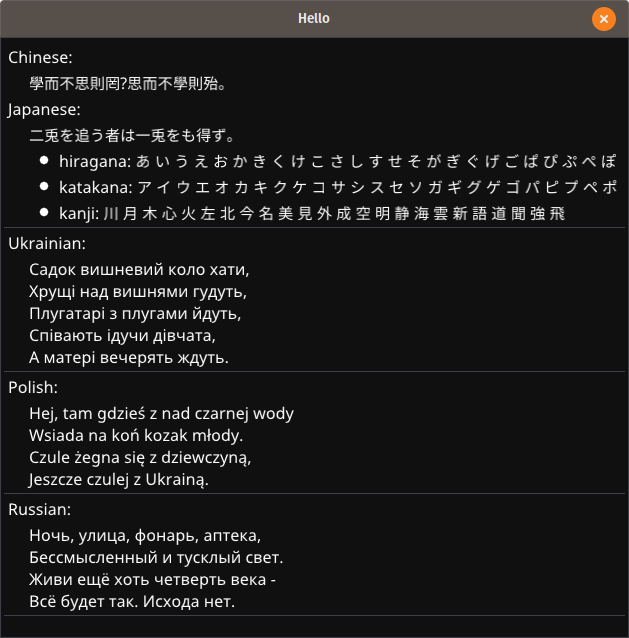

> This should work now, please see: https://github.com/podgorskiy/bimpy#non-english-text `bp.load_fonts(chinese=True, latin_ext=True, japanese=True, cyrillic=True)`: this line of code crashes the program with this error, and by the way the demo...

> I have the same problem and haven't solved it. Can you specify the other changes needed to make it work? Even with ExponentialML's corrections I still get...

Can you specify what goes in Template and Chat Template? Also, there was a problem with stop tokens in early Llama 3; how can I be sure that is fixed with llamafile?
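For reference on the Template / Chat Template question, here is a minimal Python sketch of the Llama 3 Instruct prompt layout and of cutting generated text at the stop tokens. The special-token strings follow Meta's published Llama 3 Instruct format; the helper names are hypothetical, and whether llamafile's Template fields expect exactly this text is an assumption I haven't verified.

```python
# Hypothetical helpers; token strings follow the published Llama 3 Instruct format.
BEGIN = "<|begin_of_text|>"
EOT = "<|eot_id|>"            # end-of-turn token; generation should stop here
END_OF_TEXT = "<|end_of_text|>"


def header(role: str) -> str:
    """Return the role header placed before each message."""
    return f"<|start_header_id|>{role}<|end_header_id|>\n\n"


def build_llama3_prompt(system: str, user: str) -> str:
    """Build a single-turn prompt that ends where the assistant should start writing."""
    return (
        BEGIN
        + header("system") + system + EOT
        + header("user") + user + EOT
        + header("assistant")
    )


def truncate_at_stop(text: str, stops=(EOT, END_OF_TEXT)) -> str:
    """Cut generated text at the earliest stop token, if any is present."""
    cut = len(text)
    for stop in stops:
        idx = text.find(stop)
        if idx != -1:
            cut = min(cut, idx)
    return text[:cut]


if __name__ == "__main__":
    print(build_llama3_prompt("You are a helpful assistant.", "Hi!"))
    print(truncate_at_stop("Hello there!<|eot_id|><|start_header_id|>user"))
```

If a front end only stops on `<|end_of_text|>` and ignores `<|eot_id|>`, the model tends to keep going and start writing the next turn itself, which is the kind of stop-token problem worth checking for.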

> It doesn't; however, you can use [LiteLLM](https://github.com/BerriAI/litellm) to wrap the endpoint and make it OpenAI-compatible. So it's possible to run Ollama in Docker and, let's say, it...
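To show what "OpenAI-compatible" means in practice, here is a minimal sketch that builds (but does not send) an OpenAI-style `/chat/completions` request aimed at a local LiteLLM proxy sitting in front of Ollama. The proxy URL and the model name are placeholders I picked for illustration, not values from this thread; adjust them to however your proxy is configured.

```python
import json
import urllib.request

# Assumption: a LiteLLM proxy listens locally and forwards to Ollama.
# Both values below are placeholders, not taken from the thread.
PROXY_BASE = "http://localhost:4000"
MODEL = "ollama/llama3"


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request(PROXY_BASE, MODEL, "Hello!")
    print(req.full_url)
    # Sending it requires the proxy to be running:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp)["choices"][0]["message"]["content"])
```

Any tool that only lets you change the base URL can then be pointed at the proxy, since the request shape is the same one it would send to OpenAI.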

Which Discord bot are we talking about?

> > Link: https://drive.google.com/file/d/1krOLgjW2tAPaqV-Bw4YALz0xT5zlb5HF/view (thanks to that Reddit guy) > > Is there any reason why there are different inswapper_128.onnx files? This one at the Google Drive...
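One way to check whether two `inswapper_128.onnx` downloads really differ is to compare their SHA-256 hashes. This is a minimal sketch using only the standard library; the function names are hypothetical, and it only tells you *whether* the files differ, not why.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so large .onnx models don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_model_file(a: Path, b: Path) -> bool:
    """True only if both files are byte-for-byte identical (size check first, then hash)."""
    if a.stat().st_size != b.stat().st_size:
        return False
    return sha256_of(a) == sha256_of(b)


if __name__ == "__main__":
    import sys

    first, second = map(Path, sys.argv[1:3])
    print(sha256_of(first))
    print(sha256_of(second))
    print("identical" if same_model_file(first, second) else "different")
```

Run it as `python compare.py path/to/first.onnx path/to/second.onnx` (the script name is arbitrary).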

I didn't test it, so I can't confirm this. But in PowerShell you can simply do this and it should work: `$env:OPENAI_API_BASE="http://localhost:1234/v1"; python devika.py` with LM Studio running. For...
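`$env:` sets the variable for the current PowerShell session, and child processes such as `python devika.py` inherit it. On the Python side, picking it up usually looks something like this minimal sketch. I haven't checked how devika actually reads it, so the function name and the fallback URL here are assumptions for illustration only.

```python
import os

# Assumption: the script reads OPENAI_API_BASE from the environment.
# The fallback is the public OpenAI endpoint, used here only as an example default.
DEFAULT_BASE = "https://api.openai.com/v1"


def resolve_api_base(env=os.environ) -> str:
    """Return the configured base URL, without a trailing slash."""
    return env.get("OPENAI_API_BASE", DEFAULT_BASE).rstrip("/")


if __name__ == "__main__":
    print(resolve_api_base())
```

Passing `env` as a parameter just makes it easy to test with a plain dict instead of the real environment.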

Most of the tools that let you specify the base URL worked great for me, but a few are still giving me problems (**flowise** included). So if you could...