Vanilla

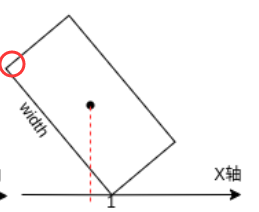

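> It should work. Converting the five-parameter representation to four corner points uses an affine transformation, and that process is not affected by the specific angle definition. Thanks a lot for the answer! This idea actually came up while I was converting the rolabelImg format to DOTA: under the rolabelImg definition, the angle θ between the width side and the x-axis in the figure above is 0.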
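For reference, the five-parameter to four-point conversion mentioned above can be sketched as follows. This is a minimal illustration, not code from rolabelImg or any DOTA toolkit: the function name `rbox_to_corners`, the radian convention, and the corner ordering are all assumptions made for the example.

```python
import math


def rbox_to_corners(cx, cy, w, h, theta):
    """Convert a rotated box (cx, cy, w, h, theta) to four corner points.

    Hypothetical helper: theta is assumed to be in radians, measured from
    the x-axis to the side of length w.
    """
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    # Corners of the unrotated box, centred at the origin.
    local = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    # Affine transform: rotate each corner by theta, then translate to (cx, cy).
    return [
        (cx + x * cos_t - y * sin_t, cy + x * sin_t + y * cos_t)
        for x, y in local
    ]


def corner_set(points, ndigits=6):
    """Round and sort corners so two boxes can be compared as point sets."""
    return sorted((round(x, ndigits), round(y, ndigits)) for x, y in points)


# The same rectangle described under two angle conventions:
# (w=4, h=2, theta=0) versus (w=2, h=4, theta=pi/2).
box_a = rbox_to_corners(10, 20, 4, 2, 0.0)
box_b = rbox_to_corners(10, 20, 2, 4, math.pi / 2)
print(corner_set(box_a) == corner_set(box_b))
```

Both descriptions yield the same set of corners, only in a different order, which is why the four-point form does not depend on which side the angle is measured against.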

@Darren-pfchen Could you give some advice about controlling the number of rois please?

Could you please take a moment to look into this question when you have the time? @kalekundert

> FYI, I'm just a user of this software, not a maintainer. A lot of the math that goes on behind the scenes is beyond my understanding. But I'm familiar...

> @Luo-Z13 The total number of iterations is a bit strange. Did you modify the settings in config? My script:
> ```
> NPROC_PER_NODE=${GPU_NUM} xtuner train llava_llama3_8b_instruct_full_clip_vit_large_p14_336_lora_e1_gpu8_finetune \
>   --deepspeed deepspeed_zero3_offload --seed 1024...
> ```

> @Luo-Z13 How many GPUs are you using for training? I use 4*A100(40G)

> @Luo-Z13 How many GPUs are you using for training? And the pre-training of LLaVA-llama3 is normal.

> @Luo-Z13 > > Under your configuration, the total dataset size is 4 * 4 * 23076 = 369216. However, the correct size of llava fine-tuning dataset is ~650000. This...
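The arithmetic in the comment above can be checked with a few lines. The comment is truncated and does not say what each factor stands for, so the variable names below (GPU count, per-GPU batch size, iteration count) are an assumed reading, not something the thread confirms.

```python
# Assumed meaning of the three factors in "4 * 4 * 23076".
num_gpus = 4
per_gpu_batch = 4
iterations = 23076

samples_seen = num_gpus * per_gpu_batch * iterations

# Approximate size of the llava fine-tuning dataset quoted in the comment.
expected_samples = 650_000

print(f"samples covered: {samples_seen}")
print(f"fraction of expected dataset: {samples_seen / expected_samples:.2f}")
```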

> @Luo-Z13 > > Yes, that's possible. I suggest comparing your data format and content with llava's to see if the issue lies within the data. > > Additionally, here...
