Can't understand reward scaling in value clipping of PPO

https://github.com/DLR-RM/stable-baselines3/blob/5a70af8abddfc96eac3911e69b20f19f5220947c/stable_baselines3/ppo/ppo.py#L231

Hi, I'm new to PPO. I can't understand why in your code, you say value clipping is related to reward scaling. I think you just clip the value , nothing happened to reward scaling or related to it.

How do you define the value?

https://github.com/DLR-RM/stable-baselines3/blob/5a70af8abddfc96eac3911e69b20f19f5220947c/stable_baselines3/ppo/ppo.py#L230-L235

Sorry, I used the wrong link. I don't understand this sentence, "# NOTE: this depends on the reward scaling". The value I refer to is the variable which names values of your code. You clip it with rollout_data.old_values. I can't see anything related to reward scaling here, you just call the clip function. I can't understand why you say this depends on the reward scaling.

Still, what is the definition of the value? if you manage to answer that question, you should be able to answer yours.

I think the value is the output of the value network when giving the current observation. What does it relate to my question? Sorry, I may need more help. >:

I think the value is the output of the value network when giving the current observation. What does it relate to my question?

Yes, but this is a bit of a circular definition, how do you train the value network? why is it called value network? so, what is the definition of value function?

I'm talking about theory: https://spinningup.openai.com/en/latest/spinningup/rl_intro.html#value-functions

I think I understand what did you mean now. Based on this post before, https://github.com/hill-a/stable-baselines/issues/216. I think what you mean to say is that value function is the cumulated future reward with discounting, it naturally depends on the scale of the reward. Thus, the value clip depends on the reward scale. You are not clipping the ratio of old values and new values. You just clip the difference. This difference depends on the reward scale. Thus, the clipping depends on the reward scale. Am I right?

I actually pose this issue because I don't understand why we have to do reward scaling. It looks very strange.

Could you give me some insights into why we should do it?

Could you give me some insights into why we should do it?

This difference depends on the reward scale. Thus, the clipping depends on the reward scale. Am I right?

exactly =)

actually pose this issue because I don't understand why we have to do reward scaling. It looks very strange.

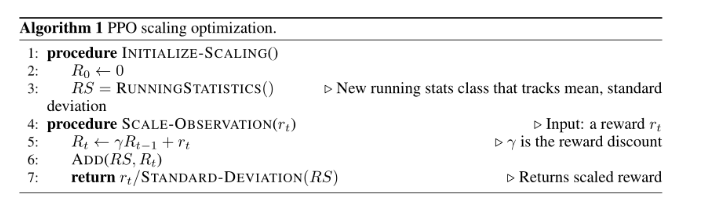

https://github.com/hill-a/stable-baselines/issues/698

https://github.com/DLR-RM/stable-baselines3/issues/348#issuecomment-795147337

Thank you. However, I read the reference but still can't understand. I need some further explanations. Here is what I understand now.

We need to make advantage have nice scale. Normalizing reward (ex: divided by 10) at each step may not be sufficient. Because if the episode length is large, the return (summation of discounted future reward) would still be very big. What I don't understand is how reward scaling affects the magnitude of the return at each step.

Suppose we have an episodic game. Say we have a trajectory (s1,a1,s2,....s50,a50,...s100,a100). The return of (s50,a50) would be much bigger than the return of (s100,a100). That is v(s50) >> v(s100). It would possibly make neural network hard to fit. However, it is naturally by definition of value function that the state corresponding to early stage of the game have larger value. What's wrong with it?

I guess it is because what we really want is the advantage of different actions at each state. This advantage should be of the same magnitude across different steps of the game. To be more precise. Say at time steps 50, we visit s50 and have two possible actions, a50_1,a50_2. At time steps 100, we visit s100 and have two possible actions, a100_1,a100_2. adv(s50,a50_1) and adv(s100,a100_1) would have different scales. It will make action network only cares about fitting s50 to the right action. So, we need to normalize the advatage across different time steps. Am I correct? However, I still don't know how reward scaling makes the advantage between each time step at the same scale.

Doesn't the reward scaling (or advantage normalization) break the policy gradient theorem (or advantage normalization)? Does the value network still fit the actual value function now when we have reward scaling?