Badis

Hello, I wanted to point out that the output can vary with the number of threads selected, even when the seed stays constant. I have an...

Hello, I noticed when trying the chat with Bob that the first token is always empty. 1 -> '' 4103 -> ' Trans' 924 -> 'cript'...

# Expected Behavior Hello, I wanted to convert the alpaca-native 7b GPTQ file (pt file) into a ggml file with the convert-gptq-to-ggml.py script https://github.com/ggerganov/llama.cpp/blob/master/convert-gptq-to-ggml.py # Current Behavior The problem is...

# Environment and Context Hello, Before jumping to the subject, here's the environment I'm working with: - Windows 10 - Llama-13b-4bit-(GPTQ quantized) model - Intel® Core™ i7-10700K [AVX | AVX2...

Hello, Your [Windows binary releases](https://github.com/ggerganov/llama.cpp/releases) have probably been built with MSVC and I think there's a better way to do it. # Expected Behavior I have an Intel® Core™ i7-10700K...

Hello, I've heard that I could get BLAS activated on my Intel i7-10700K by installing this [library](https://www.intel.com/content/www/us/en/developer/tools/oneapi/onemkl.html). Unfortunately, nothing happened: after compiling again with Clang, I still have no...

### Describe the bug Hello, I can't choose 128 in the groupsize parameter even though the model I'm trying to load was quantized with groupsize 128. That means I...

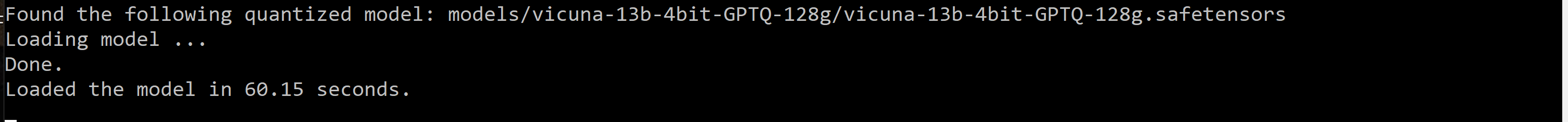

### Describe the bug Hello, When I load a model the regular way, it usually takes 50-60 seconds. But when I load a model with the monkey patch...

### Describe the bug Hello, I can't run my Triton models with the monkey patch for LoRAs ### Is there an existing issue for this? - [X] I have searched...

Hello, Several people have reported this issue with the v1.1 model, which did not occur with v1.0. Do you plan on fixing it in the future?