Badis

> Well 4 bit by itself is deterministic. 8/fp16 was not, unless you count producing a stream of unending garbage every time as deterministic. Turning off do_sample allows 8bit to...

> Yes.. but `do_sample = False` generations are repetitive garbage and you use (NovelAI-Sphinx Moth) in your example. With randomness enabled generation parameters, you can avoid the problems like I...

I have a 3060, and weirdly it works for me on the CUDA part

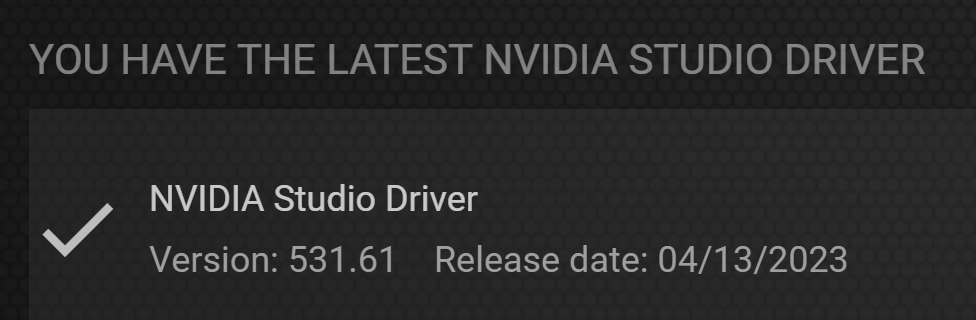

I still have this issue even with the latest NVIDIA driver

I tried it, and it doesn't look like you can talk with the model without an image; you are obligated to give it one to get it going. That's a shame...

No, Qwen have their own tokenizer unfortunately (and somehow they never upload their tokenizer.model so...). I tried with llama's tokenizer just for the sake of it and it outputs gibberish.

I second this, there should be a way to keep the model in your VRAM until you switch to another model. Edit: Don't use any flags and use those settings,...

@ggerganov I just tried with t = 14, 12 and 5 and I get the same result each time. Looks like it's fixed! Great work, I appreciate it 😄

I've got a new error somehow during the loading of the 13b lora ``` CUDA SETUP: Loading binary C:\Users\Utilisateur\anaconda3\envs\textgen\lib\site-packages\bitsandbytes\libbitsandbytes_cuda116.dll... Adding the LoRA alpaca-lora-13b to the model... Traceback (most recent...

I asked GPT-4 whether it saw any errors in your code; here's its answer: ``` It's possible that there could be issues with the math operations in the code that...