aztk


AZTK powered by Azure Batch: On-demand, Dockerized, Spark Jobs on Azure

In cases where the cluster will not recover from failure (no master node selected and all nodes in the `unusable` or `start_task_failed` state), the method should fail early. Currently, it will just...
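The fail-early check described above could look roughly like the following sketch. It is not AZTK's actual wait logic: `get_status`, `wait_until_ready`, `is_unrecoverable`, and `ClusterUnrecoverableError` are hypothetical names introduced for illustration, and only the two state names come from the issue text.

```python
import time

# State names taken from the issue text; how they map onto real node
# objects in the SDK is an assumption.
UNRECOVERABLE_STATES = frozenset({"unusable", "start_task_failed"})


class ClusterUnrecoverableError(RuntimeError):
    """Raised when waiting longer cannot produce a usable cluster."""


def is_unrecoverable(master_node_id, node_states):
    """True when no master was selected and every node is in a failed state."""
    states = list(node_states)
    return (
        master_node_id is None
        and len(states) > 0
        and all(state in UNRECOVERABLE_STATES for state in states)
    )


def wait_until_ready(get_status, poll_seconds=5.0, timeout_seconds=900.0,
                     sleep=time.sleep):
    """Poll until a master node exists, failing early on a dead cluster.

    `get_status` is a hypothetical callable that returns a tuple of
    (master_node_id or None, iterable of node state strings).
    """
    deadline = time.monotonic() + timeout_seconds
    while True:
        master_node_id, node_states = get_status()
        if master_node_id is not None:
            return master_node_id
        if is_unrecoverable(master_node_id, node_states):
            raise ClusterUnrecoverableError(
                "no master node selected and all nodes are unusable "
                "or failed their start task"
            )
        if time.monotonic() >= deadline:
            raise TimeoutError("cluster did not become ready in time")
        sleep(poll_seconds)
```

An empty node list is deliberately treated as recoverable here, on the assumption that nodes may simply not have been allocated yet.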

Hello, I'm using the SDK (v0.8.0) to spin up an AZTK cluster. I'm also using a custom Docker image, and on one instance I forgot to pass the Docker registry credentials,...

Currently, we only run tests on the latest version & changes. We should run tests on every supported version. Possibly that would just be the current version & changes, and...

I currently have issues with entire clusters failing to startup due to apt-get problems, presumably setting up the host: ``` E: Unable to locate package linux-image-extra-4.15.0-1013-azure E: Couldn't find any...

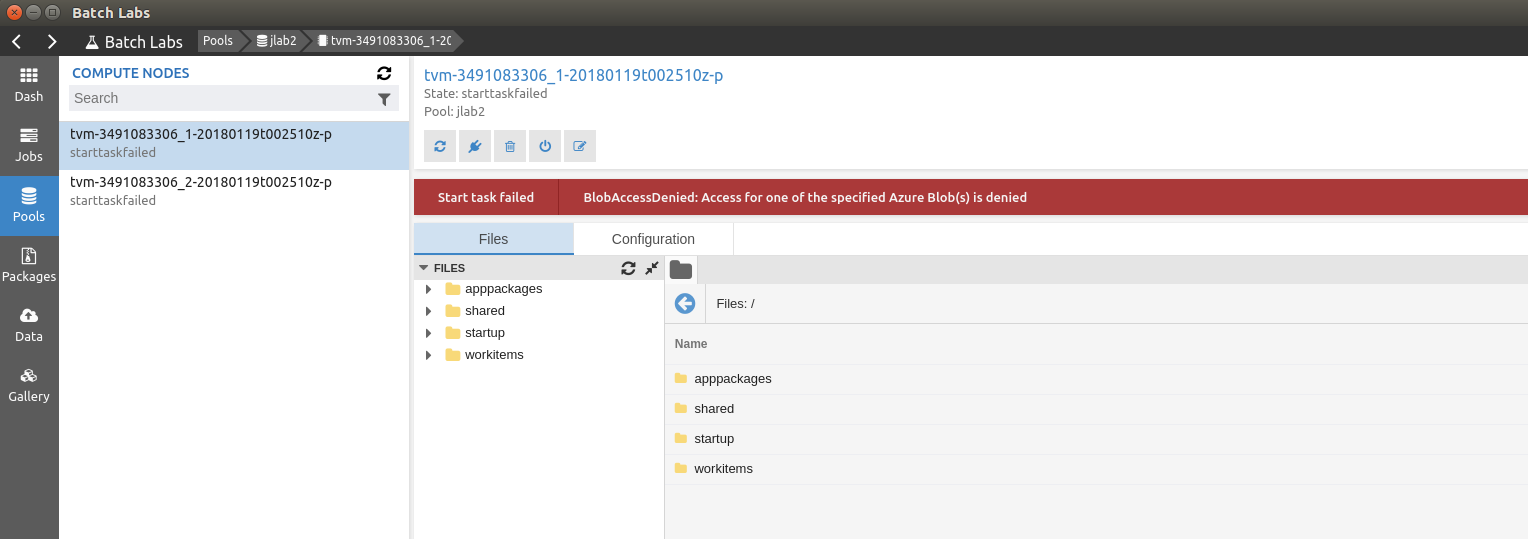

The error is:

```sh
BlobSource: https://******.blob.core.windows.net/spark-node-scripts/node-scripts.zip?sr=b&sp=r&se=2018-01-19T02%3A22%3A57Z&sv=2015-07-08&sig=mSVuZVmWRZ9EKij8DUtQSraFRSE1zALfLoXt7tnvfhY%3D
FilePath: /mnt/batch/tasks/startup/wd/node-scripts.zip
```

Can we get around using blob SAS tokens if we have access to the shared keys?

We should:

1. [Add a list of mirrors to download from](http://www.apache.org/dyn/closer.cgi/hadoop/common/hadoop-2.8.2/hadoop-2.8.2.tar.gz)
2. Select one at random
3. Add timing statements for debugging purposes
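A minimal sketch of the three steps above, assuming a plain list of mirror base URLs. The function names and the injectable `fetch` parameter are hypothetical and exist only so the logic can be exercised without network access; this is not how AZTK's node scripts currently download Hadoop.

```python
import random
import time
import urllib.request


def pick_mirror(mirrors, rng=random):
    """Return one mirror base URL chosen uniformly at random."""
    if not mirrors:
        raise ValueError("mirror list is empty")
    return rng.choice(list(mirrors))


def timed_download(mirrors, path, fetch=None, rng=random):
    """Download `path` from a randomly chosen mirror and report the timing.

    `fetch` is a hypothetical hook taking a URL and returning bytes;
    by default it performs a real HTTP GET with urllib.
    """
    if fetch is None:
        def fetch(url):
            with urllib.request.urlopen(url, timeout=60) as response:
                return response.read()

    url = pick_mirror(mirrors, rng).rstrip("/") + "/" + path.lstrip("/")
    start = time.monotonic()
    try:
        data = fetch(url)
    finally:
        # Timing statement for debugging, printed on success and on failure.
        elapsed = time.monotonic() - start
        print(f"download from {url} took {elapsed:.2f}s")
    return url, data, elapsed
```

Passing a seeded `random.Random` as `rng` makes the mirror choice reproducible when debugging a slow or failing mirror.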

Since pulling from master (5761a3663adb261f49e0e7fc2301b327ee833c12), I can only see newly created clusters in `aztk spark cluster list`, but none of our long-running instances deployed with v0.6.0. If I check out v0.6.0,...