Yet-Another-EfficientDet-Pytorch

Why is my inference speed so slow?

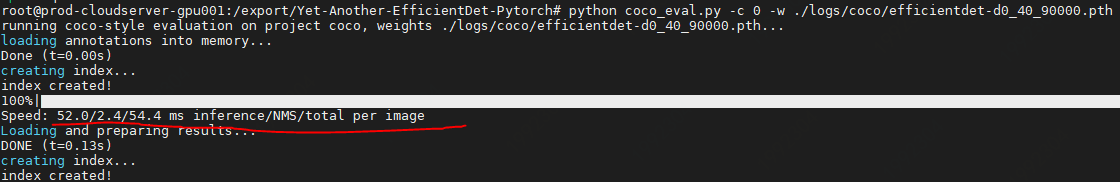

Hi, why is my inference speed so slow? GPU: tested on a P40, efficientdet-d0, image size 512x512.

What do "inference" and "NMS" cover? I mean, where is the postprocessing counted? Postprocessing takes more time when there are lots of boxes.
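To illustrate why postprocessing cost grows with the number of boxes, here is a minimal pure-Python sketch of greedy NMS. It is not the code this repository uses; the function names `iou` and `nms` and the `(x1, y1, x2, y2)` box layout are assumptions made for the example. Each candidate is compared against every box kept so far, so the worst case is quadratic in the number of boxes.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep box i only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep
```

Filtering out low-score boxes before NMS reduces the number of pairwise comparisons, which is usually the cheapest way to cut this stage's time.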

Uh, here "inference" is the model's inference time and "NMS" is the postprocessing. I did not measure the preprocessing time.
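For anyone comparing numbers, a rough sketch of how the two stages could be timed separately is below. The helper names `time_stage` and `fps` are hypothetical, not part of this repository. The `sync` argument is an assumption for GPU use: CUDA kernels are launched asynchronously, so without a synchronization call before reading the clock, the measured "inference" time can be too small and the remainder gets attributed to whatever stage runs next.

```python
import time


def time_stage(fn, *args, sync=None, warmup=3, iters=20):
    """Return the mean wall-clock seconds per call of fn(*args)."""
    def barrier():
        # On a GPU, pass a synchronization function so that pending
        # asynchronous work is finished before the clock is read.
        if sync is not None:
            sync()

    # Warm-up calls are excluded from the measurement.
    for _ in range(warmup):
        fn(*args)
    barrier()

    start = time.perf_counter()
    for _ in range(iters):
        fn(*args)
    barrier()
    return (time.perf_counter() - start) / iters


def fps(seconds_per_image):
    """Convert a per-image latency in seconds to frames per second."""
    return 1.0 / seconds_per_image
```

With PyTorch on a GPU, this could be called as `time_stage(model, x, sync=torch.cuda.synchronize)` for the forward pass and again for the postprocessing function, where `model` and `x` stand for your own network and input batch.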

It's weird. For now, though, I have found that the RTX 2080 Ti outperforms the P100 and V100 (and, in this case, the P40) on group convs. BTW, almost all convs in effdet are group convs. I think it may also be related to the CPU, since PyTorch runs dynamically in Python. I'm using an i5-8400, whose frequency is 4.0GHz at most, which is far higher than that of most server CPUs.

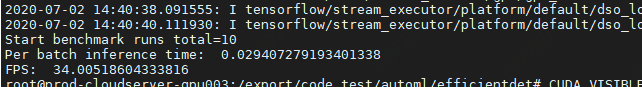

Anyway, thanks! Yes, my server CPU is a 40-core Intel(R) Xeon(R) CPU E5-2650 v4 @ 2.20GHz. But when I test the original official TensorFlow version (measuring the network latency, from the first conv to the last class/box prediction output), the FPS is better: 34.

I'm hitting the same problem. How did you resolve it?