[FEATURE]: Aggregating all metrics under one experiment

Contact Details [Optional]

No response

Describe the feature you'd like

When using MLflow as the experiment tracker, it would be useful to have one experiment track all metrics across pipelines. Currently, the metrics logged in each pipeline end up in a separate experiment, one per pipeline.

Is your feature request related to a problem?

We have a use case with two pipelines: a training-evaluation pipeline and an export pipeline.

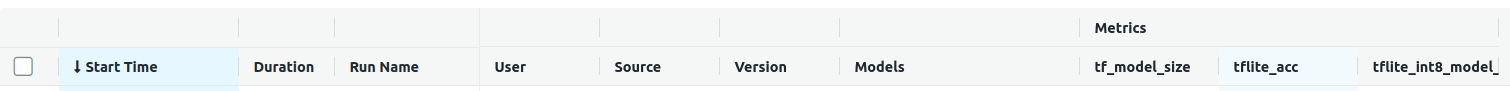

The training-evaluation pipeline takes care of training and evaluating the model. We log various metrics related to this pipeline, as shown in the figure below.

The export pipeline exports the trained model to different formats, and we log metrics related to this pipeline as shown below.

We want to aggregate all these metrics under one experiment in MLflow dashboard.

How do you solve your current problem with the current status-quo of ZenML?

ZenML version 0.6.1 had an `experiment_name` argument that users could pass to the decorator, which tracked all metrics under one experiment. This argument seems to have been removed in the latest version. Would it be possible to add it back as an optional argument? It would be helpful for use cases like ours.
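To make the request concrete, here is a minimal, self-contained Python sketch contrasting the current behavior with the requested one. It deliberately uses neither ZenML nor MLflow: `InMemoryTracker`, its `log_metric` method, the two pipeline functions, the metric names, and the metric values are all hypothetical placeholders. The only idea taken from this issue is an optional `experiment_name` that, when set, overrides the default of one experiment per pipeline.

```python
from collections import defaultdict
from typing import DefaultDict, List, Optional, Tuple


class InMemoryTracker:
    """Hypothetical stand-in for an experiment tracker (not a ZenML or MLflow API).

    Logged metrics are grouped by experiment name; each entry records
    (pipeline_name, metric_name, value).
    """

    def __init__(self) -> None:
        self.experiments: DefaultDict[str, List[Tuple[str, str, float]]] = defaultdict(list)

    def log_metric(
        self,
        pipeline_name: str,
        key: str,
        value: float,
        experiment_name: Optional[str] = None,
    ) -> None:
        # Default (the current behavior described in this issue): the experiment
        # is named after the pipeline. An explicit experiment_name overrides it,
        # so several pipelines can share one experiment.
        experiment = experiment_name or pipeline_name
        self.experiments[experiment].append((pipeline_name, key, value))


def training_evaluation_pipeline(tracker: InMemoryTracker, experiment_name: Optional[str] = None) -> None:
    # Hypothetical metrics with placeholder values, for illustration only.
    tracker.log_metric("training_evaluation", "train_loss", 0.25, experiment_name)
    tracker.log_metric("training_evaluation", "val_accuracy", 0.91, experiment_name)


def export_pipeline(tracker: InMemoryTracker, experiment_name: Optional[str] = None) -> None:
    # Hypothetical export metric with a placeholder value.
    tracker.log_metric("export", "onnx_export_seconds", 3.2, experiment_name)


# Current behavior: each pipeline lands in its own experiment.
default_tracker = InMemoryTracker()
training_evaluation_pipeline(default_tracker)
export_pipeline(default_tracker)
print(sorted(default_tracker.experiments))  # ['export', 'training_evaluation']

# Requested behavior: both pipelines log to one shared experiment, while each
# pipeline is still a separate unit that can be run or skipped on its own.
shared_tracker = InMemoryTracker()
training_evaluation_pipeline(shared_tracker, experiment_name="model_lifecycle")
export_pipeline(shared_tracker, experiment_name="model_lifecycle")
print(sorted(shared_tracker.experiments))  # ['model_lifecycle']
print(len(shared_tracker.experiments["model_lifecycle"]))  # 3
```

The design point is that the grouping key is decoupled from the pipeline identity, so aggregation does not require merging the pipelines. In MLflow itself, the experiment a run is logged under can be chosen with `mlflow.set_experiment`; how (or whether) ZenML exposes that choice depends on the ZenML version.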

The workaround we have considered is to combine the different pipelines into a single pipeline, which would put all metrics under one experiment. The problem with this approach is that we can no longer switch individual pipelines on or off as before; we would have to run all of them every time.

Any other comments?

No response

Thank you for the ticket, @dudeperf3ct! Feature requests now go to our roadmap, where other community members can also vote for them. Could you perhaps create a ticket here? https://zenml.hellonext.co/b/feedback

Hi there @dudeperf3ct, I've added the feature suggestion to the Roadmap on Hellonext:

https://zenml.hellonext.co/p/aggregating-all-metrics-under-one-experiment

As ZenML is open source, we rely on community contributions to support new features. Please let us know if you or anyone you know might be able to work on this feature.

Thank you again! :)