Text rendering has glitches for pathologically large files

Describe the bug

When opening a large file (e.g. larger than several hundred MB), the text rendering of lines starts to overlap towards the end of the file. In the example given below, this starts to happen ~300,000 lines in, where the cursor is rendered slightly offset from the line highlight, and the problem gets progressively worse towards the end of the file, where lines completely overlap each other (but not all lines).

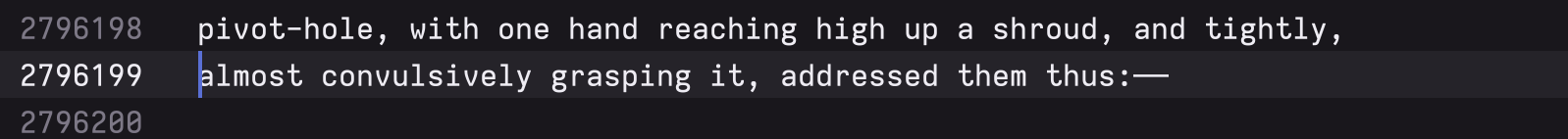

For the reproduction steps below, this image shows the last line that has a cursor aligned with the height of the line highlight:

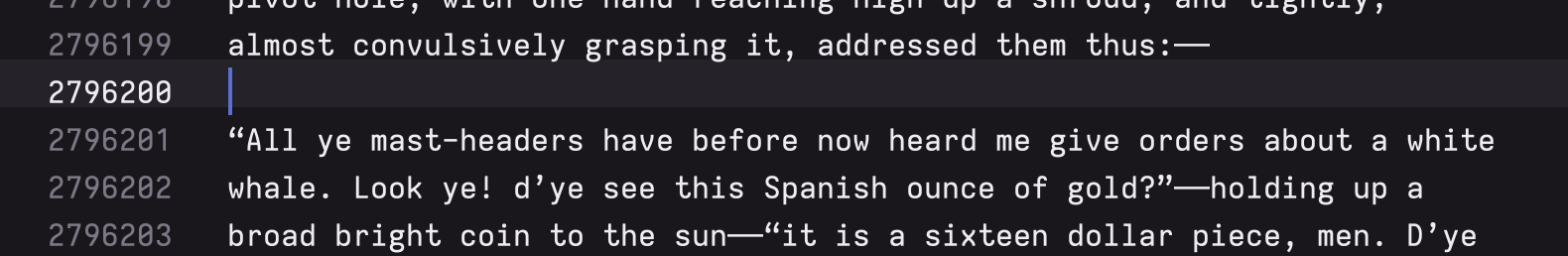

These next two images show the first two lines that have a mis-aligned cursor:

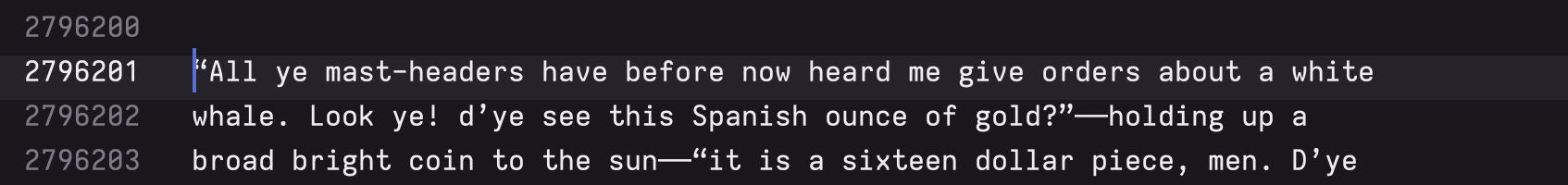

And eventually things get so bad that text just overlaps itself.

Note also that the highlighted line number is at a different location from the cursor and the line selection highlight.

To Reproduce

- Generate a large file. For this example, I downloaded "Moby Dick" from Project Gutenberg, saved it as moby-dick.txt, and cat'ed it multiple times to get a 1.2GB file:

  curl -o moby-dick.txt https://www.gutenberg.org/files/2701/2701-0.txt

  for i in $(seq 1 1000); do cat moby-dick.txt >> moby-dick-1GB.txt; done

  Note: a text file was used to avoid issues with syntax highlighting and large files.

- Open the file in Zed (and wait a minute for it to load).

- Control-G and go to line 2796200 (note the cursor is incorrectly offset on all lines after this line, but correctly offset on all lines before it).

- Command-DownArrow to go to the end of the file.

- Note that some text lines overlap each other.

Expected behavior

Text should render correctly, and cursors, line-numbers and line highlights should all align with each other regardless of the size of the underlying file.

Opening large files like this is definitely not going to be common; however, any editor that claims to be fast should handle pathological cases like this correctly, because it's the first thing people are going to do to see how well those claims hold up 😄

Screenshots

See above.

Environment:

- Architecture: Intel

- Mac OS Version: macOS 12.3.1

- Zed Version [e.g. 0.39.0]:

Wonder if it is related at all to

- https://github.com/zed-industries/zed/issues/5371

I think it likely is (I realised after posting that I should've searched existing issues first).

Anyway, if you increase the size of the file you used in zed-industries/zed#5371 by 10-fold, and add a few longer lines, I'm sure you'll see the same thing.

I wonder if it's something like the line-height being a non-integral value e.g. 48.000000357 which is being clamped to 48 for some things but not others, and then at some point these 'different' line heights are multiplied by line number.

For small line numbers the result of the calculation will be the same between clamped/non-clamped calculations, but for large enough values of line number, 48 * line number vs 48.000000357 * line number would be enough to start causing pixel offsets.
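The arithmetic behind this hypothesis can be sketched in a few lines of Python. This is only an illustration of the guess above, not Zed's actual layout code: the function name `pixel_drift` is hypothetical, and the two line heights are the example values from the comment.

```python
def pixel_drift(line_number, exact_height=48.000000357, clamped_height=48.0):
    """Vertical gap, in pixels, between a y-position computed with the
    exact line height and one computed with the clamped line height.

    Both heights are the illustrative values from the comment above,
    not values read from Zed.
    """
    return exact_height * line_number - clamped_height * line_number


# Print the gap at a small, a medium, and a very large line number.
for line in (1_000, 300_000, 2_796_200):
    print(f"line {line:>9,}: drift = {pixel_drift(line):.6f} px")
```

With these illustrative values the two results differ by well under a thousandth of a pixel at line 1,000, but by almost a full pixel at line 2,796,200, which is the kind of gap that would show up as a cursor sitting visibly off its line highlight.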

Thanks for exploring this! Yeah, as Joseph mentioned we are somewhat aware of this.

We'll need to figure out where those non-clamped values are, but it's possible we'll update the system we use to render text in the buffer first I imagine (to allow us to render elements that are arbitrary in height – currently all elements in the buffer need to be multiples of the buffer line height.)

I love that people are trying to break this :D

Have you found a file large enough to make the editor lag significantly/hang?

The 1GB file I talk about above takes about a minute to load and has spinning beach balls from about 5 seconds in. It also takes up 9GB of RAM when open (according to Activity Monitor) from a starting value of 135MB.

Closing the file (with Zed still open) drops memory usage to 309 MB. Reopening and closing (and reopening and closing) brings this up to 900MB - initially I thought this might be a leak, but it caps out at 900MB even after multiple opens and closes so maybe it's some sort of pool rather than a leak.

The 2GB version is similar but takes longer to load and also uses 18GB of RAM, with 773MB in use after closing the file the first time (didn't try multiple reloads yet).

The 100GB version stops loading after a minute and control returns to Zed but no file is displayed - I guess there is an error and Zed bails on loading the file, but at least Zed stays up. Memory usage however is high at 32GB even though nothing is open.

Once loaded though, navigation of large files is mostly without lag (trackpad scrolling upwards from the end of the file sometimes causes a slight pause), and jumping to line numbers works instantly.

> I love that people are trying to break this :D

I write a tool that has a file viewer, and have a bunch of files ready to go of pathological cases that cause issues for most other viewers/editors (but not mine 😄).

Zed has handled the pathological cases pretty well so far - with the exception of slow loading, high memory usage, and this rendering glitch when dealing with extremely large files.

The pathological cases are mostly focused on regular text though - things like really long single lines of text, unicode outside the BMP, MBs of nothing but thin characters e.g. i (which is not so much an issue in monospaced fonts, and I haven't investigated how to change fonts in Zed yet) and then just really large files.

I'll spend a bit of time seeing if I can come up with some pathological files containing source code that mess with things like syntax highlighting and the like.

I recall an earlier version of Zed entirely froze while trying to use Go To Line within a massive file - I had to kill the instance and reopen it.

> Have you found a file large enough to make the editor lag significantly/hang?

I tried a bunch of pathological source files (long block comments, full screen of alternating syntax colours every 2 chars etc) and Zed handled them all really well 👍 however I was able to use a text file masquerading as a source file that made Zed lag significantly. I've put the details in a new issue zed-industries/zed#5353

I started thinking about this again today and it sparked me to question: "At what point is it good enough?" For every large file that the devs optimize for, a larger one can be made. I'm all for having the fastest editor around, but it seems like some sort of line has to be drawn somewhere; defining that line seems extremely difficult.

> For every large file that the devs optimize for, a larger one can be made

This is true, however things reach a point where designing things to work well with 1GB files usually means you can handle files of size N GB without much issue (at least this was true for the file viewer I wrote).

Even if that's not the case, and it can't be made fast because of data-structures required for other more important functionality, the editor still shouldn't show rendering glitches. That is, it should be slow but correct, rather than fast but incorrect.

That is a fair point - that things should render correctly, even if something is taking a long time to process.

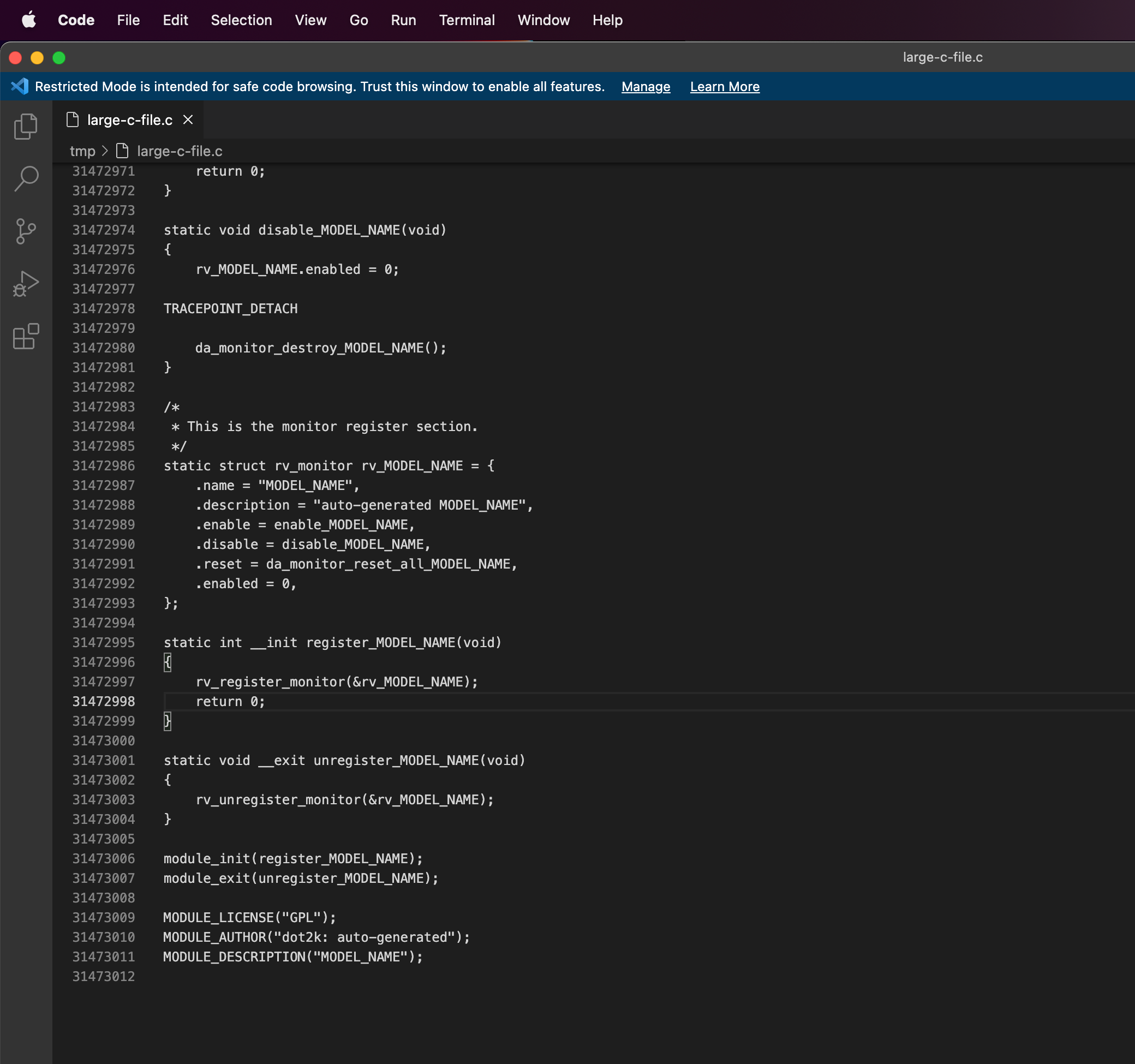

I tried it on the whole Linux kernel source code (1.2GB) as a single file just to see how it would handle syntax highlighting + the large file. It seems that Zed gave up on syntax highlighting the file? Not sure.

I checked out the Linux kernel at revision 8ed710da2873c2aeb3bb805864a699affaf1d03b and ran the following command to produce the C file:

find -type f -name '*.h' -o -name '*.c' | while read file; do cat "$file"; done > /tmp/large-c-file.c

https://user-images.githubusercontent.com/10504957/206196971-a2bceca7-5c0b-45da-938f-4d64d34bb6c0.mp4

- CPU: M1 Pro
- macOS: 12.6.1
- Zed version: 0.65.2

@b-fuze, just curious, do you know which editors (GUI or terminal-based) might do well in handling that file? I'm curious to know which editors are handling these massive files better than Zed currently is.

@JosephTLyons interestingly, VSCode opens the file a lot faster than Zed does and can display the lines at the bottom just fine. I understand that this is an edge case and I am not saying that this should be a supported use-case, nor do I expect it to be. I would say that reaching VSCode's time for opening would be ideal though. i.e. Don't wait a whole minute freezing the editor with the spinning beach ball to open it. The line rendering issue should probably be solved as well, but it's not as important imo as not freezing the editor for a long time when opening it. I'd actually be okay with it giving a message saying that it can open it but it'll take a while as a stop gap measure.

The only reason I tested this was because I'm really curious how the syntax highlighting performs, and with other large files (much smaller than this one though) Zed actually does a phenomenal job with the syntax highlighting. So I was curious how it would handle a very large C file that isn't just some repeated text 😄

I don't actually see any real problem here other than notifying the user of what will happen before doing it. Here's a screenshot of VSCode though:

> I tried it on the whole Linux kernel source code (1.2GB) as a single file just to see how it would handle syntax highlighting + the large file.

I love that you tried this.

> I'm curious to know which editors are handling these massive files better than Zed currently is.

For me, part of it is not so much which editors are better, but rather pushing the limits to see what Zed is capable of.

These cases are unlikely to be encountered in most real world usage (although I know of people who have sometimes needed to edit multi-GB JSON and/or CSV files).

Hey, I know it's been a while, but I've just landed a fix that should help there:

- https://github.com/zed-industries/zed/pull/9176

It should be out on Preview today. Could you give it a spin? :)

@osiewicz thanks for looking at this! I tested it in Zed Preview 0.126.2 2423c60a9f97c2fbfbe970cff7862bfe5fae31ed

I did the same thing as before with the large C file that was just all of the Linux source code in a single file. There still seem to be some issues with rendering those lines at the far bottom

- CPU: M1 Pro
- macOS: 12.6.1

Hey, thanks for checking it out! The builds for the new Preview aren't out yet (and I can also still see the issue on the current Preview); the update should be out in a few hours. ;)

Alright, 0.127.0 is out now @b-fuze

@osiewicz I just tested it on the latest preview v0.127.0. It might have helped in some way, though there is still glitching happening

I'm sure this issue is not really in any way important, but in case you want to test it yourself you can quickly produce the C file this way:

git clone --depth 1 https://github.com/torvalds/linux.git

find ./linux -type f \( -name '*.h' -o -name '*.c' \) -exec cat "{}" \; > large-c-file.c

It should produce a ~1.2GB C file.

Okay, I see; thank you for the repro. I think it did help a bit, though definitely not enough to warrant saying that it's a solved case. Back to the drawing board I go =)

syntax highlighting already breaks after only 4000 lines. different issue I know, but just as annoying

Zed's code base has a bunch of files over 4000 lines and syntax highlighting works just fine for those

@WeetHet Then it's either working for Rust specifically or you haven't looked close enough. For C++ it gets really weird after 4000 lines. Almost everything stops being highlighted and is only shown as the plain text color. Some things remain highlighted, but most things aren't. I would've guessed if treesitter was failing then there'd be no highlighting at all, so if it's not treesitter it should be zed itself

same file but a few thousand lines further up:

it's also not the theme. I've tested the same file in the same locations with a builtin zed default theme, and the same issue occurs, just with different colors

@insunaa that looks like a problem with the TreeSitter parser for C++ and looks unrelated to this large file rendering issue. It looks like the parser might have broken at line 4292. Try deleting that block of static variables and see if the syntax highlighting issue is fixed.

You can also use Zed's built-in syntax highlighting tooling to debug the syntax highlighting at that line:

@b-fuze is right, this issue is not related to large file handling. The issue with rendering is language-agnostic (you don't need a tree-sitter grammar to repro it).

@b-fuze I concede my point. You're right, deleting the static variables unbreaks some of the highlighting later on. The first line where the debug tool starts spewing errors is here

and I honestly can't tell what's wrong there.

do you have a suggestion for where I can open an issue in the appropriate place, or where I can leave a comment on an existing issue? I had not found one on zed

Bumped. Even as of today, 6th March 2025, this issue still persists in the latest version.

@JosephTLyons because you asked.

Sublime will open this file on my machine in less than 25 seconds.

It will render, scroll and go to line just fine. Syntax highlighting off by default due to file size.

It can syntax highlight it in 2.5 minutes and stay responsive once highlighted. Pretty insane

Issue reproduced on Zed v0.188.5

Sublime Text:

Zed:

I had some issues with the search highlighting in large files (affects normal highlighting as well), did not see any issues with text/font rendering (Zed 189.5)