xtensor_fixed constructors and destructors are always called?

The following code uses xtensor_fixed as a primitive type inside an xtensor.

I'd expect it to behave similarly to a primitive type like float in terms of allocation and deallocation.

However, it seems that when using xtensor_fixed constructors and deconstructors are called for each element. Is this expected behavior?

template<typename F>

double benchmark(const F &f) {

using std::chrono::high_resolution_clock;

const auto t1 = high_resolution_clock::now();

f();

const auto t2 = high_resolution_clock::now();

const auto s = std::chrono::duration_cast<std::chrono::milliseconds>(t2 - t1);

return s.count() / 1000.0f;

}

void test_xt() {

typedef xt::xtensor_fixed<float, xt::xshape<3>> cell_type;

xt::xtensor<cell_type, 3> data;

std::cout << benchmark([&data] () {

data.resize({2, 20000, 20000});

}) << std::endl;

std::cout << benchmark([&data] () {

data.resize({0, 0, 0});

}) << std::endl;

xt::xtensor<float, 3> data2;

std::cout << benchmark([&data2] () {

data2.resize({2, 20000, 20000});

}) << std::endl;

}

The code above outputs these timings:

2.208

3.039

0

Whereas I'd expect:

0

0

0

Compiling using clang++ -std=c++17 -O3 on:

Apple clang version 13.0.0 (clang-1300.0.29.30)

Target: arm64-apple-darwin21.2.0

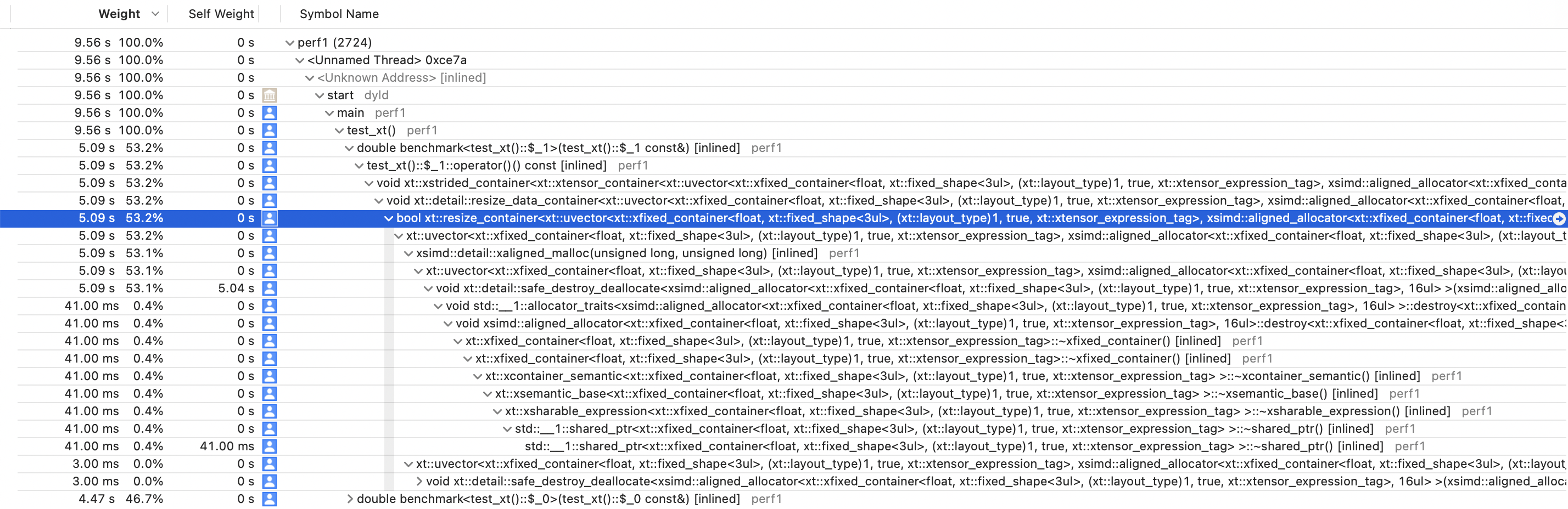

Do you have a trace from some tool like perf or Instruments on MacOS and we could see what destructor takes time?

Just ran Instruments on the resize loop. I'm lost what happens inside the actual resize. A shared ptr?

hmm, the shared_ptr and sharable_expression looks like the culprit here.

Maybe you could try with this: https://github.com/xtensor-stack/xtensor/pull/1733

@wolfv This was exactly the solution. Allocation and deallocation times are now down to 0 in the test code above.

It's cool that with this single change, my pyalign library got 3 times faster than it was 20 minutes ago https://github.com/poke1024/pyalign/blob/main/docs/benchmark_5000_10000.svg :smiley: :thumbsup:

@JohanMabille maybe we should make this the default?

@wolfv Yep, and actually there is some on-going work on performances that points to this structure as a possible culprit for performance issues, so I wonder if we should not simply find another solution for sharing expressions.

This (changing the shared policy) should be done in a single PR.