Apply back pressure to second chance writers when resizing

The second chance cache has been observed using too much memory; see #1818.

After reviewing the code, I noticed that the cache can be full (and resizing) while no back pressure is applied to producers, which can keep creating new cache entries.

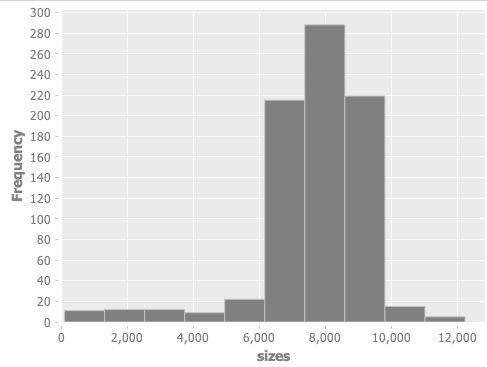

I ran some experiments and observed that even with 2 threads the cache can grow far beyond the :cache-size limit (see the examples below).

Examples

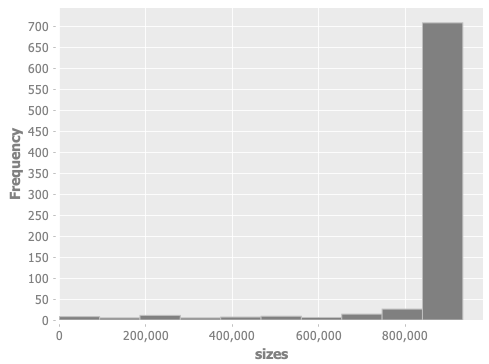

I set up a quick test that filled the cache with integers over 10 seconds, and sampled the size of the hot cache every 10 milliseconds.
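A rough sketch of that kind of harness, in Java since the cache runs on the JVM. This is not the test used for the graphs here: `CacheFillSampler`, `run`, and its parameters are hypothetical names, and the cache is passed in as a pair of callbacks so any implementation can be plugged in.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.function.IntConsumer;
import java.util.function.IntSupplier;

// Hypothetical harness: writer threads insert distinct integers while the
// calling thread samples the reported cache size at a fixed interval.
final class CacheFillSampler {
    static List<Integer> run(IntConsumer put, IntSupplier size,
                             int writers, long durationMillis, long sampleMillis) {
        AtomicBoolean stop = new AtomicBoolean(false);
        List<Thread> threads = new ArrayList<>();
        for (int w = 0; w < writers; w++) {
            final int offset = w;
            Thread t = new Thread(() -> {
                // Each writer steps by `writers`, so keys never collide across threads.
                int i = offset;
                do {
                    put.accept(i);
                    i += writers;
                } while (!stop.get());
            });
            t.start();
            threads.add(t);
        }
        List<Integer> samples = new ArrayList<>();
        long deadline = System.nanoTime() + durationMillis * 1_000_000L;
        try {
            do {
                samples.add(size.getAsInt());
                Thread.sleep(sampleMillis);
            } while (deadline - System.nanoTime() > 0);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            stop.set(true); // always release the writers
        }
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return samples;
    }
}
```

Calling `run(put, size, 2, 10_000, 10)` would correspond to the 2 thread case: 10 seconds of writes, sampled every 10 milliseconds.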

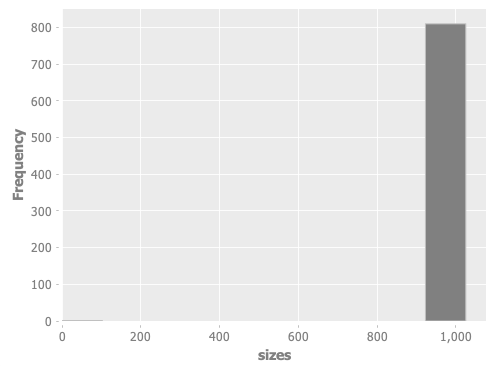

`:cache-size` is 1024, `:adaptive-sizing?` is false

1 Thread

2 Threads

42 Threads

Semaphore solution (initial choice)

Force cache misses to await any pending resize when the cache is bigger than the max size, which applies back pressure. A small buffer of 1024 lets a few misses proceed without blocking when contention is low. We reuse the resize semaphore to cause the blocking behaviour.
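To make the idea concrete, here is a minimal Java sketch of the approach, not the code in this PR. It assumes the buffer is counted in entries above the max size and that the thread that wins the semaphore performs the resize inline; `BackPressureCache` and its members are hypothetical names, and eviction just removes arbitrary entries rather than applying the second chance policy.

```java
import java.util.Iterator;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Semaphore;
import java.util.function.Function;

// Hypothetical sketch: misses wait on the resize semaphore once the cache
// has grown past maxSize + buffer. Loaders must not return null.
final class BackPressureCache<K, V> {
    private final ConcurrentHashMap<K, V> hot = new ConcurrentHashMap<>();
    private final Semaphore resizeSemaphore = new Semaphore(1);
    private final int maxSize;
    private final int buffer;

    BackPressureCache(int maxSize, int buffer) {
        this.maxSize = maxSize;
        this.buffer = buffer;
    }

    V computeIfAbsent(K key, Function<K, V> loader) {
        V hit = hot.get(key);
        if (hit != null) {
            return hit; // hits never block
        }
        V loaded = loader.apply(key);
        V previous = hot.putIfAbsent(key, loaded);
        resizeOrAwait();
        return previous != null ? previous : loaded;
    }

    int size() {
        return hot.size();
    }

    private void resizeOrAwait() {
        int size = hot.size(); // only a hint under concurrent writes
        if (size <= maxSize) {
            return;
        }
        if (resizeSemaphore.tryAcquire()) {
            try {
                evictDownTo(maxSize);
            } finally {
                resizeSemaphore.release();
            }
        } else if (size > maxSize + buffer) {
            // Beyond the buffer: block until the pending resize has finished.
            resizeSemaphore.acquireUninterruptibly();
            resizeSemaphore.release();
        }
    }

    private void evictDownTo(int target) {
        // Placeholder eviction: drops arbitrary entries, not second chance.
        Iterator<K> keys = hot.keySet().iterator();
        while (hot.size() > target && keys.hasNext()) {
            keys.next();
            keys.remove();
        }
    }
}
```

Misses that find the cache between the max size and the buffer limit skip the wait, which is what keeps low-contention workloads from blocking.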

Counter solution

An alternative solution that was considered is an atomic counter initialised to the max size. Each put decrements the counter; if the result is negative, the put must spin (and increment) until the counter is no longer negative. Evictions increment the counter.

This should bring about the desired back pressure without risking the .size problem (see @sw1nn's comment), and as far as coordination is concerned it should be very cheap. Measurement pending.
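A minimal Java sketch of the counter idea follows. It takes one reading of "spin (and increment)": a put that drives the counter negative undoes its own decrement and retries. `PutPermits` and its method names are hypothetical, and `Thread.onSpinWait()` needs Java 9 or later.

```java
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical sketch: the counter holds the number of free slots.
final class PutPermits {
    private final AtomicLong permits;

    PutPermits(long maxSize) {
        this.permits = new AtomicLong(maxSize);
    }

    // Call before inserting a new entry; spins while the cache is full.
    void beforePut() {
        while (permits.decrementAndGet() < 0) {
            permits.incrementAndGet(); // undo our decrement before retrying
            Thread.onSpinWait();       // spin hint to the runtime
        }
    }

    // Call once per evicted entry to free a slot.
    void afterEvict() {
        permits.incrementAndGet();
    }

    long available() {
        return permits.get();
    }
}
```

Because the counter tracks puts and evictions directly, the decision to block never reads the map's size.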

Thanks @sw1nn 🙏. So using .size is more of a heuristic or hint in this case. That said, as a heuristic it does seem to apply enough back pressure to keep the cache size almost entirely within bounds over time, at least for my test with 42 threads banging on the cache all at once! I am aware that this is only one test, however, and there may be access scenarios that would cause problems.

I also have not yet performed the E2E perf tests (though I should get to that today), which may show an unacceptable performance regression.

As discussed on Slack, let's step back and talk about other solutions that might be a better fit before committing to this one, given the concern.

Closing; feel free to re-open if/when we come back to it.