Inserting initial clouds (insert_init_cloud) produces unrealistic lateral boundary errors

Describe the bug

When the new option insert_init_cloud = .true. is used, extreme and long-lived lateral boundary errors are observed. Even 24 hours into the simulation, spurious storm(s) located near the boundary remain fixed around the same location and do not dissipate. They seem to last indefinitely, at least while the flow of air mass through the domain stays similar.

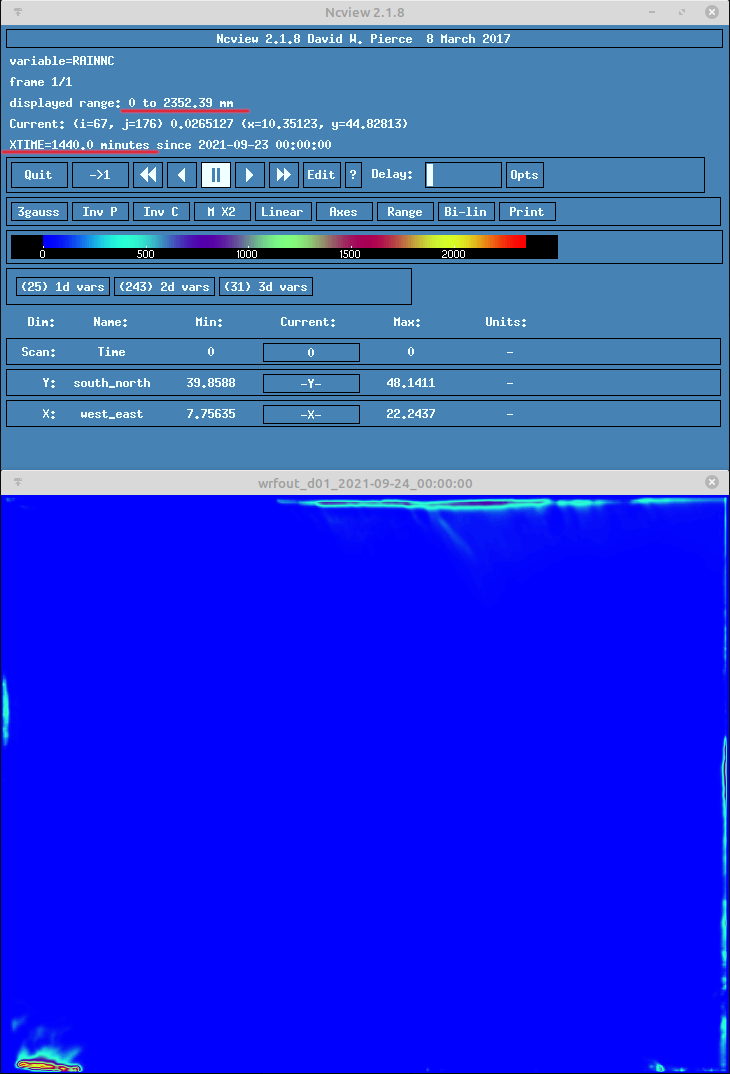

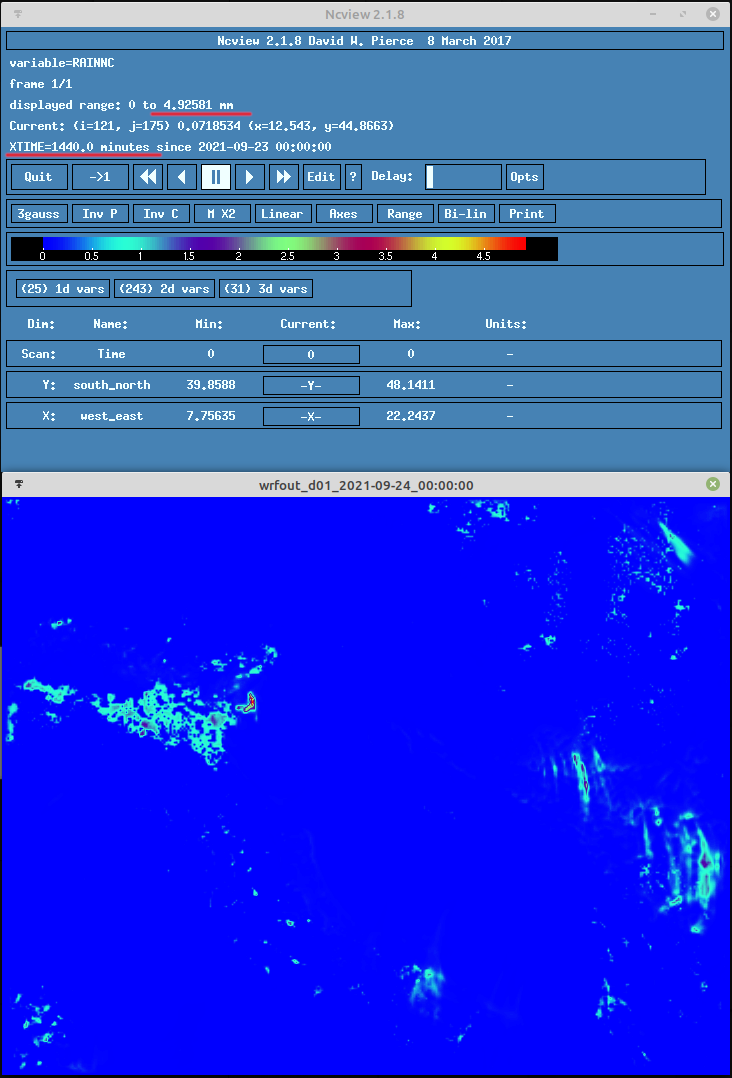

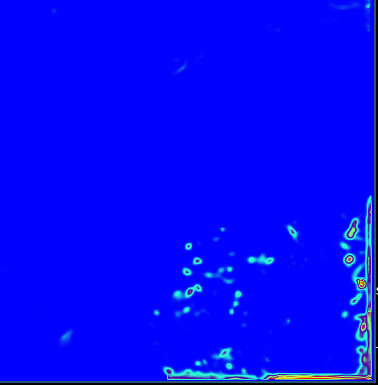

Judging from Mr. Thompson's description of the new feature in PR #1323, the effect on the simulation should be dramatic, and it certainly does improve the simulation significantly in the first hours. However, I suspect something is not implemented properly: as the included screenshots show, the run with the option turned on produces >2500 mm of rain in 24 h, while the run without it produces a maximum of ~5 mm over the same period. No other changes to the namelists or forcing data were made between the two experiments; the only difference is insert_init_cloud = .true. versus .false.

To Reproduce

Steps to reproduce the behavior:

- WRF version 4.3, Intel compiler

- insert_init_cloud = .true. (complete namelists attached)

- See screenshots below

Expected behavior

The expected output is relatively similar to the one shown in the screenshot without insert_init_cloud = .true. (the default behaviour), as that is much closer to a realistic simulation.

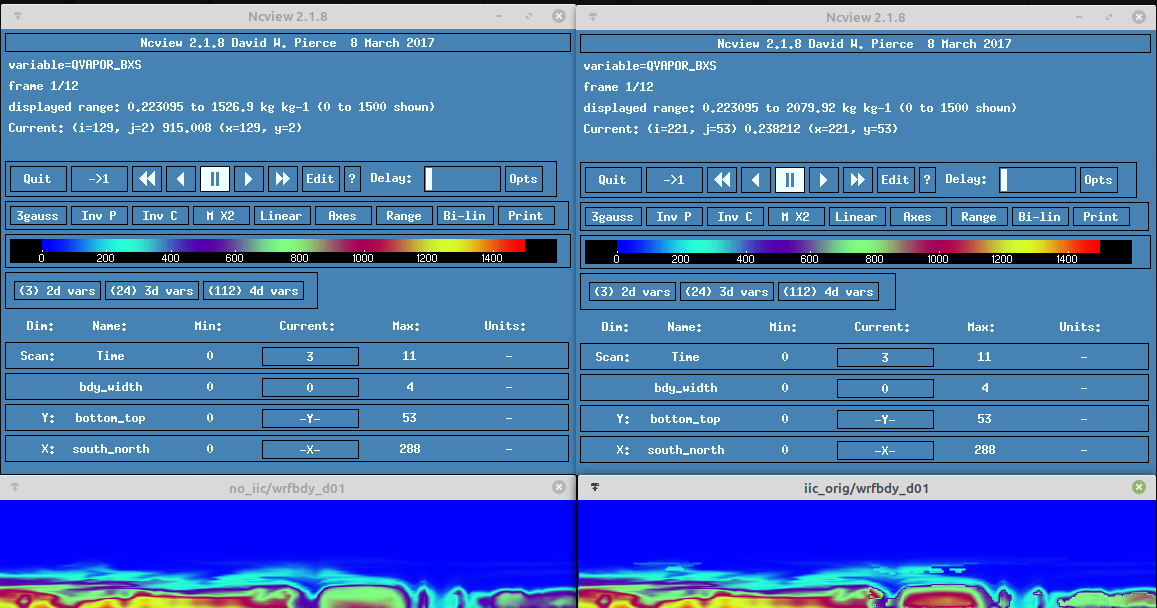

Screenshots

RAINNC, left image: insert_init_cloud = .true. (please click on the image to enlarge it and look at the lateral boundary, especially near the south-west corner); right image: insert_init_cloud = .false.

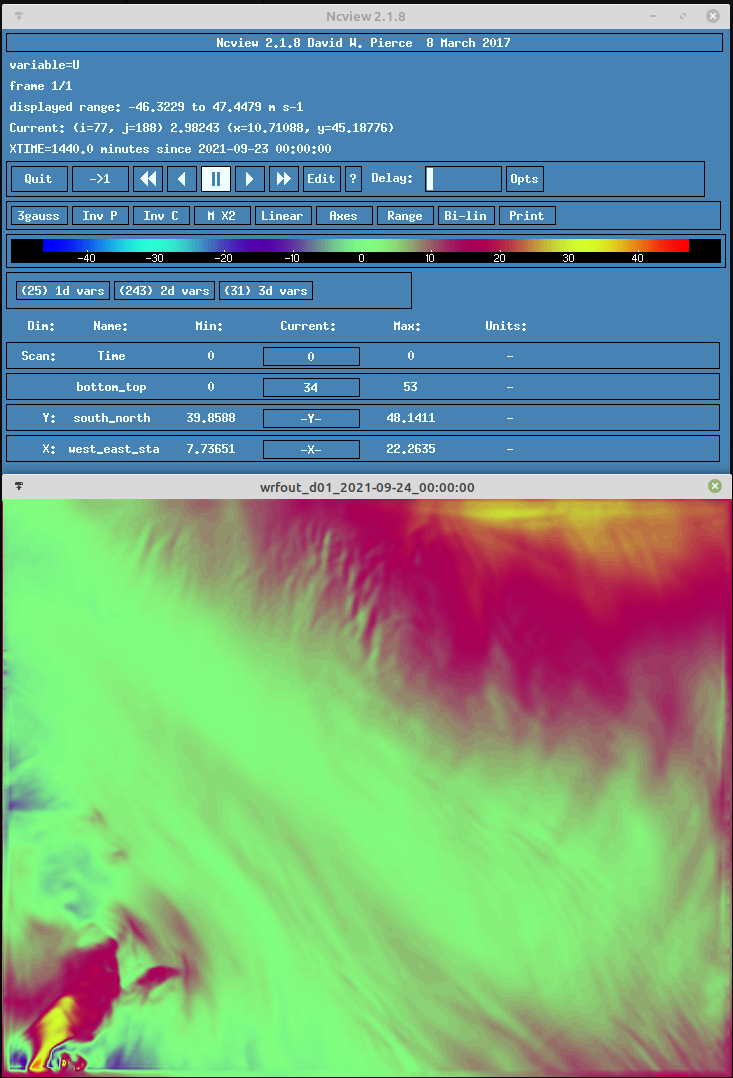

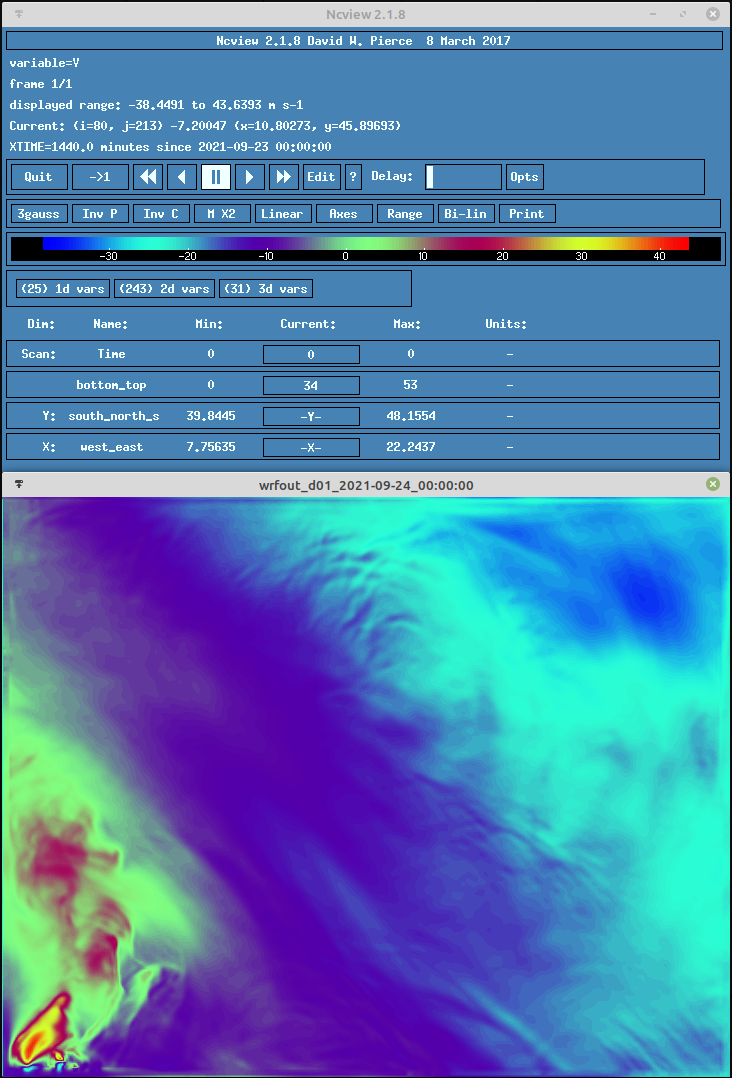

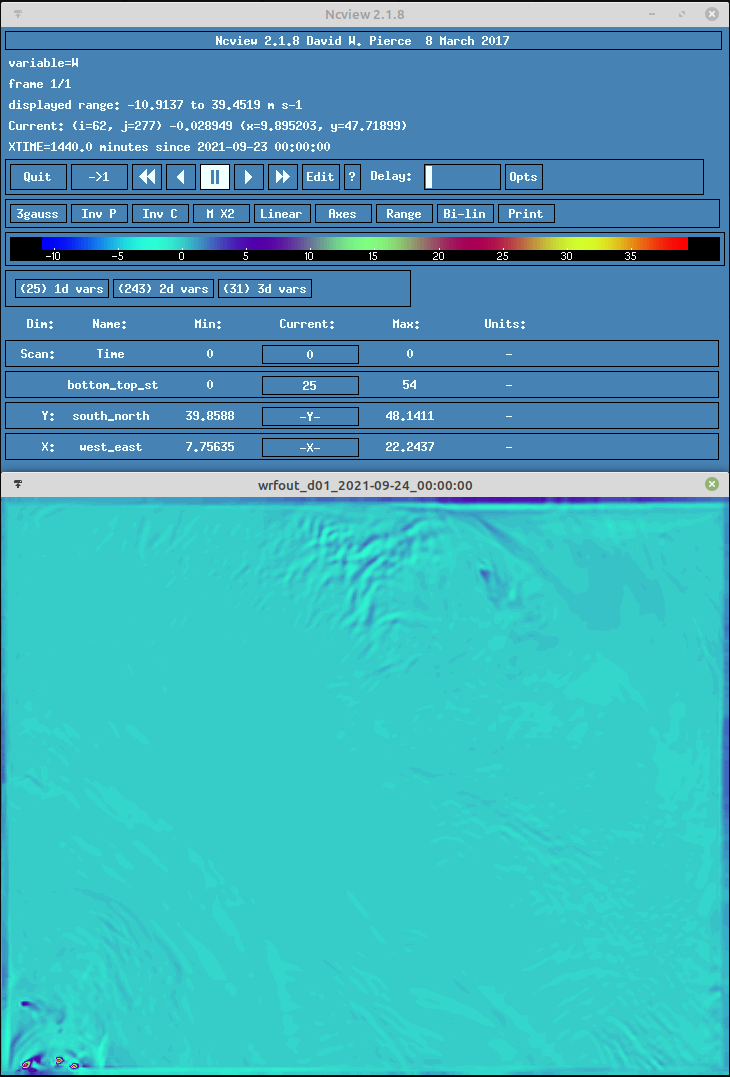

A few additional fields (u, v, w) showing the intensity of the storm near the south-west corner, 24 hours after the start of the simulation:

Attachments

Attached: namelist.input and namelist.wps (namelist.wps.txt, namelist.input.txt)

Additional context

The run shown here is forced by ICON-EU on model levels, following https://github.com/wrf-model/WPS/pull/154. However, that has nothing to do with the results: forcing with GFS was also tested and shows the same behaviour for this particular day, with the expected slight differences, of course.

As this option seems to be a game changer for the quality of cold-start simulations, I'm reporting this issue in the hope that it can be improved further.

After further investigation, I found that the option modifies not only the initial state but also the boundary conditions at later times. That could easily explain the unexpected behaviour in the lateral boundary areas of the domain. I'm not sure this was the original intention of the insert_init_cloud scheme. As I understand it, the scheme should populate the CLDFRA field in the wrfinput file, which is empty without this option, and should not modify the boundary conditions any further (i.e. it should leave the wrfbdy file unchanged).

If that is correct, please take a look at this comparison.

The left half of the image is the real.exe output (wrfbdy file) without insert_init_cloud activated; the right half is a real.exe rerun with the option activated. As you can see, the option also modifies the vapor variables at later times (both color scales are set to the same range, 0-1500), which could explain the unreasonable simulations we see around the domain border.

I hope I didn't write something totally bizarre. I'm not really sure whether this is how it should be, but logic tells me otherwise.

Best regards, Ivan Toman

Dear all,

/arrogant mode on/ Now I will also assume that I'm correct in the two posts above and that the new scheme is implemented improperly in the code, so I'm also proposing a fix. /arrogant mode off/

As an experiment, I changed this block of code in module_initialize_real.F (line 4352):

```fortran
if (config_flags%insert_init_cloud .AND. &
    (P_QC .gt. PARAM_FIRST_SCALAR .AND. &
     P_QI .gt. PARAM_FIRST_SCALAR)) then
```

into this:

```fortran
if (config_flags%insert_init_cloud .AND. &
    (P_QC .gt. PARAM_FIRST_SCALAR .AND. &
     P_QI .gt. PARAM_FIRST_SCALAR) .AND. &
    internal_time_loop .EQ. 1) then
```

I simply added a new condition: internal_time_loop must equal 1 for the block to be executed. As a result, CLDFRA is still populated in wrfinput, but for all boundary times after the first one in the wrfbdy file, the scheme no longer changes the QVAPOR* fields.
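To make the effect of the extra condition concrete, here is a minimal Fortran sketch. It is not WRF code: the module `init_cloud_guard` and the function `cloud_block_runs` are hypothetical names, and the logical argument `have_qc_qi` stands in for the `P_QC`/`P_QI` checks. It only assumes, as described above, that the pass with `internal_time_loop = 1` produces the initial state (wrfinput) and the first wrfbdy time, while later passes produce only boundary times.

```fortran
! Hypothetical sketch, NOT the actual WRF source: it only models the two
! versions of the IF condition discussed in this issue.
module init_cloud_guard
  implicit none
contains
  ! Returns .true. when the cloud-insertion block would execute for a given
  ! pass of the (assumed) real.exe time loop.
  !   guarded = .false. : original condition, runs at every time level
  !   guarded = .true.  : proposed condition, runs only when internal_time_loop == 1
  logical function cloud_block_runs(insert_init_cloud, have_qc_qi, &
                                    internal_time_loop, guarded)
    logical, intent(in) :: insert_init_cloud  ! namelist switch
    logical, intent(in) :: have_qc_qi         ! stands in for the P_QC/P_QI checks
    logical, intent(in) :: guarded            ! apply the proposed extra condition?
    integer, intent(in) :: internal_time_loop ! 1 = initial time, >1 = later boundary times
    cloud_block_runs = insert_init_cloud .and. have_qc_qi
    if (guarded) cloud_block_runs = cloud_block_runs .and. (internal_time_loop == 1)
  end function cloud_block_runs
end module init_cloud_guard
```

Calling `cloud_block_runs` for `internal_time_loop = 1, ..., 5` yields `.true.` five times with `guarded = .false.` but only once with `guarded = .true.`, which mirrors the behaviour reported above: without the guard, every boundary time in wrfbdy receives the moisture modification.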

I performed a test run (the same case as in the posts above). The boundary storm still develops at the start, because the vapor mixing ratio is increased at the first wrfbdy time, but it dies off quickly, about an hour into the simulation. Overall, the effect of CLDFRA is as evident as before, but the boundary errors are mostly gone. I'm happy with the modification.

I'll leave the final solution, if you think one should be developed, to you. If I understand everything correctly, the remaining vapor mixing ratio change in wrfbdy at time = 0 should not be there either, but the experimental fix that removes the changes from subsequent boundary times is fine for me.

Best regards, Ivan Toman

We should get @pedro-jm to look at this. Probably we don't want initial clouds at the boundary or we need a consistent mod in the boundary file(?)

(Reply sent by email to Ivan Toman's comment of Sat, Sep 25, 2021, 2:06 PM.)

I have been seeing the same issues, but I was never able to track them down to insert_init_cloud before. This is a great catch.
