Add more pretrained models

Good job! I see that another repo (https://github.com/williamyang1991/DualStyleGAN) has many other style models. Can you integrate them into this repo? I have downloaded the models, but I can't use them in VToonify's code.

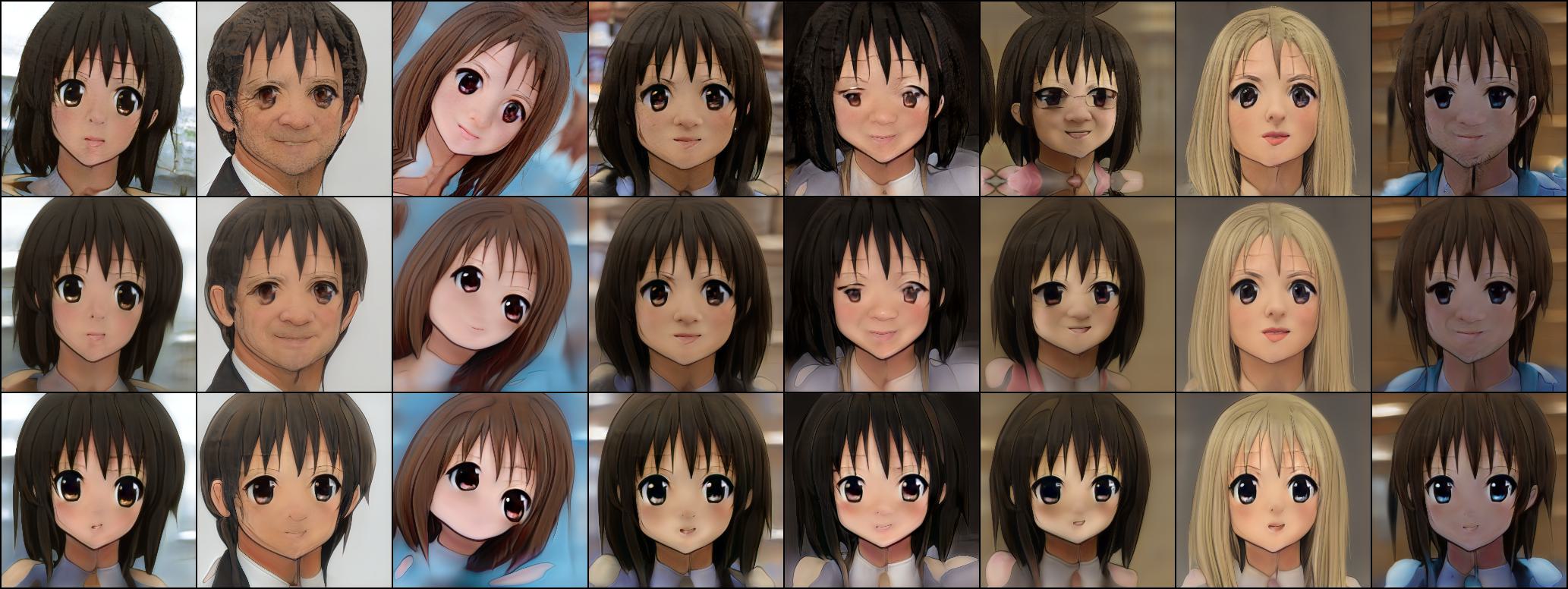

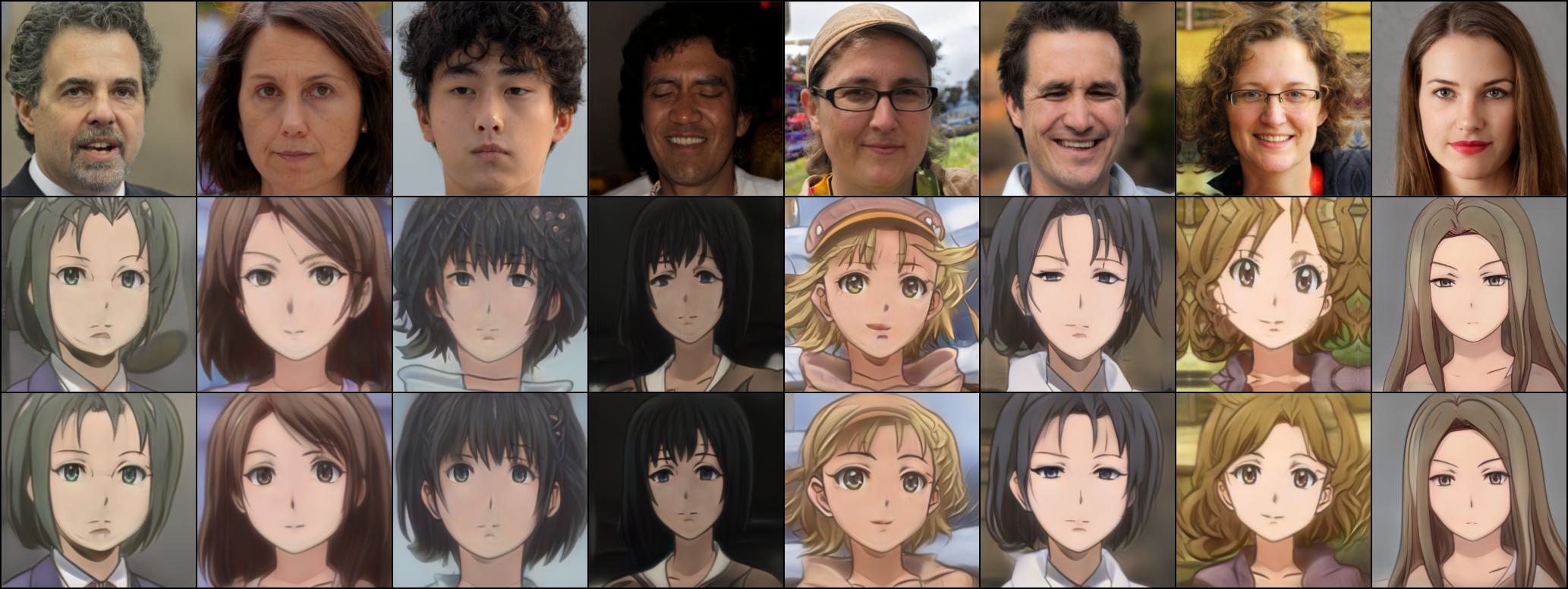

We have tried the Anime style, but the results are not satisfactory. For styles that are far from real faces, the correspondence between the inputs and the outputs is weakened and the motion looks weird. Therefore, we didn't release those style models.

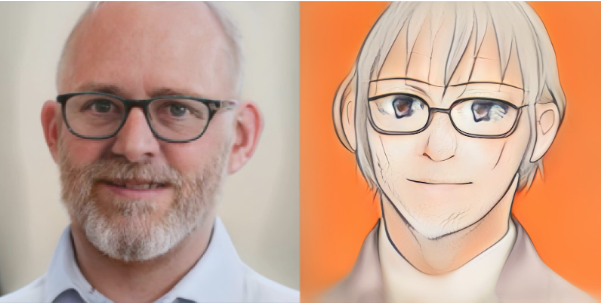

Here is an example of the Anime style model.

Thank you for your explanation. Your model is very interesting, and I still hope to do some experiments with these models if possible. Maybe you can tell me how to use that repo's (I mean DualStyleGAN's) models in VToonify's code? They seem to be trained from the same network.

You only need to train the corresponding encoder to match the DualStyleGAN model, using the following two commands:

https://github.com/williamyang1991/VToonify#train-vtoonify-d

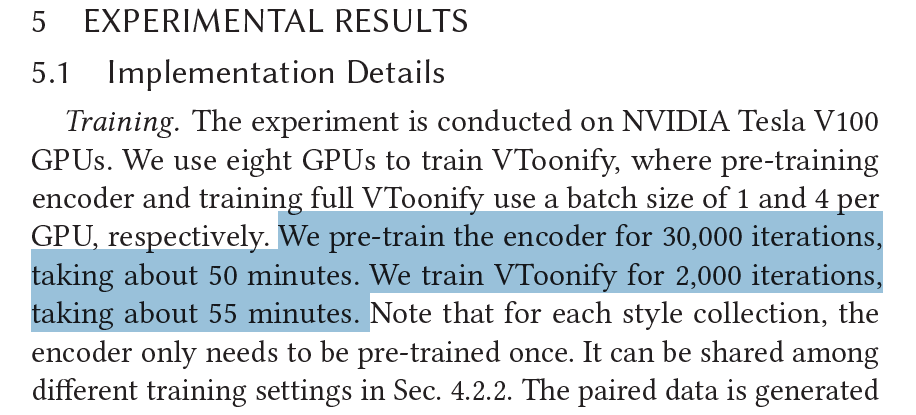

    # for pre-training the encoder
    python -m torch.distributed.launch --nproc_per_node=N_GPU --master_port=PORT train_vtoonify_d.py \
           --iter ITERATIONS --stylegan_path DUALSTYLEGAN_PATH --exstyle_path EXSTYLE_CODE_PATH \
           --batch BATCH_SIZE --name SAVE_NAME --pretrain

    # for training VToonify-D given the pre-trained encoder
    python -m torch.distributed.launch --nproc_per_node=N_GPU --master_port=PORT train_vtoonify_d.py \
           --iter ITERATIONS --stylegan_path DUALSTYLEGAN_PATH --exstyle_path EXSTYLE_CODE_PATH \
           --batch BATCH_SIZE --name SAVE_NAME # + ADDITIONAL STYLE CONTROL OPTIONS

OK, I wonder how long the training will take.

THX!


Thanks for your detailed reply. I trained the anime model based on the DualStyleGAN checkpoint (generator.pt from https://drive.google.com/drive/folders/1YvFj33Bfum4YuBeqNNCYLfiBrD4tpzg7), but the result does not look like the anime style.

Would you please share VToonify's anime checkpoint?

My checkpoint was trained with color transfer. You do not need to specify --fix_color during training or testing.


Thanks for your reply. I trained the model without --fix_color, but the log images still do not look like the Anime style.

I see.

You can specify --fix_style and --style_id to learn one anime style, or change

https://github.com/williamyang1991/VToonify/blob/db57c27b4189023a5330c21b015a8e78cc111b87/train_vtoonify_d.py#L245-L250

to the following (removing the "and args.fix_style" part of the condition):

    if not args.fix_color:
        xl = style.clone()
    else: # this will augment the colors so the anime color will be eliminated!
        xl = pspencoder(F.adaptive_avg_pool2d(xc, 256))
        xl = g_ema.zplus2wplus(xl) # E_s(x''_down)
        xl = torch.cat((style[:,0:7], xl[:,7:18]), dim=1).detach() # w'' = concatenate E_s(s) and E_s(x''_down)
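To make the last line of that snippet concrete, here is a minimal sketch in plain Python (no PyTorch) of which layers of the mixed code come from which source. The layer count of 18 is inferred from the slice bounds `0:7` and `7:18`; the string labels and the helper name `mix_layers` are only illustrative and are not part of the VToonify code.

```python
# Toy illustration of the layer mixing done by
# torch.cat((style[:, 0:7], xl[:, 7:18]), dim=1).
# Each "code" is a list of 18 per-layer labels instead of a real tensor.

NUM_LAYERS = 18  # inferred from the slice bounds 0:7 and 7:18
SPLIT = 7        # the first 7 layers are taken from the style code


def mix_layers(style_code, input_code, split=SPLIT):
    """Hypothetical helper: keep style_code[:split], then input_code[split:]."""
    assert len(style_code) == len(input_code) == NUM_LAYERS
    return style_code[:split] + input_code[split:]


style_code = ["E_s(s)"] * NUM_LAYERS       # stands in for `style`
input_code = ["E_s(x_down)"] * NUM_LAYERS  # stands in for the encoded `xl`

mixed = mix_layers(style_code, input_code)
for layer, source in enumerate(mixed):
    print(layer, source)
```

By contrast, `xl = style.clone()` takes all layers from the style code, with nothing re-encoded from the augmented input.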

If you instead use --fix_style and --style_id to learn one anime style, you also need to specify --fix_color.

So the style options are

--fix_color --fix_degree --style_degree 0.5 --fix_style --style_id 114

You can tune --style_degree to find the best results.
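As a rough sketch of what tuning could look like, the script below only prints one training command per candidate degree, so you can review them before running anything. It assumes the style options are appended to the second training command from earlier in this thread (the one marked with additional style control options). The helper name `build_command` and the candidate values are made up for illustration, and N_GPU, PORT, and the other upper-case names are still placeholders.

```python
import shlex

# Base command copied from the training instructions in this thread.
# N_GPU, PORT, ITERATIONS, ... are placeholders to fill in yourself.
BASE_CMD = (
    "python -m torch.distributed.launch --nproc_per_node=N_GPU --master_port=PORT "
    "train_vtoonify_d.py --iter ITERATIONS --stylegan_path DUALSTYLEGAN_PATH "
    "--exstyle_path EXSTYLE_CODE_PATH --batch BATCH_SIZE --name SAVE_NAME"
)


def build_command(style_degree, style_id=114):
    """Hypothetical helper: append the single-style options to BASE_CMD."""
    style_opts = [
        "--fix_color",
        "--fix_degree", "--style_degree", str(style_degree),
        "--fix_style", "--style_id", str(style_id),
    ]
    return BASE_CMD + " " + " ".join(shlex.quote(opt) for opt in style_opts)


# The candidate degrees are arbitrary examples, not recommended values.
commands = [build_command(degree) for degree in (0.3, 0.5, 0.7)]
for cmd in commands:
    print(cmd)
```

If you run several of these, give each run its own SAVE_NAME so the runs are easy to tell apart.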

Thanks for your suggestion. I have trained the anime model on DualStyleGAN following it. By the way, I also trained VToonify based on the toonify generator, without mixing shallow and deep layers, and it looks more like anime than the layer-mixed generator.

Thanks again for your kind help!

I think your results look good!

How can I create my own style to use with this?