DualStyleGAN

add web demo/model to Huggingface

Hi, would you be interested in adding DualStyleGAN to Hugging Face? The Hub offers free hosting, and it would make your work more accessible and visible to the rest of the ML community. We can create a username similar to your GitHub one, under which the models/Spaces/datasets can be added.

Examples from other organizations: Keras: https://huggingface.co/keras-io Microsoft: https://huggingface.co/microsoft Facebook: https://huggingface.co/facebook

Example Spaces with repos: GitHub: https://github.com/salesforce/BLIP Spaces: https://huggingface.co/spaces/akhaliq/BLIP

GitHub: https://github.com/facebookresearch/omnivore Spaces: https://huggingface.co/spaces/akhaliq/omnivore

Here are guides for adding Spaces/models/datasets to your org:

How to add a Space: https://huggingface.co/blog/gradio-spaces How to add models: https://huggingface.co/docs/hub/adding-a-model Uploading a dataset: https://huggingface.co/docs/datasets/upload_dataset.html

Please let us know if you would be interested or have any questions; we can also help with the technical implementation.

@williamyang1991 Also, could there be an option in Colab to use the CPU for inference?

@williamyang1991 A web demo was added (https://huggingface.co/spaces/hysts/DualStyleGAN) by https://huggingface.co/hysts on Hugging Face. I made a PR to link it: https://github.com/williamyang1991/DualStyleGAN/pull/3

> @williamyang1991 A web demo was added (https://huggingface.co/spaces/hysts/DualStyleGAN) by https://huggingface.co/hysts on Hugging Face. I made a PR to link it: #3

Thank you for the impressive web demo!

> @williamyang1991 Also, could there be an option in Colab to use the CPU for inference?

I will modify it when I have free time.

Hi, @AK391, it seems that there is an issue on your impressive web demo: https://github.com/williamyang1991/DualStyleGAN/issues/4#issuecomment-1077196647

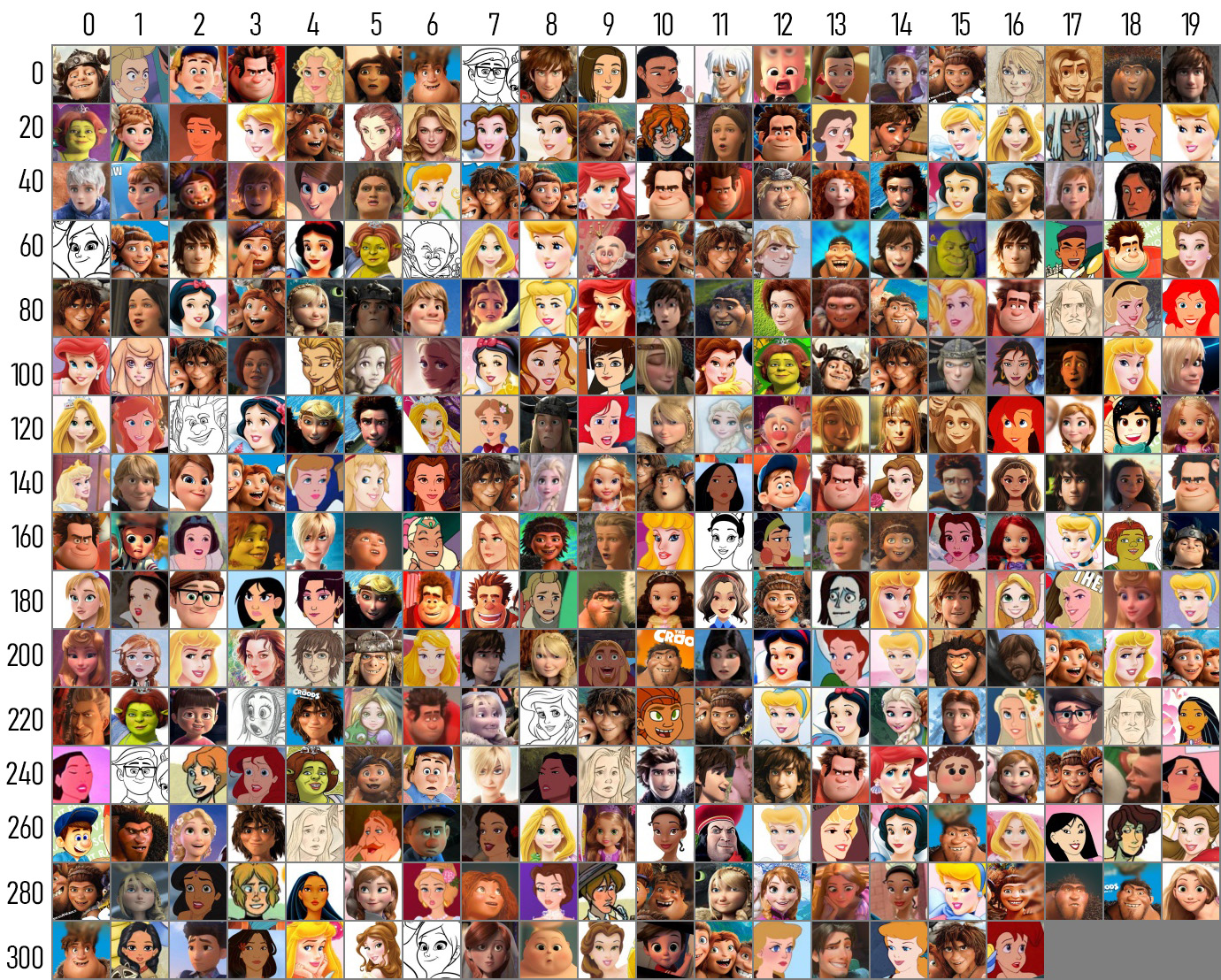

I think it would be great if you could kindly add the style overview below to the web demo, so that users can visually browse the styles for easy use. :)

> @williamyang1991 Also, could there be an option in Colab to use the CPU for inference?

Hi, @AK391, I modified the code and successfully ran the notebook on CPU following the instructions in the README:

> If no GPU is available, you may refer to Inference on CPU, and set `device = 'cpu'` in the notebook.
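A minimal sketch of the device switch described in the README note, assuming a PyTorch-based notebook. `pick_device` is a hypothetical helper, not part of the DualStyleGAN repository; it falls back to CPU whenever CUDA (or PyTorch itself) is unavailable.

```python
try:
    import torch  # optional here so the sketch also runs where PyTorch is absent
except ImportError:
    torch = None


def pick_device(prefer_gpu: bool = True) -> str:
    """Return 'cuda' when a GPU is usable, otherwise 'cpu' (hypothetical helper)."""
    if prefer_gpu and torch is not None and torch.cuda.is_available():
        return "cuda"
    return "cpu"


device = pick_device()
# Checkpoints saved on a GPU machine usually need map_location when loaded on CPU, e.g.:
#   state = torch.load(checkpoint_path, map_location=device)  # checkpoint_path is a placeholder
print(device)
```

Passing `prefer_gpu=False` forces CPU inference even on a machine that has a GPU, which is handy for reproducing CPU-only problems.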

@williamyang1991 Thanks. The demo was set up by a community member, https://huggingface.co/hysts, and it was recently updated to add the table.

@williamyang1991

Hi, awesome work!

I wrote the code for the web demo, and happened to find this issue, so I added the style image table you provided. Thank you.

@hysts Thanks for setting up the demo, it looks great. Are there plans to add the other styles, for example Arcane, Anime and Slam Dunk?

@AK391 Well, I wasn't planning to do it, but I can. But the problem is that style images for other style types are not provided, so we'll have the same issue as #4.

@williamyang1991 Is it possible for you to provide style image tables like the one in https://github.com/williamyang1991/DualStyleGAN/issues/2#issuecomment-1077283097 for other style types?

@hysts Some of the style images we used, such as those for Slam Dunk, Arcane and Pixar, were collected from the Internet. I'm not sure we can release them without acquiring the corresponding copyrights. If we just need a style table for style reference, maybe I can generate a style table from the reconstructed style images? These images are generated from the intrinsic and extrinsic style codes of the original style images via our network. These copyright-free generated images should look like the original ones and are enough to help users visually browse the styles.

@williamyang1991

I see.

> If we just need a style table for style reference, maybe I can generate a style table from the reconstructed style images?

I think that would work.

By the way, I updated the web demo to include other styles, currently without the reference style images except cartoon.

@hysts I have uploaded style tables here.

Cartoon, Caricature and Anime are built from public datasets, so I use the original images.

Other styles use the generated images (see Reconstruction in the filenames).

@williamyang1991 Cool! Thank you very much! I updated the web demo.

@hysts I saw the bar to adjust the weights for more flexible style transfer was also added. Great job! Thank you very much for this awesome web demo!

@hysts I just saw that the demo is down. Can you restart it by going to Settings -> restart button?

@AK391 Thank you for letting me know. I just restarted it. Looks like it's out of memory, but I wonder why that happens.

      File "/home/user/.local/lib/python3.8/site-packages/gradio/routes.py", line 265, in predict
        output = await run_in_threadpool(app.launchable.process_api, body, username)
      File "/home/user/.local/lib/python3.8/site-packages/starlette/concurrency.py", line 39, in run_in_threadpool
        return await anyio.to_thread.run_sync(func, *args)
      File "/home/user/.local/lib/python3.8/site-packages/anyio/to_thread.py", line 28, in run_sync
        return await get_asynclib().run_sync_in_worker_thread(func, *args, cancellable=cancellable,
      File "/home/user/.local/lib/python3.8/site-packages/anyio/_backends/_asyncio.py", line 818, in run_sync_in_worker_thread
        return await future
      File "/home/user/.local/lib/python3.8/site-packages/anyio/_backends/_asyncio.py", line 754, in run
        result = context.run(func, *args)
      File "/home/user/.local/lib/python3.8/site-packages/gradio/interface.py", line 573, in process_api
        prediction, durations = self.process(raw_input)
      File "/home/user/.local/lib/python3.8/site-packages/gradio/interface.py", line 615, in process
        predictions, durations = self.run_prediction(
      File "/home/user/.local/lib/python3.8/site-packages/gradio/interface.py", line 531, in run_prediction
        prediction = predict_fn(*processed_input)
      File "/home/user/.local/lib/python3.8/site-packages/torch/autograd/grad_mode.py", line 27, in decorate_context
        return func(*args, **kwargs)
      File "app.py", line 175, in run
        img_rec, instyle = encoder(input_data,
      File "/home/user/.local/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl
        return forward_call(*input, **kwargs)
      File "DualStyleGAN/model/encoder/psp.py", line 97, in forward
        images, result_latent = self.decoder([codes],
      File "/home/user/.local/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl
        return forward_call(*input, **kwargs)
      File "DualStyleGAN/model/stylegan/model.py", line 573, in forward
        out = conv1(out, latent[:, i], noise=noise1)
      File "/home/user/.local/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl
        return forward_call(*input, **kwargs)
      File "DualStyleGAN/model/stylegan/model.py", line 363, in forward
        out = self.conv(input, style, externalweight)
      File "/home/user/.local/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl
        return forward_call(*input, **kwargs)
      File "DualStyleGAN/model/stylegan/model.py", line 284, in forward
        out = self.blur(out)
      File "/home/user/.local/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl
        return forward_call(*input, **kwargs)
      File "DualStyleGAN/model/stylegan/model.py", line 88, in forward
        out = upfirdn2d(input, self.kernel, pad=self.pad)
      File "DualStyleGAN/model/stylegan/op/upfirdn2d.py", line 152, in upfirdn2d
        out = upfirdn2d_native(input, kernel, *up, *down, *pad)
      File "DualStyleGAN/model/stylegan/op/upfirdn2d.py", line 188, in upfirdn2d_native
        out = F.conv2d(out, w)
    RuntimeError: [enforce fail at alloc_cpu.cpp:73] . DefaultCPUAllocator: can't allocate memory: you tried to allocate 1073741824 bytes. Error code 12 (Cannot allocate memory)
    libgomp: Thread creation failed: Resource temporarily unavailable
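To put the failed allocation in the traceback into perspective, here is a small arithmetic sketch. It assumes the tensor being allocated holds 32-bit floats, which the log does not state; the example shape in the comment is purely illustrative and is not taken from the traceback.

```python
FAILED_ALLOCATION_BYTES = 1_073_741_824  # value reported in the RuntimeError
BYTES_PER_FLOAT32 = 4  # assumption: the tensor dtype is float32

gib = FAILED_ALLOCATION_BYTES / 2**30
float32_elements = FAILED_ALLOCATION_BYTES // BYTES_PER_FLOAT32

print(f"{gib:.0f} GiB, {float32_elements:,} float32 values")
# 2**28 elements is, for instance, the size of a 256-channel 1024x1024 tensor
# (illustrative shape only).
assert float32_elements == 256 * 1024 * 1024
```

A single intermediate buffer of this size is 1 GiB, so a few of them alive at once could plausibly exhaust a host whose memory is already mostly occupied by loaded models.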

@williamyang1991 Tagging you about the memory issue. @hysts There is another error now: RuntimeError: can't start new thread

Edit: it is back up now.
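The `libgomp: Thread creation failed` and `can't start new thread` messages suggest the process hit a thread limit. One possible mitigation, sketched here as an assumption rather than a confirmed fix for this Space, is to cap the OpenMP thread pool before PyTorch is imported. `MAX_THREADS` is an arbitrary illustrative value.

```python
import os

MAX_THREADS = 4  # assumed cap; choose a value that suits the host

# OMP_NUM_THREADS must be set before importing libraries that start an
# OpenMP thread pool (for example PyTorch).
os.environ.setdefault("OMP_NUM_THREADS", str(MAX_THREADS))

try:
    import torch
    torch.set_num_threads(MAX_THREADS)  # also cap PyTorch's intra-op threads
except ImportError:
    torch = None  # the sketch still runs without PyTorch installed

print(os.environ["OMP_NUM_THREADS"])
```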

@AK391 Not sure what causes the memory issue. My testing code only runs two feed-forward processes with a maximum batch size of two. I don't think it will use so much memory.

I'm not familiar with the technical implementation of the web demo. Will the demo release the memory after every inference? There are 7 models for 7 styles. Will they all be loaded into memory?
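If keeping all seven style models resident turns out to be the problem, one option is to load them lazily and keep only the most recently used one. The sketch below illustrates that idea with `functools.lru_cache`; `get_generator` is a hypothetical stand-in, not the loader used by the actual web demo.

```python
from functools import lru_cache

load_count = 0  # counts how often the (expensive) loader actually runs


@lru_cache(maxsize=1)  # keep only the most recently used style's model cached
def get_generator(style_type: str) -> dict:
    """Hypothetical loader; a real app would build the network and load its checkpoint here."""
    global load_count
    load_count += 1
    return {"style_type": style_type}  # stand-in for a loaded model object


get_generator("cartoon")
get_generator("cartoon")  # cache hit, no reload
get_generator("anime")    # evicts the cartoon entry and loads anime
print(load_count)  # 2
```

The trade-off is latency: every switch between styles pays the loading cost again, and an evicted model is only freed once nothing else holds a reference to it.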

@AK391 @williamyang1991

I'm not sure, but I guess it was just a temporary glitch in the Hugging Face Spaces environment, because it came back up without my doing anything. Also, the app worked just fine in my local environments (macOS and Ubuntu) and in a GCP environment with 4 CPUs and 16 GB RAM, while the Hugging Face Spaces documentation says

> Each Spaces environment is limited to 16GB RAM and 8 CPU cores.
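To compare actual usage against that limit, the process's peak memory can be logged after each inference. This is a minimal sketch using Python's standard `resource` module (Unix only); `run_with_memory_log` is a hypothetical wrapper, and `sum` stands in for the real inference function.

```python
import resource
import sys


def peak_rss_mib() -> float:
    """Peak resident set size of this process in MiB (Unix only)."""
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    # ru_maxrss is reported in kilobytes on Linux and in bytes on macOS.
    divisor = 2**20 if sys.platform == "darwin" else 2**10
    return peak / divisor


def run_with_memory_log(fn, *args, **kwargs):
    """Call fn and print the process's peak memory afterwards (hypothetical wrapper)."""
    result = fn(*args, **kwargs)
    print(f"peak RSS after {fn.__name__}: {peak_rss_mib():.1f} MiB")
    return result


run_with_memory_log(sum, range(1_000_000))
```

Because the value is a high-water mark, it never decreases; a steady climb across requests would point to memory that is not released between inferences.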