Thomas Wagenaar

The trainer will try to minimise your cost function.
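To make "minimise your cost function" concrete, here is a minimal sketch in plain JavaScript. It is not synaptic's `Trainer`; the one-weight model `y = w * x`, the toy data, and the names `mse`, `rate` and `w` are all assumptions made up for illustration. It only shows the general idea: the training loop keeps nudging the weight in whatever direction makes the cost smaller.

```javascript
// Mean squared error between target values and predicted values.
function mse(targets, outputs) {
  let sum = 0;
  for (let i = 0; i < targets.length; i++) {
    sum += Math.pow(targets[i] - outputs[i], 2);
  }
  return sum / targets.length;
}

// Hypothetical toy data: the relation to learn is y = 2 * x.
const inputs = [1, 2, 3];
const targets = [2, 4, 6];

let w = 0;          // the single trainable weight
const rate = 0.05;  // learning rate (assumed value)

const initialCost = mse(targets, inputs.map(x => w * x));

for (let iteration = 0; iteration < 200; iteration++) {
  // Gradient of the MSE with respect to w: mean of -2 * (t - w * x) * x.
  let gradient = 0;
  for (let i = 0; i < inputs.length; i++) {
    gradient += -2 * (targets[i] - w * inputs[i]) * inputs[i];
  }
  gradient /= inputs.length;

  // Step against the gradient, which lowers the cost.
  w -= rate * gradient;
}

const finalCost = mse(targets, inputs.map(x => w * x));
console.log('weight:', w, 'cost before:', initialCost, 'cost after:', finalCost);
```

Swapping `mse` for another cost changes what "good" means to the loop, which is why the choice of cost function decides what the trainer ends up optimising.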

Just so you know: that is a fairly low error. And you don't have to use `counter`, since the `data` object already contains a counter itself (`data.iterations` or `data.iteration`).

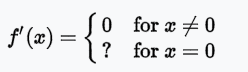

Judging from [Wikipedia](https://en.wikipedia.org/wiki/Activation_function) (see: Binary Step), that assumption is definitely correct. But when the derivative is `0` for every `x != 0`, the [error calculation](https://github.com/cazala/synaptic/blob/master/src/Neuron.js#L160) will...
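A small sketch of why a zero derivative is a problem for gradient-based training. This is the textbook delta rule for a single output neuron, written in plain JavaScript; it is not the linked synaptic code, and the helper names (`step`, `stepDerivative`, `outputDelta`, ...) are made up for illustration.

```javascript
// Binary step activation. Its derivative is 0 everywhere except x = 0,
// where it is undefined; here it is treated as 0 there as well.
function step(x) { return x > 0 ? 1 : 0; }
function stepDerivative(x) { return 0; }

// Logistic activation for comparison; its derivative is never exactly 0
// for moderate inputs.
function logistic(x) { return 1 / (1 + Math.exp(-x)); }
function logisticDerivative(x) {
  const y = logistic(x);
  return y * (1 - y);
}

// Delta rule for one output neuron: (target - output) * f'(netInput).
function outputDelta(target, netInput, activation, derivative) {
  return (target - activation(netInput)) * derivative(netInput);
}

// Same target and same net input, different activation functions.
const stepDelta = outputDelta(1, -0.5, step, stepDerivative);
const logisticDelta = outputDelta(1, -0.5, logistic, logisticDerivative);

console.log('step delta:', stepDelta);         // always 0, whatever the error is
console.log('logistic delta:', logisticDelta); // non-zero, so weights can move
```

With the step function the output is wrong (0 instead of 1), yet the delta is still 0, so a rule of this form has nothing to propagate back.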

The predefined activation functions in the library aren't actually constrained to `(-1,1)`. Only one of them (`TANH`) is. `LOGISTIC` and `HLIM` have a range of `(0,1)`, and `IDENTITY` and `RELU` can get higher...
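You can check those ranges yourself with a quick sketch. The functions below are plain re-implementations based on the standard definitions, keyed by the names used above; they are assumptions for illustration and are not copied from the library. `observedRange` is a hypothetical helper that samples each function on `[-10, 10]`.

```javascript
// Standard definitions, keyed by the names used in the comment above.
const activations = {
  LOGISTIC: x => 1 / (1 + Math.exp(-x)),
  TANH: x => Math.tanh(x),
  IDENTITY: x => x,
  HLIM: x => (x > 0 ? 1 : 0),
  RELU: x => (x > 0 ? x : 0)
};

// Sample fn on [from, to] and return the smallest and largest output seen.
function observedRange(fn, from = -10, to = 10, stepSize = 0.5) {
  const steps = Math.round((to - from) / stepSize);
  let min = Infinity;
  let max = -Infinity;
  for (let i = 0; i <= steps; i++) {
    const y = fn(from + i * stepSize);
    if (y < min) min = y;
    if (y > max) max = y;
  }
  return { min, max };
}

// Collect the observed range for every activation function.
const ranges = {};
for (const name of Object.keys(activations)) {
  ranges[name] = observedRange(activations[name]);
  console.log(name, ranges[name]);
}
```

On this sample `TANH` stays within `-1` and `1`, `LOGISTIC` and `HLIM` stay within `0` and `1`, while `IDENTITY` and `RELU` simply follow the size of the input.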

I'm having no problems, [run it here](https://jsfiddle.net/8eL11jwo/1/).

Here is the JSFiddle: https://jsfiddle.net/wagenaartje/4516xtze/3/

Show some code so we can reproduce the problem :wink:

The number of layers is in no way correlated to the number of outputs. Please read through [Neural Networks 101](https://github.com/cazala/synaptic/wiki/Neural-Networks-101) to get a basic understanding of neural networks. The difference...

Hmmm. Then it's a double bug. So there are two problems: it doesn't work for recurrent networks, and something is going wrong with layer counting. I shouldn't be saying this, but...